avaudioengine

Asked on 2024-08-12


The WWDC sessions matched for this query primarily cover two topics: audio in spatial computing apps built with RealityKit, and real-time machine learning inference on the CPU.

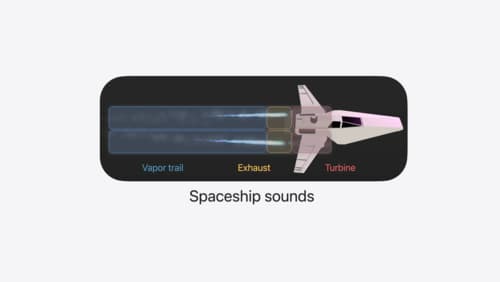

The session "Enhance your spatial computing app with RealityKit audio" focuses on configuring audio components for spatial rendering, using custom audio units, and managing playback with audio playback controllers. It also covers audio mix groups, which let an app control the level of each sound category separately.

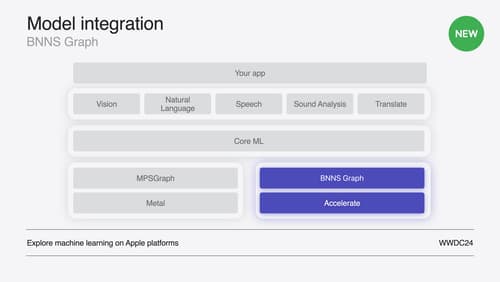

The session "Support real-time ML inference on the CPU" covers using BNNS Graph for real-time audio processing, creating audio units that modify audio data, and keeping the processing path efficient by avoiding memory allocations during execution.
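The "no memory allocation during execution" rule can be illustrated with a minimal sketch in plain C. This is not BNNS Graph or Audio Unit code: `GainEffect`, `gain_effect_init`, `gain_effect_process`, and `gain_effect_destroy` are hypothetical names invented for this example, and the `scratch` buffer only stands in for whatever workspace a real inference call would need. The pattern it shows is the general one: allocate everything in a setup step, then reuse it on every render call.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical effect state: everything the render path needs is
   allocated once, before audio starts. */
typedef struct {
    float *scratch;     /* workspace reused on every render call */
    size_t max_frames;  /* capacity of scratch, in frames */
    float gain;         /* linear gain applied to each sample */
} GainEffect;

/* Setup path (not real-time): allocation is allowed here.
   Returns 0 on success, -1 if the workspace could not be allocated. */
int gain_effect_init(GainEffect *fx, size_t max_frames, float gain) {
    fx->scratch = calloc(max_frames, sizeof(float));
    if (fx->scratch == NULL) {
        return -1;
    }
    fx->max_frames = max_frames;
    fx->gain = gain;
    return 0;
}

/* Render path (real-time): no malloc, no locks, no I/O.
   The caller must never pass more frames than were reserved at init. */
void gain_effect_process(GainEffect *fx, const float *in, float *out,
                         size_t frames) {
    assert(frames <= fx->max_frames);
    /* Compute into the preallocated workspace... */
    for (size_t i = 0; i < frames; i++) {
        fx->scratch[i] = in[i] * fx->gain;
    }
    /* ...then copy the result to the output buffer. */
    for (size_t i = 0; i < frames; i++) {
        out[i] = fx->scratch[i];
    }
}

/* Teardown path (not real-time): release the workspace. */
void gain_effect_destroy(GainEffect *fx) {
    free(fx->scratch);
    fx->scratch = NULL;
    fx->max_frames = 0;
}
```

In this sketch the host would call `gain_effect_init` once with the largest buffer size it expects, call `gain_effect_process` from the audio callback for every buffer, and call `gain_effect_destroy` after audio has stopped. Sizing the workspace for the maximum frame count up front is what lets the render path run without touching the allocator.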

For more on adding audio to spatial computing apps or on real-time audio processing with machine learning, see the two sessions listed below.

Support real-time ML inference on the CPU

Discover how you can use BNNSGraph to accelerate the execution of your machine learning model on the CPU. We will show you how to use BNNSGraph to compile and execute a machine learning model on the CPU and share how it provides real-time guarantees such as no runtime memory allocation and single-threaded running for audio or signal processing models.

Enhance your spatial computing app with RealityKit audio

Elevate your spatial computing experience using RealityKit audio. Discover how spatial audio can make your 3D immersive experiences come to life. From ambient audio, reverb, to real-time procedural audio that can add character to your 3D content, learn how RealityKit audio APIs can help make your app more engaging.