Gesture recognizers

Asked on 2024-08-12

Gesture recognizers came up in several WWDC 2024 sessions, mainly in the context of visionOS and UIKit.

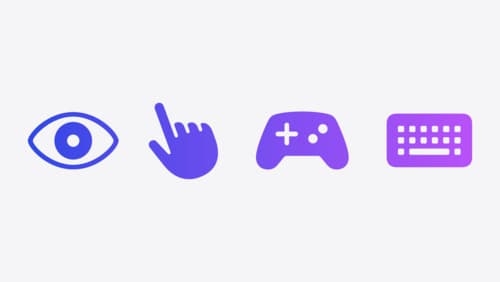

The session "Explore game input in visionOS" walks through system gestures such as tap, double tap, pinch and hold, and pinch and drag. A game can use these gestures to interact with objects in its environment, for example to zoom, rotate, or move objects with hand movements. The session also covers how to combine system gestures for more complex interactions and how to create custom gestures using full hand skeleton tracking through ARKit. For more details, refer to the "Explore game input in visionOS" session.
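As a rough illustration of the system gestures described above, the following sketch attaches a tap and a pinch-and-drag gesture to a RealityKit entity from SwiftUI. It is not taken from the session: the view name `GameBoardView` and the box entity are hypothetical, and it assumes a visionOS target where `RealityView` and entity-targeted gestures are available.

```swift
import SwiftUI
import RealityKit

// Hypothetical view for illustration; not code from the session.
struct GameBoardView: View {
    var body: some View {
        RealityView { content in
            // A simple box standing in for a game piece.
            let piece = ModelEntity(
                mesh: .generateBox(size: 0.1),
                materials: [SimpleMaterial()]
            )
            piece.name = "piece"
            // An entity needs an input target and collision shapes
            // before system gestures can hit it.
            piece.components.set(InputTargetComponent())
            piece.generateCollisionShapes(recursive: false)
            content.add(piece)
        }
        // Tap: look at an entity and pinch once.
        .gesture(
            SpatialTapGesture()
                .targetedToAnyEntity()
                .onEnded { value in
                    print("Tapped \(value.entity.name)")
                }
        )
        // Pinch and drag: move the entity with the hand.
        .gesture(
            DragGesture()
                .targetedToAnyEntity()
                .onChanged { value in
                    guard let parent = value.entity.parent else { return }
                    // Convert the gesture location into the parent's space.
                    value.entity.position = value.convert(
                        value.location3D, from: .local, to: parent
                    )
                }
        )
    }
}
```

Custom gestures built on ARKit hand tracking need a separate setup and are not covered by this sketch.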

The session "What’s new in UIKit" discusses gesture recognizer integration between UIKit and SwiftUI. iOS 18 introduces the ability to add existing UIKit gesture recognizers directly to SwiftUI hierarchies using the new `UIGestureRecognizerRepresentable` protocol. The session also covers how to coordinate gestures across both frameworks, for example so that single-tap and double-tap gestures defined in different frameworks work together correctly. For more information, see the "What’s new in UIKit" session.
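A minimal sketch of wrapping a UIKit recognizer with `UIGestureRecognizerRepresentable` might look like the following. The names `LongPressLocationGesture`, `onLongPress`, and `CardView` are hypothetical and chosen only for illustration; the sketch assumes an iOS 18 (or later) deployment target.

```swift
import SwiftUI
import UIKit

// Hypothetical wrapper that exposes a UIKit long-press recognizer to SwiftUI.
struct LongPressLocationGesture: UIGestureRecognizerRepresentable {
    // Called with the press location when the long press begins.
    let onLongPress: (CGPoint) -> Void

    // Create the UIKit recognizer that SwiftUI will install.
    func makeUIGestureRecognizer(context: Context) -> UILongPressGestureRecognizer {
        UILongPressGestureRecognizer()
    }

    // Called whenever the recognizer's action fires.
    func handleUIGestureRecognizerAction(
        _ recognizer: UILongPressGestureRecognizer,
        context: Context
    ) {
        guard recognizer.state == .began else { return }
        // Location relative to the view the recognizer is attached to.
        onLongPress(recognizer.location(in: recognizer.view))
    }
}

// Hypothetical SwiftUI view that uses the wrapped recognizer.
struct CardView: View {
    @State private var lastPress: CGPoint?

    var body: some View {
        RoundedRectangle(cornerRadius: 16)
            .fill(.blue)
            .frame(width: 200, height: 120)
            // The representable is attached like any other SwiftUI gesture.
            .gesture(LongPressLocationGesture(onLongPress: { point in
                lastPress = point
            }))
    }
}
```

The representable is passed to the `.gesture` modifier like a native SwiftUI gesture, so an existing UIKit recognizer can be reused without embedding a `UIView`.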

Together, these sessions cover how to use gestures in visionOS games and how to coordinate gesture recognizers across UIKit and SwiftUI.

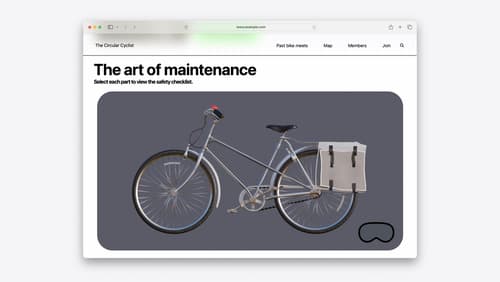

Build immersive web experiences with WebXR

Discover how WebXR empowers you to add fully immersive experiences to your website in visionOS. Find out how to build WebXR experiences that take full advantage of the input capabilities of visionOS, and learn how you can use Simulator to test WebXR experiences on macOS.

Optimize for the spatial web

Discover how to make the most of visionOS capabilities on the web. Explore recent updates like improvements to selection highlighting, and the ability to present spatial photos and panorama images in fullscreen. Learn to take advantage of existing web standards for dictation and text-to-speech with WebSpeech, spatial soundscapes with WebAudio, and immersive experiences with WebXR.

Explore game input in visionOS

Discover how to design and implement great input for your game in visionOS. Learn how system gestures let you provide frictionless ways for players to interact with your games. And explore best practices for supporting custom gestures and game controllers.