OCR app

Asked on 2024-07-30


For developing an OCR (Optical Character Recognition) app, you might find the session "Discover Swift enhancements in the Vision framework" particularly useful. This session covers various aspects of using the Vision framework for image analysis, including text recognition, which is a core component of OCR.

Here are some relevant points from the session:

- Text Recognition: The Vision framework provides a recognize text request (`RecognizeTextRequest` in the redesigned Swift API) that identifies and extracts text within images. This is the core building block of an OCR app.
- Optimizing Vision APIs with Swift Concurrency: For better performance, especially when processing many images, you can use Swift concurrency to run image-processing tasks concurrently.
- Combining Requests: You can perform multiple requests on the same image through a single image request handler, which is useful if you need to scan for both barcodes and text in the same image.
- New Vision API: The session tours the redesigned, concurrency-based Swift API and demonstrates two new features, image aesthetics and holistic body pose. Those two features are not text-specific, but the redesigned API also covers text recognition.
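As a minimal sketch of the text-recognition step, the function below runs `RecognizeTextRequest` on a `CGImage` and keeps the top candidate string for each detected region of text. It assumes the redesigned Swift Vision API (iOS 18 / macOS 15 or later); the function name `recognizeLines(in:)` is hypothetical, and the `.accurate` level and language-correction setting are illustrative choices, not recommendations taken from the session.

```swift
import CoreGraphics
import Vision

// Hypothetical helper: OCR a single image with the Swift Vision API.
@available(macOS 15.0, iOS 18.0, *)
func recognizeLines(in image: CGImage) async throws -> [String] {
    // Configure a local copy of the request before performing it.
    var request = RecognizeTextRequest()
    request.recognitionLevel = .accurate   // slower, higher-quality recognition
    request.usesLanguageCorrection = true  // let Vision correct likely misreadings

    // `perform(on:)` is async, so the caller suspends instead of blocking a thread.
    let observations = try await request.perform(on: image)

    // Each observation is one region of text; keep its best-ranked candidate.
    return observations.compactMap { $0.topCandidates(1).first?.string }
}
```

From an async context, a caller would write `let lines = try await recognizeLines(in: cgImage)`.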
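For batches of images, one way to apply Swift concurrency is a task group that keeps a bounded number of recognitions in flight. This sketch assumes the same iOS 18 / macOS 15 Swift Vision API and that `RecognizeTextRequest.perform(on:)` accepts a file `URL`; `ocrFile(_:)`, `recognizeText(in:maxConcurrent:)`, and the default limit of 4 are hypothetical choices, not values from the session.

```swift
import Foundation
import Vision

// Hypothetical helper: OCR one image file, mapping any failure to nil.
@available(macOS 15.0, iOS 18.0, *)
func ocrFile(_ url: URL) async -> (URL, [String]?) {
    let request = RecognizeTextRequest()
    do {
        let observations = try await request.perform(on: url)
        return (url, observations.compactMap { $0.topCandidates(1).first?.string })
    } catch {
        return (url, nil)  // unreadable file or Vision error
    }
}

// Hypothetical batch API: at most `maxConcurrent` files are processed at once.
@available(macOS 15.0, iOS 18.0, *)
func recognizeText(in urls: [URL], maxConcurrent: Int = 4) async -> [URL: [String]] {
    await withTaskGroup(of: (URL, [String]?).self, returning: [URL: [String]].self) { group in
        var pending = urls[...]

        // Seed the group with the first batch of tasks.
        for _ in 0..<max(1, maxConcurrent) {
            guard let url = pending.popFirst() else { break }
            group.addTask { await ocrFile(url) }
        }

        var results: [URL: [String]] = [:]
        // Each time a task finishes, record its result and start the next file.
        while let result = await group.next() {
            let (url, lines) = result
            if let lines { results[url] = lines }
            if let next = pending.popFirst() {
                group.addTask { await ocrFile(next) }
            }
        }
        return results
    }
}
```

Capping the number of in-flight tasks is a design choice: it keeps memory use predictable when the batch is large, at the cost of some parallelism on machines that could handle more.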
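To scan one image for both barcodes and text, a single `ImageRequestHandler` can serve both requests so the image is supplied only once. This sketch assumes `ImageRequestHandler(_:)` accepts a `CGImage` with a default orientation and that `BarcodeObservation` exposes an optional `payloadString`; it issues the two requests one after the other through the shared handler, and the `ScanResult` type and `scanBarcodesAndText(in:)` name are hypothetical.

```swift
import CoreGraphics
import Vision

// Hypothetical container for everything found in one image.
struct ScanResult {
    var barcodePayloads: [String]
    var textLines: [String]
}

@available(macOS 15.0, iOS 18.0, *)
func scanBarcodesAndText(in image: CGImage) async throws -> ScanResult {
    // One handler wraps the image; both requests below run against it.
    let handler = ImageRequestHandler(image)

    let barcodes = try await handler.perform(DetectBarcodesRequest())
    let text = try await handler.perform(RecognizeTextRequest())

    return ScanResult(
        // Not every barcode carries a string payload, so drop the nils.
        barcodePayloads: barcodes.compactMap { $0.payloadString },
        // Keep the best-ranked candidate for each region of text.
        textLines: text.compactMap { $0.topCandidates(1).first?.string }
    )
}
```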

If you need more specific details or timestamps, feel free to ask!

Relevant Sessions

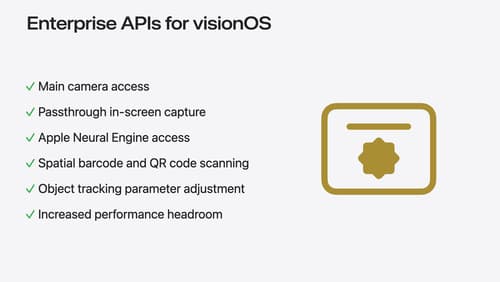

Introducing enterprise APIs for visionOS

Find out how you can use new enterprise APIs for visionOS to create spatial experiences that enhance employee and customer productivity on Apple Vision Pro.

Discover Swift enhancements in the Vision framework

The Vision Framework API has been redesigned to leverage modern Swift features like concurrency, making it easier and faster to integrate a wide array of Vision algorithms into your app. We’ll tour the updated API and share sample code, along with best practices, to help you get the benefits of this framework with less coding effort. We’ll also demonstrate two new features: image aesthetics and holistic body pose.

Discover area mode for Object Capture

Discover how area mode for Object Capture enables new 3D capture possibilities on iOS by extending the functionality of Object Capture to support capture and reconstruction of an area. Learn how to optimize the quality of iOS captures using the new macOS sample app for reconstruction, and find out how to view the final results with Quick Look on Apple Vision Pro, iPhone, iPad or Mac. Learn about improvements to 3D reconstruction, including a new API that allows you to create your own custom image processing pipelines.