instruments

Asked on 2024-08-13


The WWDC sessions you provided cover audio processing and spatial computing, particularly RealityKit audio and SwiftUI enhancements. Here are the key points:

- RealityKit Audio:

  - The session "Enhance your spatial computing app with RealityKit audio" explains how to use audio in spatial computing applications. It covers configuring spatial, ambient, and channel audio components, using custom audio units, and handling audio materials for collision sounds. It also explains how to use audio mix groups and ambient audio for immersive experiences.

-

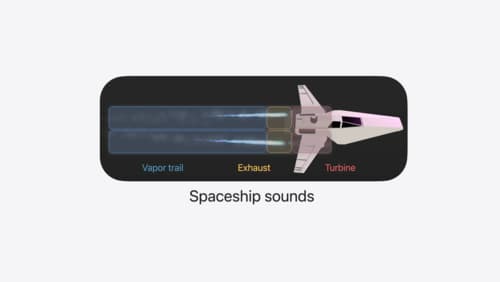

Spatial Web:

- The session "Optimize for the spatial web" highlights the use of audio nodes to create soundscapes, similar to guitar pedals or audio mixing booths. It introduces the concept of a pananode for spatializing audio and discusses the integration of WebXR for immersive virtual reality experiences.

-

SwiftUI Enhancements:

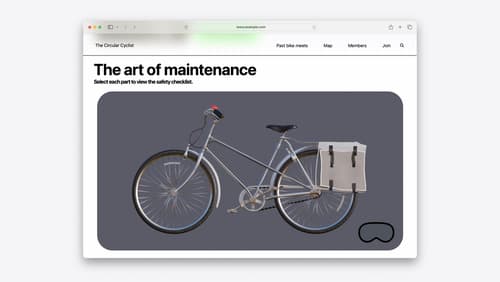

- The session "What’s new in SwiftUI" introduces new APIs for crafting experiences, including working with volumes and immersive spaces. It also covers new features for Apple Pencil and enhancements in UI animations and transitions.

- Real-time ML Inference:

  - The session "Support real-time ML inference on the CPU" discusses using SwiftUI for audio unit interfaces and applying effects like a bitcrusher model. It emphasizes the importance of real-time processing and memory efficiency in audio applications.
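To make the bitcrusher effect and the memory-efficiency point concrete, here is a minimal sketch in TypeScript. It is a plain fixed-function bitcrusher (bit-depth reduction plus sample holding), not the ML model from the session, and the function name `bitcrush` and its parameters are hypothetical. It writes into a caller-supplied buffer so that nothing is allocated while audio is being processed, which is the kind of constraint real-time audio code usually has to respect.

```typescript
// Hypothetical helper, not taken from the session.
// Quantizes `input` to roughly `bits` of resolution and holds each
// quantized value for `hold` samples, writing into a preallocated `output`.
// Assumes: bits >= 1, hold is an integer >= 1, output.length >= input.length.
function bitcrush(
  input: Float32Array,
  output: Float32Array,
  bits: number,
  hold: number
): void {
  // Number of quantization steps per unit of amplitude.
  const levels = 2 ** (bits - 1);
  let held = 0;
  for (let i = 0; i < input.length; i++) {
    if (i % hold === 0) {
      // Take a new sample only every `hold` frames, snapped to the grid.
      const sample = input[i] ?? 0;
      held = Math.round(sample * levels) / levels;
    }
    // No allocation happens inside this loop.
    output[i] = held;
  }
}

// Buffers are allocated once, up front, and reused for every block.
const blockIn = new Float32Array([0.1, 0.3, -0.6, 0.9]);
const blockOut = new Float32Array(blockIn.length);
bitcrush(blockIn, blockOut, 2, 2);
```

Lower `bits` values give a coarser amplitude grid, and larger `hold` values lower the effective sample rate; both add the gritty character the effect is known for.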

If you are interested in a specific topic or need a timestamp for a particular section, please let me know!

Optimize for the spatial web

Discover how to make the most of visionOS capabilities on the web. Explore recent updates like improvements to selection highlighting, and the ability to present spatial photos and panorama images in fullscreen. Learn to take advantage of existing web standards for dictation and text-to-speech with WebSpeech, spatial soundscapes with WebAudio, and immersive experiences with WebXR.
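As a rough illustration of the spatial soundscape idea with WebAudio, the sketch below routes a sound source through a panner node placed at a 3D position. The helper name `spatialize` and the small `...Like` interfaces are assumptions made for this example so that it type-checks outside a browser; in a browser, a real `AudioContext` and its nodes should satisfy the same shapes. The property names used (`panningModel`, `distanceModel`, `refDistance`, `positionX`/`positionY`/`positionZ`) come from the Web Audio `PannerNode` interface.

```typescript
// Minimal structural types (assumptions for this sketch only).
interface ParamLike { value: number; }
interface ConnectableLike { connect(destination: unknown): unknown; }
interface PannerLike extends ConnectableLike {
  panningModel: string;
  distanceModel: string;
  refDistance: number;
  positionX: ParamLike;
  positionY: ParamLike;
  positionZ: ParamLike;
}
interface ContextLike {
  readonly destination: unknown;
  createPanner(): PannerLike;
}
interface Position { x: number; y: number; z: number; }

// Hypothetical helper: source -> panner -> destination.
function spatialize(
  ctx: ContextLike,
  source: ConnectableLike,
  at: Position
): PannerLike {
  const panner = ctx.createPanner();
  panner.panningModel = "HRTF";     // head-related panning for a 3D impression
  panner.distanceModel = "inverse"; // volume falls off with distance
  panner.refDistance = 1;
  panner.positionX.value = at.x;
  panner.positionY.value = at.y;
  panner.positionZ.value = at.z;
  source.connect(panner);
  panner.connect(ctx.destination);
  return panner;
}

// Browser usage (not executed here):
//   const ctx = new AudioContext();
//   const osc = ctx.createOscillator();
//   spatialize(ctx, osc, { x: 2, y: 0, z: -1 });
//   osc.start();
```

Chaining further nodes (gain, filters, reverb) between the source and the panner follows the same `connect` pattern, which is where the guitar-pedal comparison comes from.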

Enhance your spatial computing app with RealityKit audio

Elevate your spatial computing experience using RealityKit audio. Discover how spatial audio can make your 3D immersive experiences come to life. From ambient audio, reverb, to real-time procedural audio that can add character to your 3D content, learn how RealityKit audio APIs can help make your app more engaging.

What’s new in SwiftUI

Learn how you can use SwiftUI to build great apps for any Apple platform. Explore a fresh new look and feel for tabs and documents on iPadOS. Improve your window management with new windowing APIs, and gain more control over immersive spaces and volumes in your visionOS apps. We’ll also take you through other exciting refinements that help you make expressive charts, customize and layout text, and so much more.