How do I use ReferenceFileDocument?

Asked on 2024-08-15

ReferenceFileDocument itself is a SwiftUI protocol for document-based apps; the sessions found here instead cover reference objects, a new file type introduced for object tracking in ARKit on visionOS. Here's a brief overview of how to use a reference object:

- Create a 3D Model: First, you need a 3D model of the real-world object you want to track. This model should be in the USDZ format.
- Train a Machine Learning Model: Use the Create ML app to train a machine learning model with your 3D asset. Training produces a reference object file.
- Load the Reference Object: Once you have the reference object, load it at runtime from a file URL or from a bundle, using the new ARKit API.
- Configure Object Tracking: After loading the reference object, create an object tracking provider with it and run the provider on an ARKit session.
- Process Tracking Results: Once the data provider is running, process the incoming tracking results, which are delivered as object anchors.
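The loading, configuration, and processing steps above can be sketched in Swift roughly as follows. This is a minimal sketch, assuming visionOS 2 or later and a reference object file already exported from Create ML; the function name `trackObject(at:)` and the `fileURL` parameter are hypothetical names chosen for illustration, and the logging stands in for whatever your app does with each anchor.

```swift
import ARKit
import Foundation

/// Loads a reference object from disk, runs object tracking, and logs anchor updates.
/// `fileURL` is a hypothetical location of a reference object file produced by Create ML.
func trackObject(at fileURL: URL) async {
    // Object tracking is not available on every device or in the simulator.
    guard ObjectTrackingProvider.isSupported else {
        print("Object tracking is not supported on this device.")
        return
    }

    do {
        // Load the reference object at runtime from a file URL.
        let referenceObject = try await ReferenceObject(from: fileURL)

        // Configure an object tracking provider with the loaded reference object.
        let objectTracking = ObjectTrackingProvider(referenceObjects: [referenceObject])

        // Run the provider on an ARKit session.
        let session = ARKitSession()
        try await session.run([objectTracking])

        // Process tracking results, which arrive as object anchors.
        for await update in objectTracking.anchorUpdates {
            let anchor = update.anchor
            switch update.event {
            case .added:
                print("Object anchor added: \(anchor.id)")
            case .updated:
                // The transform places the tracked object relative to the world origin.
                print("Tracked: \(anchor.isTracked), transform: \(anchor.originFromAnchorTransform)")
            case .removed:
                print("Object anchor removed: \(anchor.id)")
            }
        }
    } catch {
        // Covers both reference object loading errors and session errors.
        print("Object tracking failed: \(error)")
    }
}
```

You would typically call this from a `Task` while an immersive space is open, since ARKit on visionOS only delivers data in that mode. The `for await` loop keeps the session alive for as long as the task runs; cancelling the task ends the loop.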

For more detailed steps on creating a reference object, you can refer to the session Explore object tracking for visionOS (05:07).

Relevant Sessions

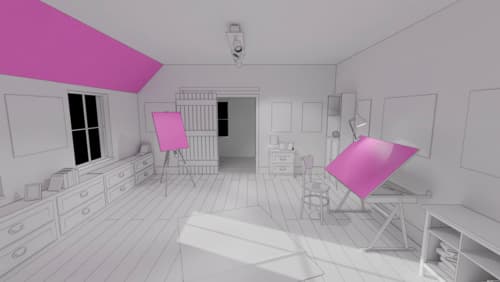

Discover area mode for Object Capture

Discover how area mode for Object Capture enables new 3D capture possibilities on iOS by extending the functionality of Object Capture to support capture and reconstruction of an area. Learn how to optimize the quality of iOS captures using the new macOS sample app for reconstruction, and find out how to view the final results with Quick Look on Apple Vision Pro, iPhone, iPad or Mac. Learn about improvements to 3D reconstruction, including a new API that allows you to create your own custom image processing pipelines.

Create enhanced spatial computing experiences with ARKit

Learn how to create captivating immersive experiences with ARKit’s latest features. Explore ways to use room tracking and object tracking to further engage with your surroundings. We’ll also share how your app can react to changes in your environment’s lighting on this platform. Discover improvements in hand tracking and plane detection which can make your spatial experiences more intuitive.

Use HDR for dynamic image experiences in your app

Discover how to read and write HDR images and process HDR content in your app. Explore the new supported HDR image formats and advanced methods for displaying HDR images. Find out how HDR content can coexist with your user interface — and what to watch out for when adding HDR image support to your app.