RealityKit gesture component

Asked on 2024-08-17

Several WWDC 2024 sessions discuss gestures in RealityKit, with a particular focus on how they can be used in visionOS applications. Here are some key points:

- System Gestures: In the session Explore game input in visionOS, it is explained that system gestures like tap, double tap, pinch and hold, and pinch and drag can be used to interact with objects. These gestures can be combined to create seamless transitions between different actions.

- Custom Gestures: The same session also covers the creation of custom gestures, emphasizing the importance of making them intuitive and providing feedback to the user. This allows for a more personalized interaction experience in games and applications.

- RealityKit API: The session Discover RealityKit APIs for iOS, macOS and visionOS introduces new APIs for spatial tracking, which can be used to track hand movements and gestures more effectively. This is particularly useful for applications that require precise hand tracking, such as controlling a spaceship in a game.

- Debugging Gestures: In the session Break into the RealityKit debugger, the RealityKit debugger is introduced, which can help developers troubleshoot and refine gesture interactions within their applications.

Together, these sessions cover how gestures can be implemented, refined, and debugged in visionOS using RealityKit. If you are interested in a specific aspect, such as debugging or creating custom gestures, refer to the corresponding session for more detail.
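As a rough illustration of the system gestures mentioned above, here is a minimal sketch of a pinch-and-drag interaction on a RealityKit entity in visionOS. It is not taken from any of the sessions. It assumes the commonly documented pattern in which an entity needs both an `InputTargetComponent` and collision shapes before a SwiftUI gesture can target it. The view name `DraggableCubeView` and the cube itself are hypothetical.

```swift
import SwiftUI
import RealityKit

// Hypothetical view name; a sketch of a draggable entity, not code from the sessions.
struct DraggableCubeView: View {
    var body: some View {
        RealityView { content in
            // A simple 20 cm cube to interact with.
            let cube = ModelEntity(
                mesh: .generateBox(size: 0.2),
                materials: [SimpleMaterial(color: .blue, isMetallic: false)]
            )

            // Assumption: both of these are required before gestures can hit the entity.
            cube.components.set(InputTargetComponent())
            cube.generateCollisionShapes(recursive: false)

            content.add(cube)
        }
        .gesture(
            // A system pinch-and-drag gesture, delivered with the entity it targets.
            DragGesture()
                .targetedToAnyEntity()
                .onChanged { value in
                    guard let parent = value.entity.parent else { return }
                    // Convert the gesture location from SwiftUI space into the
                    // parent entity's coordinate space, then move the entity there.
                    value.entity.position = value.convert(
                        value.location3D,
                        from: .local,
                        to: parent
                    )
                }
        )
    }
}
```

Other system gestures (for example a tap) can be attached with the same `targetedToAnyEntity()` approach. Custom gestures based on hand tracking are a separate topic covered in the sessions themselves.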

Build a spatial drawing app with RealityKit

Harness the power of RealityKit through the process of building a spatial drawing app. As you create an eye-catching spatial experience that integrates RealityKit with ARKit and SwiftUI, you’ll explore how resources work in RealityKit and how to use features like low-level mesh and texture APIs to achieve fast updates of the users’ brush strokes.

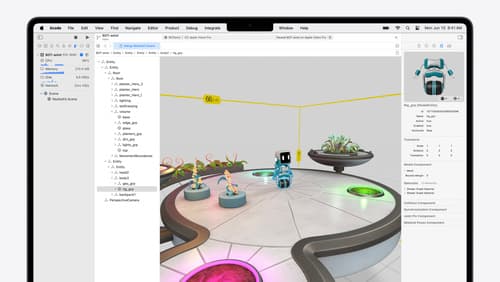

Break into the RealityKit debugger

Meet the RealityKit debugger and discover how this new tool lets you inspect the entity hierarchy of spatial apps, debug rogue transformations, find missing entities, and detect which parts of your code are causing problems for your systems.

Explore object tracking for visionOS

Find out how you can use object tracking to turn real-world objects into virtual anchors in your visionOS app. Learn how you can build spatial experiences with object tracking from start to finish. Find out how to create a reference object using machine learning in Create ML and attach content relative to your target object in Reality Composer Pro, RealityKit or ARKit APIs.