How do I create a VStack?

Asked on 2024-08-18


It looks like you're asking about VStack, a SwiftUI container view. The WWDC sessions returned by the search don't cover it directly, but here's how to create a VStack in SwiftUI, a framework Apple covers extensively at WWDC.

In SwiftUI, a VStack is used to arrange views vertically. Here's a simple example:

```swift
import SwiftUI

struct ContentView: View {
    var body: some View {
        // Child views are laid out top to bottom in the order they're declared.
        VStack {
            Text("Hello, World!")
            Text("Welcome to SwiftUI")
        }
    }
}
```

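This code creates a vertical stack containing two text views. `VStack` also accepts optional `alignment` (how children line up horizontally, `.center` by default) and `spacing` (the distance between adjacent children) parameters, and you can apply modifiers such as `.padding()` or `.frame(...)` to the stack as a whole.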
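As a sketch of those options, the view below passes `alignment: .leading` and `spacing: 8` to `VStack` and then applies modifiers to the entire stack. `ProfileHeader` and its `name` and `role` properties are hypothetical names chosen for illustration; the `VStack` initializer parameters and the modifiers are standard SwiftUI API.

```swift
import SwiftUI

// Hypothetical view, used only to illustrate VStack's parameters.
struct ProfileHeader: View {
    let name: String
    let role: String

    var body: some View {
        // alignment: .leading lines children up along their leading edge
        // (the default is .center); spacing: 8 puts 8 points between them.
        VStack(alignment: .leading, spacing: 8) {
            Text(name)
                .font(.headline)
            Text(role)
                .font(.subheadline)
                .foregroundStyle(.secondary)
        }
        // Modifiers applied here affect the stack as a whole.
        .padding()
        .frame(maxWidth: .infinity, alignment: .leading)
    }
}
```

To try it, you could place `ProfileHeader(name: "Taylor", role: "iOS Engineer")` inside `ContentView`'s body. If you omit `spacing`, the stack chooses a default distance between each pair of subviews.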

If you have any questions about the specific sessions from WWDC, feel free to ask!

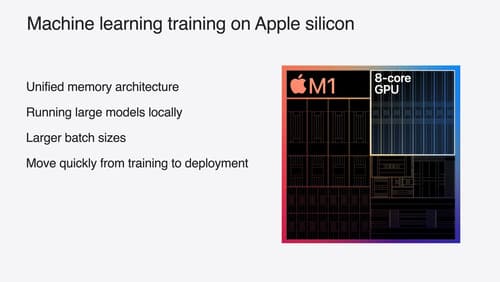

Train your machine learning and AI models on Apple GPUs

Learn how to train your models on Apple Silicon with Metal for PyTorch, JAX and TensorFlow. Take advantage of new attention operations and quantization support for improved transformer model performance on your devices.

Build a spatial drawing app with RealityKit

Harness the power of RealityKit through the process of building a spatial drawing app. As you create an eye-catching spatial experience that integrates RealityKit with ARKit and SwiftUI, you’ll explore how resources work in RealityKit and how to use features like low-level mesh and texture APIs to achieve fast updates of the users’ brush strokes.

Bring your machine learning and AI models to Apple silicon

Learn how to optimize your machine learning and AI models to leverage the power of Apple silicon. Review model conversion workflows to prepare your models for on-device deployment. Understand model compression techniques that are compatible with Apple silicon, and at what stages in your model deployment workflow you can apply them. We’ll also explore the tradeoffs between storage size, latency, power usage and accuracy.