How to work with AVAudio

Asked on 2024-08-19


To work with AVAudio, you can explore the session titled "Enhance your spatial computing app with RealityKit audio" from WWDC 2024. This session provides insights into using spatial audio sources with real-time generated audio, configuring audio components, and creating immersive audio experiences in apps.

For example, you can configure spatial, ambient, and channel audio components on entities to control how audio buffers are rendered, just as you would for audio file playback. The session also covers a custom audio unit that has an Objective-C interface and real-time-safe code in its render block. Such a unit can drive oscillators and create dynamic audio experiences.
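
"Real-time-safe" means the render block must not allocate memory, take locks, or do I/O, because it runs on the audio thread under a hard deadline. The framework-free sketch below shows what that looks like for an oscillator. It assumes a mono Float32 buffer that the caller allocates ahead of time. `SineOscillator` is a hypothetical type for illustration, not a RealityKit or AVFoundation API. In an app, a render callback, such as the render block of an `AVAudioSourceNode` or a custom `AUAudioUnit`, could call `render(into:)` on each audio cycle.

```swift
import Foundation

/// Hypothetical oscillator used only to illustrate real-time-safe rendering.
/// `render(into:)` performs no allocation, locking, or I/O: it only writes
/// into a buffer that the caller has already allocated.
struct SineOscillator {
    let frequency: Double   // oscillator frequency in Hz
    let sampleRate: Double  // output sample rate in frames per second
    let amplitude: Float    // peak output level (linear, not dB)
    private var phase: Double = 0  // current phase in radians, kept in [0, 2π)

    init(frequency: Double, sampleRate: Double, amplitude: Float = 0.25) {
        self.frequency = frequency
        self.sampleRate = sampleRate
        self.amplitude = amplitude
    }

    /// Fills every slot of `buffer` with a mono sine sample and advances the
    /// stored phase, so the next call continues the waveform without a click.
    mutating func render(into buffer: UnsafeMutableBufferPointer<Float>) {
        let twoPi = 2.0 * Double.pi
        let increment = twoPi * frequency / sampleRate
        for i in 0..<buffer.count {
            buffer[i] = amplitude * Float(sin(phase))
            phase += increment
            if phase >= twoPi { phase -= twoPi }  // wrap to avoid precision loss
        }
    }
}

// Usage: allocate the buffer once, outside the audio thread, then reuse it.
let frameCount = 512
let samples = UnsafeMutableBufferPointer<Float>.allocate(capacity: frameCount)
samples.initialize(repeating: 0)
var osc = SineOscillator(frequency: 440, sampleRate: 48_000)
osc.render(into: samples)
```

Keeping the phase inside the oscillator, rather than recomputing it from a frame counter, lets consecutive buffers join seamlessly regardless of how many frames the host requests per callback.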

If you're interested in learning more about integrating audio into interactive 3D content, the session "Compose interactive 3D content in Reality Composer Pro" might be useful. It covers adding audio resources to entities and using audio library components for playback in code or timelines.

For a deeper dive into audio frameworks and technologies, the session "Port advanced games to Apple platforms" discusses the PHASE framework (Physical Audio Spatialization Engine), which helps you create rich, dynamic audio experiences.

Here are the relevant sessions mentioned:

- Enhance your spatial computing app with RealityKit audio (Spatial audio)

- Compose interactive 3D content in Reality Composer Pro (Introduction)

- Port advanced games to Apple platforms (Audio)

Enhance your spatial computing app with RealityKit audio

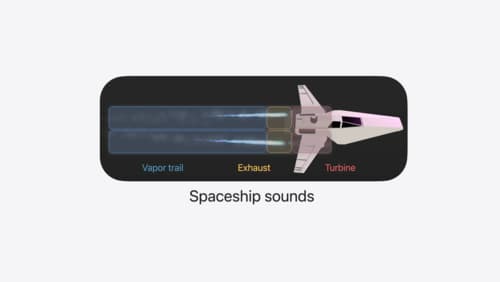

Elevate your spatial computing experience using RealityKit audio. Discover how spatial audio can make your 3D immersive experiences come to life. From ambient audio and reverb to real-time procedural audio that adds character to your 3D content, learn how RealityKit audio APIs can help make your app more engaging.

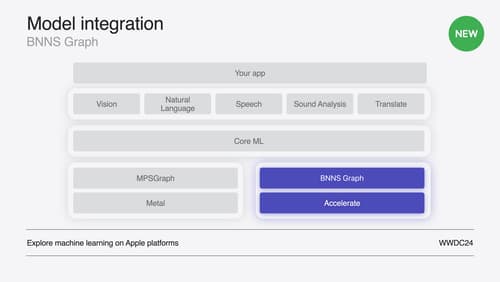

Support real-time ML inference on the CPU

Discover how you can use BNNSGraph to accelerate the execution of your machine learning model on the CPU. We will show you how to use BNNSGraph to compile and execute a machine learning model on the CPU and share how it provides real-time guarantees, such as no runtime memory allocation and single-threaded execution, for audio or signal processing models.

Port advanced games to Apple platforms

Discover how simple it can be to reach players on Apple platforms worldwide. We’ll show you how to evaluate your Windows executable on Apple silicon, start your game port with code samples, convert your shader code to Metal, and bring your game to Mac, iPhone, and iPad. Explore enhanced Metal tools that understand HLSL shaders to validate, debug, and profile your ported shaders on Metal.