Object scanning

Asked on 2024-08-22


At WWDC 2024, Apple introduced several updates to object scanning and capture. The key session, "Discover area mode for Object Capture," covers enhancements to Object Capture on iOS and macOS. Its headline feature is area mode, which can capture uneven terrain outdoors and objects that you cannot walk all the way around. This is particularly useful for creating 3D models for artistic projects or for 3D environments on Apple Vision Pro.

The session also covers improvements to processing on Mac, including a new sample app that simplifies creating various 3D model types. It introduces a new output type, quad mesh, which makes 3D objects easier to edit and animate.
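As a rough illustration of the Mac reconstruction step with quad mesh output, here is a minimal sketch using RealityKit's `PhotogrammetrySession`. It assumes macOS 15 or later and that quad output is selected through the `meshPrimitive` configuration property. The folder and file paths are hypothetical placeholders, and the function name `reconstructQuadMesh` is made up for this example.

```swift
import Foundation
import RealityKit

/// Reconstructs a USDZ model from a folder of captured images,
/// requesting a quad mesh instead of the default triangle mesh.
@available(macOS 15.0, *)
func reconstructQuadMesh(from imagesFolder: URL, to outputModel: URL) async throws {
    var configuration = PhotogrammetrySession.Configuration()
    // Assumption: quad output is requested via `meshPrimitive` (macOS 15+).
    configuration.meshPrimitive = .quad

    let session = try PhotogrammetrySession(input: imagesFolder,
                                            configuration: configuration)

    // Queue a single model-file request at medium detail.
    try session.process(requests: [.modelFile(url: outputModel, detail: .medium)])

    // Consume status messages until the session reports completion.
    for try await output in session.outputs {
        switch output {
        case .requestProgress(_, fractionComplete: let fraction):
            print("Progress: \(Int(fraction * 100))%")
        case .requestComplete(_, .modelFile(let url)):
            print("Model written to \(url.path)")
        case .requestError(_, let error):
            print("Request failed: \(error)")
        case .processingComplete:
            return
        default:
            break
        }
    }
}

// Hypothetical paths; replace with a real folder of captured images.
let imagesFolder = URL(fileURLWithPath: "/tmp/Captures/Images", isDirectory: true)
let outputModel = URL(fileURLWithPath: "/tmp/Captures/model.usdz")

if #available(macOS 15.0, *) {
    try await reconstructQuadMesh(from: imagesFolder, to: outputModel)
}
```

The sketch only runs on a Mac that supports Object Capture reconstruction and needs a folder of real capture images. The sample app mentioned in the session is likely the better starting point for production use.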

For more detail, see the session "Discover area mode for Object Capture."

Additionally, the session "Explore object tracking for visionOS" discusses using Object Capture to create photorealistic 3D models, which serve as input for machine learning training in the Create ML app. The trained reference object is what enables object tracking in visionOS, which can anchor virtual content to real-world items.

For more on object tracking, see the session "Explore object tracking for visionOS."

Discover area mode for Object Capture

Discover how area mode for Object Capture enables new 3D capture possibilities on iOS by extending the functionality of Object Capture to support capture and reconstruction of an area. Learn how to optimize the quality of iOS captures using the new macOS sample app for reconstruction, and find out how to view the final results with Quick Look on Apple Vision Pro, iPhone, iPad or Mac. Learn about improvements to 3D reconstruction, including a new API that allows you to create your own custom image processing pipelines.

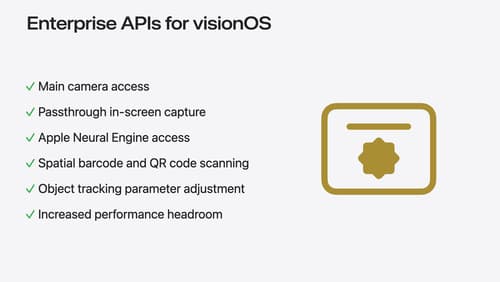

Introducing enterprise APIs for visionOS

Find out how you can use new enterprise APIs for visionOS to create spatial experiences that enhance employee and customer productivity on Apple Vision Pro.

Explore object tracking for visionOS

Find out how you can use object tracking to turn real-world objects into virtual anchors in your visionOS app. Learn how you can build spatial experiences with object tracking from start to finish. Find out how to create a reference object using machine learning in Create ML and attach content relative to your target object in Reality Composer Pro, RealityKit or ARKit APIs.