gesture

Asked on 2024-08-22


Several of Apple's WWDC 2024 sessions cover how gestures work in visionOS and on other Apple platforms. Here are the key points from those sessions:

- System Gestures in visionOS: System gestures are the basic way to interact with games and apps on visionOS. They include tap, double tap, pinch and hold, and pinch and drag. Because these gestures behave the same way across apps and games, players can pick up and play without additional learning. The session "Explore game input in visionOS" shows how they can be used to control game elements (Explore game input in visionOS).

- Combining Gestures: Developers can combine system gestures to create unique interactions. Gestures can be paired to detect simultaneous or sequential actions, and can also be combined with keyboard modifiers for more complex input. This is particularly useful in games where players need to manipulate multiple objects at once (Explore game input in visionOS).

- Custom Gestures: Where system gestures are not enough, developers can create custom gestures. These should be intuitive and should give feedback so that players know they are performing them correctly. Custom gestures can be combined with system gestures, as demonstrated in the game "Blackbox" (Explore game input in visionOS).

-

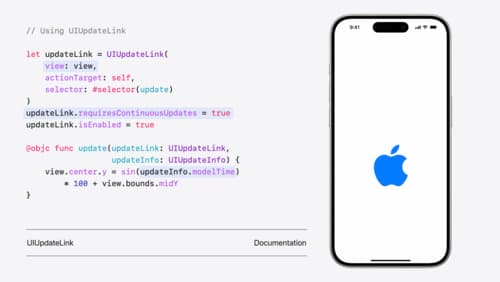

Gesture Coordination in UIKit and SwiftUI: In iOS 18, gesture systems in UIKit and SwiftUI have been unified, allowing for better coordination between gestures across these frameworks. This means developers can specify dependencies between gestures, ensuring smoother interactions (What’s new in UIKit).
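The simultaneous and sequential combinations mentioned above can be sketched with SwiftUI's gesture composition methods, `simultaneously(with:)` and `sequenced(before:)`. This is a minimal illustration and not code from the sessions: the view names, the shapes, and the `clampedScale` helper with its bounds are all assumptions made for the example.

```swift
import SwiftUI

/// Hypothetical helper for this sketch: keeps the scale within arbitrary bounds.
func clampedScale(_ proposed: CGFloat, lower: CGFloat = 0.5, upper: CGFloat = 3.0) -> CGFloat {
    min(max(proposed, lower), upper)
}

/// Simultaneous combination: magnify and rotate are recognized at the same time.
struct SimultaneousExample: View {
    @State private var scale: CGFloat = 1.0
    @State private var angle: Angle = .zero

    var body: some View {
        RoundedRectangle(cornerRadius: 24)
            .fill(.blue)
            .frame(width: 200, height: 200)
            .scaleEffect(scale)
            .rotationEffect(angle)
            .gesture(
                MagnifyGesture()
                    .simultaneously(with: RotateGesture())
                    .onChanged { value in
                        // Either half may be nil until that gesture starts reporting values.
                        if let magnification = value.first?.magnification {
                            scale = clampedScale(magnification)
                        }
                        if let rotation = value.second?.rotation {
                            angle = rotation
                        }
                    }
            )
    }
}

/// Sequential combination: a long press must succeed before the drag is tracked.
struct SequencedExample: View {
    @State private var offset: CGSize = .zero

    var body: some View {
        Circle()
            .fill(.orange)
            .frame(width: 120, height: 120)
            .offset(offset)
            .gesture(
                LongPressGesture(minimumDuration: 0.5)
                    .sequenced(before: DragGesture())
                    .onChanged { value in
                        // The .second case carries the drag value once the long press has completed.
                        if case .second(true, let drag?) = value {
                            offset = drag.translation
                        }
                    }
                    .onEnded { _ in
                        // Snap back to the starting position when the sequence ends.
                        offset = .zero
                    }
            )
    }
}
```

In a visionOS game, the same compositions would typically be targeted at RealityKit entities (for example with `targetedToAnyEntity()`) rather than attached to plain SwiftUI shapes. The shapes are used here only to keep the sketch short.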

Together, these sessions show how gestures can be used and combined to build intuitive interactions in visionOS and across UIKit and SwiftUI.

Design great visionOS apps

Find out how to create compelling spatial computing apps by embracing immersion, designing for eyes and hands, and taking advantage of depth, scale, and space. We’ll share several examples of great visionOS apps and explore how their designers approached creating new experiences for the platform.

What’s new in UIKit

Explore everything new in UIKit, including tab and document launch experiences, transitions, and text and input changes. We’ll also discuss better-than-ever interoperability between UIKit and SwiftUI animations and gestures, as well as general improvements throughout UIKit.

Explore game input in visionOS

Discover how to design and implement great input for your game in visionOS. Learn how system gestures let you provide frictionless ways for players to interact with your games. And explore best practices for supporting custom gestures and game controllers.