Machine vision

Asked on 2024-08-24


At WWDC 2024, Apple introduced several enhancements to the Vision framework that make it easier to integrate computer vision into apps. The framework now has a streamlined API designed for Swift, full support for Swift concurrency, and new capabilities such as calculating image aesthetics scores. That feature assesses image quality based on factors like blur and exposure and assigns the image an overall score.
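As a rough illustration of the aesthetics feature, the sketch below scores one image with the Swift-style request and turns the result into a coarse label. It assumes the iOS 18 / macOS 15 SDK, where `CalculateImageAestheticsScoresRequest` returns an observation with `overallScore` and `isUtility`. The helper `aestheticsLabel`, the function `scoreImage(at:)`, and the 0.5 and 0.0 thresholds are hypothetical names and values chosen for this example, not part of the framework.

```swift
import Foundation
#if canImport(Vision)
import Vision
#endif

/// Hypothetical helper: maps a score to a coarse label.
/// The thresholds are arbitrary assumptions for illustration only.
func aestheticsLabel(overallScore: Float, isUtility: Bool) -> String {
    // Utility images (for example receipts or screenshots) are labeled separately.
    if isUtility { return "utility" }
    if overallScore >= 0.5 { return "high" }
    if overallScore >= 0.0 { return "medium" }
    return "low"
}

#if canImport(Vision)
/// Sketch: runs the aesthetics request on an image file using async/await.
/// Assumes the Swift-only Vision API that shipped with the 2024 SDKs.
@available(iOS 18.0, macOS 15.0, tvOS 18.0, visionOS 2.0, *)
func scoreImage(at url: URL) async throws -> String {
    let request = CalculateImageAestheticsScoresRequest()
    // perform(on:) is async and throws, so no completion handler is needed.
    let observation = try await request.perform(on: url)
    return aestheticsLabel(overallScore: observation.overallScore,
                           isUtility: observation.isUtility)
}
#endif
```

From an async context, something like `let label = try await scoreImage(at: photoURL)` would call it, where `photoURL` is a file URL you supply. The labeling logic is kept outside the `#if` so it can be exercised without the framework.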

Additionally, the Vision framework offers a variety of requests for common computer vision tasks, including image classification, text recognition, and object detection. It can detect and recognize objects like barcodes, people, and animals, and also supports body pose estimation in both 2D and 3D.
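To make the request model concrete, here is a minimal sketch that runs text recognition and barcode detection on the same image concurrently with `async let`. It assumes the iOS 18 / macOS 15 SDK and that `RecognizeTextRequest` and `DetectBarcodesRequest` return observations exposing `topCandidates(_:)` and `payloadString` respectively. The `ScanResult` type and the `scan(imageAt:)` function are hypothetical names introduced for this example.

```swift
import Foundation
#if canImport(Vision)
import Vision
#endif

/// Hypothetical container for what the two requests found.
struct ScanResult: Equatable {
    var textLines: [String]
    var barcodePayloads: [String]

    /// True when neither request produced anything.
    var isEmpty: Bool { textLines.isEmpty && barcodePayloads.isEmpty }
}

#if canImport(Vision)
/// Sketch: performs two Vision requests on one image in parallel.
/// Assumes the Swift-only Vision API that shipped with the 2024 SDKs.
@available(iOS 18.0, macOS 15.0, tvOS 18.0, visionOS 2.0, *)
func scan(imageAt url: URL) async throws -> ScanResult {
    let textRequest = RecognizeTextRequest()
    let barcodeRequest = DetectBarcodesRequest()

    // Start both requests, then await their results.
    async let textObservations = textRequest.perform(on: url)
    async let barcodeObservations = barcodeRequest.perform(on: url)

    let texts = try await textObservations
    let barcodes = try await barcodeObservations

    // Keep the top candidate string for each recognized text region.
    let lines = texts.compactMap { $0.topCandidates(1).first?.string }
    // Keep only barcodes whose payload decodes to a string.
    let payloads = barcodes.compactMap { $0.payloadString }
    return ScanResult(textLines: lines, barcodePayloads: payloads)
}
#endif
```

Running the requests with `async let` is one design choice among several. For larger batches of images, a task group may be a better fit.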

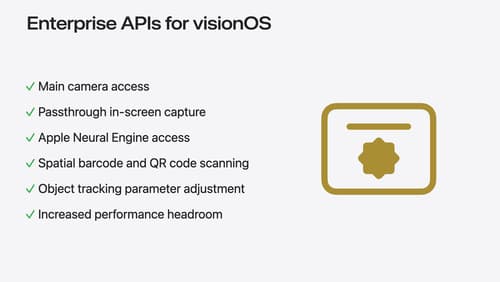

For enterprise applications, Apple also introduced new visionOS APIs that provide enhanced sensor access and object tracking, which can be particularly useful in complex environments such as repair or production lines.

For more detail, see the WWDC 2024 sessions "Discover Swift enhancements in the Vision framework" and "Introducing enterprise APIs for visionOS".

Discover Swift enhancements in the Vision framework

The Vision Framework API has been redesigned to leverage modern Swift features like concurrency, making it easier and faster to integrate a wide array of Vision algorithms into your app. We’ll tour the updated API and share sample code, along with best practices, to help you get the benefits of this framework with less coding effort. We’ll also demonstrate two new features: image aesthetics and holistic body pose.

Introducing enterprise APIs for visionOS

Find out how you can use new enterprise APIs for visionOS to create spatial experiences that enhance employee and customer productivity on Apple Vision Pro.

What’s new in device management

Learn about the latest management capabilities for iOS, iPadOS, macOS, and visionOS, then discover the latest changes to Apple Business Manager and Apple School Manager. We’ll also share updates to Activation Lock, SoftwareUpdate, and Safari management.