bosh

Asked on 2024-08-28

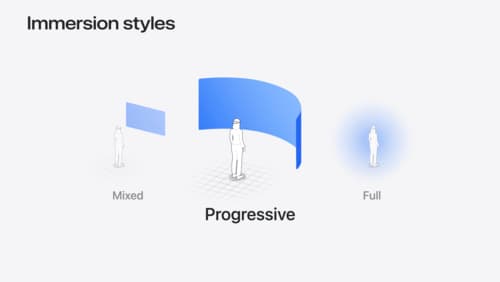

It seems like your question is off-topic. However, I can provide information about the content presented by Apple at WWDC. For example, during the session titled "Dive deep into volumes and immersive spaces," there was a demonstration of how to make a robot react to changes in immersion levels by moving outward or inward. This session also covered placing plants in an immersive environment and using the preferred surrounding effect API to enhance the experience.

If you have any specific questions about the sessions or need timestamps for particular topics, feel free to ask!

Dive deep into volumes and immersive spaces

Discover powerful new ways to customize volumes and immersive spaces in visionOS. Learn to fine-tune how volumes resize and respond to people moving around them. Make volumes and immersive spaces interact through the power of coordinate conversions. Find out how to make your app react when people adjust immersion with the Digital Crown, and use a surrounding effect to dynamically customize the passthrough tint in your immersive space experience.

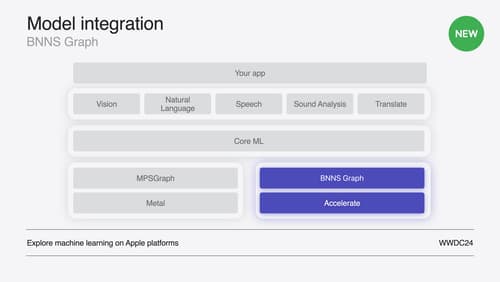

Support real-time ML inference on the CPU

Discover how you can use BNNSGraph to accelerate the execution of your machine learning model on the CPU. We will show you how to use BNNSGraph to compile and execute a machine learning model on the CPU and share how it provides real-time guarantees such as no runtime memory allocation and single-threaded running for audio or signal processing models.

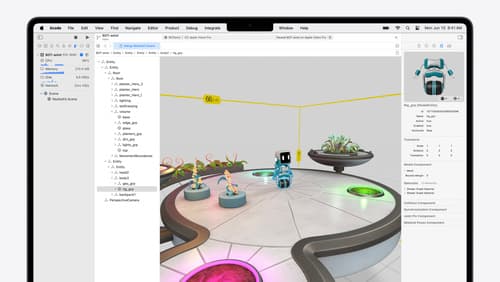

Break into the RealityKit debugger

Meet the RealityKit debugger and discover how this new tool lets you inspect the entity hierarchy of spatial apps, debug rogue transformations, find missing entities, and detect which parts of your code are causing problems for your systems.