How can I use pose estimation features?

Asked on 2024-08-29


To use pose estimation features, you can use the Vision framework's new Swift API, which supports Swift concurrency and Swift 6. Specifically, create a detect human body pose request (DetectHumanBodyPoseRequest) and set its detectsHands property to true. Performing the request then produces a human body pose observation with two additional properties holding the right-hand and left-hand observations. This holistic body pose feature lets you detect hands and body together in a single request, rather than running separate body and hand pose requests.
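As a minimal sketch of the steps described above, the following shows one way the request might be configured and performed. It assumes iOS 18 / macOS 15 or later, and that the property names `detectsHands`, `leftHand`, and `rightHand` match the current SDK (worth checking against the Vision documentation). The function name `detectBodyAndHands` and the image path are hypothetical and used only for illustration.

```swift
import Foundation
import Vision

// Hypothetical helper: runs holistic body pose detection on one image file.
// Assumes the Swift-only Vision API introduced alongside iOS 18 / macOS 15.
func detectBodyAndHands(in imageURL: URL) async throws {
    // Requests are value types in the new API, so declare with `var` to configure.
    var request = DetectHumanBodyPoseRequest()
    request.detectsHands = true  // ask for hand poses together with the body pose

    // perform(on:) is async and throws; it returns one observation per detected person.
    let bodies = try await request.perform(on: imageURL)

    for (index, body) in bodies.enumerated() {
        // With detectsHands enabled, each body observation may carry hand observations.
        // Either one can be nil if that hand was not detected.
        let hasLeftHand = body.leftHand != nil
        let hasRightHand = body.rightHand != nil
        print("Person \(index): left hand detected: \(hasLeftHand), right hand detected: \(hasRightHand)")
    }
}

// Example call site (the path below is a placeholder, not a real file):
// try await detectBodyAndHands(in: URL(fileURLWithPath: "/path/to/photo.jpg"))
```

Because the request is performed with `async`/`await`, it should be called from an asynchronous context such as a `Task` or an `async` function.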

For more detail, see the session "Discover Swift enhancements in the Vision framework" (15:42).

Explore machine learning on Apple platforms

Get started with an overview of machine learning frameworks on Apple platforms. Whether you’re implementing your first ML model, or an ML expert, we’ll offer guidance to help you select the right framework for your app’s needs.

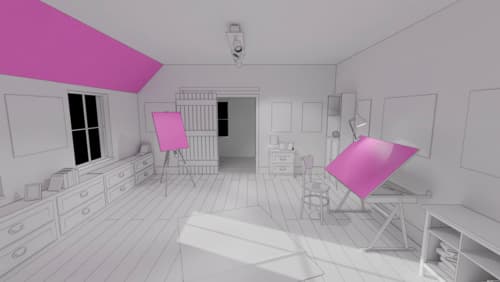

Compose interactive 3D content in Reality Composer Pro

Discover how the Timeline view in Reality Composer Pro can bring your 3D content to life. Learn how to create an animated story in which characters and objects interact with each other and the world around them using inverse kinematics, blend shapes, and skeletal poses. We’ll also show you how to use built-in and custom actions, sequence your actions, apply triggers, and implement natural movements.

Create enhanced spatial computing experiences with ARKit

Learn how to create captivating immersive experiences with ARKit’s latest features. Explore ways to use room tracking and object tracking to further engage with your surroundings. We’ll also share how your app can react to changes in your environment’s lighting on this platform. Discover improvements in hand tracking and plane detection which can make your spatial experiences more intuitive.