How do you create a mergeable library?

Asked on 2024-09-04

To create a mergeable library using Core ML models, you can use the new multifunction model support in Core ML. Here's a brief overview of the process:

- Convert Models: First, convert your models with `ct.convert` and save them as individual ML packages.
- Create a Multifunction Descriptor: Specify which models to merge and the function name each one will have in the merged model.
- Merge Models: Use the `save_multifunction` utility to produce a merged multifunction Core ML model. During this step, Core ML Tools deduplicates weights shared between the models by computing hash values of the weights.
- Load and Use the Model: When loading the multifunction model through the Core ML Tools Python API, specify the function name to load that specific function, then run predictions as usual.
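The merge and load steps above can be sketched with the Core ML Tools Python API as follows. This is a minimal sketch, assuming coremltools 8 or later and that each source package was produced by `ct.convert` and saved as an ML program whose single function is named `main`. The helper names `merge_models` and `load_function`, the package paths, and the function names are hypothetical.

```python
from typing import Dict


def merge_models(functions: Dict[str, str], default_function: str, output_path: str) -> None:
    """Merge single-function .mlpackage files into one multifunction model.

    `functions` maps each source package path to the function name it should
    have in the merged model (hypothetical example: {"model_a.mlpackage": "function_a"}).
    Assumes coremltools 8+ and ML program packages converted with ct.convert.
    """
    # Imported inside the function so this module can be imported without coremltools.
    import coremltools as ct

    desc = ct.utils.MultiFunctionDescriptor()
    for package_path, function_name in functions.items():
        # Assumption: each converted package exposes its computation as "main".
        desc.add_function(
            package_path,
            src_function_name="main",
            target_function_name=function_name,
        )
    # Function that is loaded when no function_name is given.
    desc.default_function_name = default_function
    # Writes the merged package; identical weights across sources are stored once.
    ct.utils.save_multifunction(desc, output_path)


def load_function(model_path: str, function_name: str):
    """Load one named function from a multifunction model for prediction."""
    import coremltools as ct

    return ct.models.MLModel(model_path, function_name=function_name)
```

For example, calling `merge_models({"model_a.mlpackage": "function_a", "model_b.mlpackage": "function_b"}, "function_a", "combined.mlpackage")` and then `load_function("combined.mlpackage", "function_b")` would give a model whose `predict` runs the second source model's function.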

This approach lets you merge models that share a feature extractor, so the shared weights are stored only once instead of once per model, which reduces resource use. For more detail, see the session "Bring your machine learning and AI models to Apple silicon" (at 26:31).
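To illustrate why a shared feature extractor costs little after merging, here is a toy sketch of hash-based weight deduplication in plain Python. It is not the Core ML Tools implementation: the function name `deduplicate`, the choice of SHA-256, and the representation of each weight as a raw byte string are assumptions made for illustration.

```python
import hashlib
from typing import Dict, Tuple

# Toy illustration only; not how Core ML Tools stores weights internally.


def deduplicate(
    functions: Dict[str, Dict[str, bytes]],
) -> Tuple[Dict[str, bytes], Dict[str, Dict[str, str]]]:
    """Store each distinct weight blob once.

    `functions` maps function name -> {weight name -> serialized weight bytes}.
    Returns (store, refs): `store` maps content hash -> bytes, and `refs` maps
    function name -> {weight name -> content hash}.
    """
    store: Dict[str, bytes] = {}
    refs: Dict[str, Dict[str, str]] = {}
    for fn_name, weights in functions.items():
        fn_refs: Dict[str, str] = {}
        for weight_name, blob in weights.items():
            digest = hashlib.sha256(blob).hexdigest()
            # Identical blobs hash to the same key, so they are kept only once.
            store.setdefault(digest, blob)
            fn_refs[weight_name] = digest
        refs[fn_name] = fn_refs
    return store, refs


if __name__ == "__main__":
    backbone = bytes(range(256)) * 4  # stands in for shared feature-extractor weights
    functions = {
        "classifier": {"backbone": backbone, "head": b"classifier-head"},
        "detector": {"backbone": backbone, "head": b"detector-head"},
    }
    store, refs = deduplicate(functions)
    print(len(store))  # 3: one shared backbone plus two distinct heads
```

Both functions end up referencing the same backbone hash, so the merged store holds three blobs instead of four.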

A Swift Tour: Explore Swift’s features and design

Learn the essential features and design philosophy of the Swift programming language. We’ll explore how to model data, handle errors, use protocols, write concurrent code, and more while building up a Swift package that has a library, an HTTP server, and a command line client. Whether you’re just beginning your Swift journey or have been with us from the start, this talk will help you get the most out of the language.

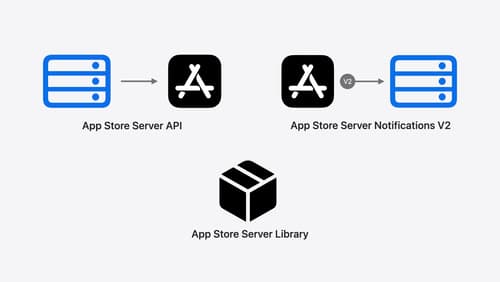

Explore App Store server APIs for In-App Purchase

Learn how to leverage your server to build great In-App Purchase experiences with the latest updates to the App Store Server API, App Store Server Notifications, and the open source App Store Server Library. After a recap of current APIs, we’ll introduce updated endpoint functionality, new transaction fields, and a new notification type. We’ll also discuss best practices for the purchase lifecycle, delivering content, and targeting offers, so you can become a server power user.

Demystify explicitly built modules

Explore how builds are changing in Xcode 16 with explicitly built modules. Discover how modules are used to build your code, how explicitly built modules improve transparency in compilation tasks, and how you can optimize your build by sharing modules across targets.