Optimize image loading in SwiftUI

Asked on 2024-09-06

To optimize image loading in SwiftUI, you can consider several strategies based on the content presented at WWDC:

- Use HDR for Dynamic Image Experiences: If you're working with HDR images, the system handles tone mapping automatically when you display them in a `UIImageView` or a SwiftUI `Image` view. In SwiftUI, apply the `allowedDynamicRange` modifier to the image view to set how much of the file's dynamic range is displayed. This is the most direct way to show HDR images. For more control, such as interactive image changes, consider Core Image and Metal. (Session: Use HDR for dynamic image experiences in your app.)

- Concurrency for Image Processing: When processing many images, such as for display in a grid view, you can use Swift concurrency to process them concurrently in batches rather than one at a time. For example, each task can crop an image to its main subject using a saliency image request. (Session: Discover Swift enhancements in the Vision framework.)

- Dynamic Layouts with SwiftUI: For images and icons shown alongside text, you can use the `ScaledMetric` API so that they resize with the user's selected Dynamic Type text size. This keeps text and image sizes in balance so that essential content stays prioritized. (Session: Get started with Dynamic Type.)
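As a rough illustration of the HDR and Dynamic Type points above, the sketch below applies both SwiftUI APIs in one view. `ThumbnailView`, the asset name passed to it, and the 44-point base size are hypothetical choices made for this example. `allowedDynamicRange(_:)` requires iOS 17 or later.

```swift
import SwiftUI

// Hypothetical view for illustration; the name and sizes are assumptions.
@available(iOS 17.0, macOS 14.0, tvOS 17.0, watchOS 10.0, *)
struct ThumbnailView: View {
    // Name of an image in the app's asset catalog (assumed to exist).
    let imageName: String

    // 44 points at the default text size; scales with the body text style
    // as the user changes their Dynamic Type setting.
    @ScaledMetric(relativeTo: .body) var side: CGFloat = 44

    var body: some View {
        Image(imageName)
            .resizable()
            .scaledToFill()
            .frame(width: side, height: side)
            .clipped()
            // Allow the image's full dynamic range to be shown.
            // Use .constrainedHigh or .standard to limit it.
            .allowedDynamicRange(.high)
    }
}
```

Using `.constrainedHigh` instead of `.high` may be a better fit where many thumbnails appear next to standard-range content, since it limits how bright the highlights can get.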

These strategies can help you optimize image loading and display in SwiftUI, ensuring a smooth and efficient user experience.
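The batch-processing idea from the concurrency point can be sketched with a task group that keeps a bounded number of tasks in flight. This is a minimal sketch, not the session's sample code: `Thumbnail`, `makeThumbnail`, and `processInBatches` are hypothetical names, and `makeThumbnail` is a placeholder for the real per-image work (decoding, or cropping with a saliency request).

```swift
import Foundation

// Hypothetical result type for this sketch.
struct Thumbnail: Sendable, Equatable {
    let index: Int
    let byteCount: Int
}

// Placeholder for real per-image work such as decoding or cropping.
func makeThumbnail(index: Int, data: Data) async -> Thumbnail {
    Thumbnail(index: index, byteCount: data.count)
}

/// Processes `inputs` with at most `maxConcurrent` tasks running at once
/// and returns the results in the original input order.
func processInBatches(_ inputs: [Data], maxConcurrent: Int = 4) async -> [Thumbnail] {
    await withTaskGroup(of: Thumbnail.self, returning: [Thumbnail].self) { group in
        var results = [Thumbnail?](repeating: nil, count: inputs.count)
        var next = 0

        // Start the first batch of tasks.
        while next < min(max(1, maxConcurrent), inputs.count) {
            let i = next
            let data = inputs[i]
            group.addTask { await makeThumbnail(index: i, data: data) }
            next += 1
        }

        // Each time a task finishes, store its result and start the next one,
        // so the number of in-flight tasks never exceeds the limit.
        for await thumbnail in group {
            results[thumbnail.index] = thumbnail
            if next < inputs.count {
                let i = next
                let data = inputs[i]
                group.addTask { await makeThumbnail(index: i, data: data) }
                next += 1
            }
        }

        return results.compactMap { $0 }
    }
}
```

Capping the number of concurrent tasks is one common way to keep peak memory bounded when each task holds a decoded image. The right limit depends on image size and device, so the default of 4 here is only a starting point.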

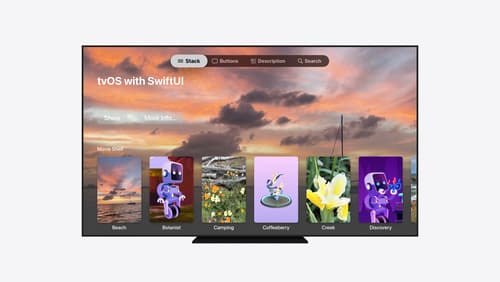

Migrate your TVML app to SwiftUI

SwiftUI helps you build great apps on all Apple platforms and is the preferred toolkit for bringing your content into the living room with tvOS 18. Learn how to use SwiftUI to create familiar layouts and controls from TVMLKit, and get tips and best practices.

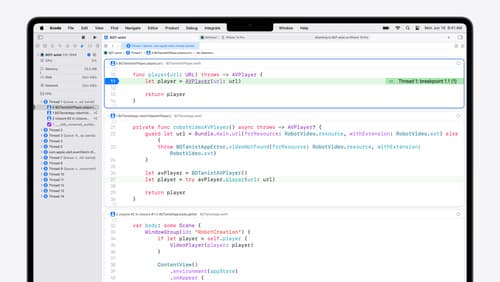

What’s new in Xcode 16

Discover the latest productivity and performance improvements in Xcode 16. Learn about enhancements to code completion, diagnostics, and Xcode Previews. Find out more about updates in builds and explore improvements in debugging and Instruments.

Discover Swift enhancements in the Vision framework

The Vision Framework API has been redesigned to leverage modern Swift features like concurrency, making it easier and faster to integrate a wide array of Vision algorithms into your app. We’ll tour the updated API and share sample code, along with best practices, to help you get the benefits of this framework with less coding effort. We’ll also demonstrate two new features: image aesthetics and holistic body pose.