State

Asked on 2024-09-08


The concept of "state" in machine learning models, as discussed in the WWDC sessions, refers to a model's ability to retain information from one inference to the next. This is particularly useful for models that need to maintain history or context, such as language models that generate text based on previous inputs.

In the session "Bring your machine learning and AI models to Apple silicon", it was highlighted that Core ML now supports stateful models. Core ML manages the state tensors itself, so they no longer need to be defined as model inputs or outputs. This leads to better performance, because the model can update the state in place.
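The difference between passing state through inputs and outputs and updating it in place can be illustrated with a small sketch. This is plain Python, not the Core ML API; `stateless_step` and `StatefulAccumulator` are hypothetical names, and the "model" is just a running sum standing in for a real network.

```python
from array import array


def stateless_step(x, acc):
    """Stateless style: the caller passes the state in and receives a fresh copy back."""
    new_acc = array("d", acc)  # the state buffer is copied on every call
    for i, v in enumerate(x):
        new_acc[i] += v
    return new_acc


class StatefulAccumulator:
    """Stateful style: the buffer lives alongside the model and is updated in place."""

    def __init__(self, size):
        self.acc = array("d", [0.0] * size)

    def step(self, x):
        for i, v in enumerate(x):
            self.acc[i] += v  # no copy, the same buffer is reused
        return self.acc


inputs = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

# Stateless: the caller threads the state through each call.
acc = array("d", [0.0, 0.0])
for x in inputs:
    acc = stateless_step(x, acc)

# Stateful: the caller only supplies the new input.
model = StatefulAccumulator(2)
buffer_id = id(model.acc)
for x in inputs:
    model.step(x)

same_result = list(acc) == list(model.acc)
updated_in_place = id(model.acc) == buffer_id
```

Both variants compute the same result. The stateful one avoids allocating and copying the state on each step, which is the kind of saving the session attributes to in-place updates.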

Similarly, the session "Deploy machine learning and AI models on-device with Core ML" explores the use of state to improve efficiency, particularly in language models. When the key-value cache (KV cache) is managed as Core ML state, the overhead is reduced and predictions are faster.
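To see why a KV cache helps, consider the following toy sketch. It is an illustration under simplifying assumptions, not Core ML code: tokens are single floats, `project` is a hypothetical stand-in for the learned key and value projections, and `attend` is a minimal softmax-weighted sum. The sketch counts how many projections each decoding strategy performs.

```python
import math


def project(token, scale):
    """Hypothetical stand-in for a key or value projection (a real model uses learned weights)."""
    return token * scale


def attend(query, keys, values):
    """Minimal attention: softmax over query*key scores, then a weighted sum of values."""
    scores = [math.exp(query * k) for k in keys]
    total = sum(scores)
    return sum(s / total * v for s, v in zip(scores, values))


def decode_without_cache(tokens):
    """Recompute keys and values for the whole prefix at every step."""
    calls, outputs = 0, []
    for t in range(1, len(tokens) + 1):
        keys, values = [], []
        for tok in tokens[:t]:
            keys.append(project(tok, 2.0))
            values.append(project(tok, 3.0))
            calls += 2
        outputs.append(attend(tokens[t - 1], keys, values))
    return outputs, calls


def decode_with_cache(tokens):
    """Keep keys and values as persistent state and project only the newest token."""
    cache_keys, cache_values = [], []  # the state that persists across steps
    calls, outputs = 0, []
    for tok in tokens:
        cache_keys.append(project(tok, 2.0))
        cache_values.append(project(tok, 3.0))
        calls += 2
        outputs.append(attend(tok, cache_keys, cache_values))
    return outputs, calls


tokens = [0.1, 0.2, 0.3, 0.4]
outputs_plain, calls_plain = decode_without_cache(tokens)
outputs_cached, calls_cached = decode_with_cache(tokens)
```

For four tokens, the uncached loop performs 20 projections and the cached loop performs 8, while both produce identical outputs. The gap grows quadratically with sequence length, which is why keeping the cache as persistent state matters for language models.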

These advancements in handling state within machine learning models on Apple silicon are designed to enhance performance and simplify the development process.

Deploy machine learning and AI models on-device with Core ML

Learn new ways to optimize speed and memory performance when you convert and run machine learning and AI models through Core ML. We’ll cover new options for model representations, performance insights, execution, and model stitching which can be used together to create compelling and private on-device experiences.

Explore wellbeing APIs in HealthKit

Learn how to incorporate mental health and wellbeing into your app using HealthKit. There are new APIs for State of Mind, as well as for Depression Risk and Anxiety Risk. We’ll dive into principles of emotion science to cover how reflecting on feelings can be beneficial, and how State of Mind can be used to represent different types of mood and emotion.

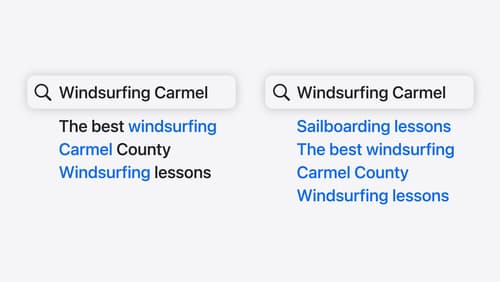

Support semantic search with Core Spotlight

Learn how to provide semantic search results in your app using Core Spotlight. Understand how to make your app’s content available in the user’s private, on-device index so people can search for items using natural language. We’ll also share how to optimize your app’s performance by scheduling indexing activities. To get the most out of this session, we recommend first checking out Core Spotlight documentation on the Apple Developer website.