corelocation

Asked on 2024-09-11


It seems you're interested in Core Location, but the provided context doesn't include specific information about Core Location from the WWDC sessions. However, I can provide information on other topics covered at WWDC 2024, such as machine learning, AI models, and memory management.

For example, if you're interested in machine learning on Apple platforms, you might want to check out the session "Deploy machine learning and AI models on-device with Core ML," which covers new Core ML features for efficiently deploying and running machine learning models on device.

If you have any specific questions about these topics or need information on a different aspect of WWDC, feel free to ask!

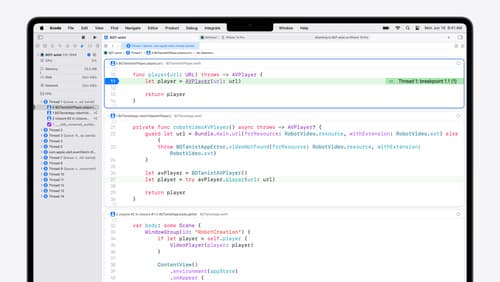

What’s new in Xcode 16

Discover the latest productivity and performance improvements in Xcode 16. Learn about enhancements to code completion, diagnostics, and Xcode Previews. Find out more about updates in builds and explore improvements in debugging and Instruments.

Analyze heap memory

Dive into the basis for your app’s dynamic memory: the heap! Explore how to use Instruments and Xcode to measure, analyze, and fix common heap issues. We’ll also cover some techniques and best practices for diagnosing transient growth, persistent growth, and leaks in your app.

Bring your machine learning and AI models to Apple silicon

Learn how to optimize your machine learning and AI models to leverage the power of Apple silicon. Review model conversion workflows to prepare your models for on-device deployment. Understand model compression techniques that are compatible with Apple silicon, and at what stages in your model deployment workflow you can apply them. We’ll also explore the tradeoffs between storage size, latency, power usage and accuracy.