How do I convert a HandAnchor's position to a 2D screen position?

Asked on 2024-09-17


To convert a HandAnchor's position to a 2D screen position, you would typically follow these steps:

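- Acquire the HandAnchor's 3D position: Run an ARKitSession with a HandTrackingProvider to receive HandAnchor updates. Each anchor's originFromAnchorTransform is a 4x4 matrix that gives the hand's pose relative to the world origin, and its translation column (columns.3) is the 3D position. For a specific joint such as a fingertip, combine that transform with the joint's anchorFromJointTransform from the hand skeleton.

- Transform the 3D position to screen space: Multiply the position (as a homogeneous point with w = 1) by the view matrix, which is the inverse of the viewer's pose in world space, and then by the projection matrix to get clip coordinates. Divide x and y by w to get normalized device coordinates, then map those to pixel coordinates in the viewport. On Apple Vision Pro there is no single screen: each eye is rendered from its own view, so the same point has a different 2D position in each view.

- Use the appropriate APIs: When you render with Metal through Compositor Services, the device pose comes from a WorldTrackingProvider device anchor, and each view of the drawable supplies its own transform relative to the device and its own projection. The session "Render Metal with passthrough in visionOS" discusses how to acquire and use these transforms for rendering content in mixed reality environments.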
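The steps above can be sketched with the simd module. This is a minimal sketch, not Apple's implementation: it assumes you already have the hand's originFromAnchorTransform, the device anchor's transform, one view's transform relative to the device, and that view's projection matrix as simd_float4x4 values, and that the projection maps visible points to x and y in [-1, 1] after the perspective divide. The helper names position(of:) and projectToViewport(_:originFromDevice:deviceFromView:projection:viewportSize:) are hypothetical, and the top-left, y-down pixel convention is an assumption; flip the y term if your viewport uses a bottom-left origin.

```swift
import simd

/// Extracts the 3D position from an anchor's pose matrix, for example a
/// HandAnchor's originFromAnchorTransform. The translation lives in column 3.
func position(of originFromAnchor: simd_float4x4) -> SIMD3<Float> {
    let t = originFromAnchor.columns.3
    return SIMD3<Float>(t.x, t.y, t.z)
}

/// Hypothetical helper: projects a world-space (origin-space) point into the
/// pixel coordinates of one view. All matrices are supplied by the caller.
/// - originFromDevice: the device anchor's pose (assumed from world tracking).
/// - deviceFromView: the view's transform relative to the device.
/// - projection: the view's projection; assumed to yield NDC x, y in [-1, 1].
/// - viewportSize: width and height of the view's viewport in pixels.
/// Returns nil when the point is behind the view (clip-space w <= 0).
func projectToViewport(
    _ originPoint: SIMD3<Float>,
    originFromDevice: simd_float4x4,
    deviceFromView: simd_float4x4,
    projection: simd_float4x4,
    viewportSize: SIMD2<Float>
) -> SIMD2<Float>? {
    // The view matrix is the inverse of the view's pose in world space.
    let viewFromOrigin = (originFromDevice * deviceFromView).inverse
    // World -> view -> clip space, using a homogeneous point (w = 1).
    let clip = projection * viewFromOrigin * SIMD4<Float>(originPoint, 1)
    guard clip.w > 0 else { return nil }
    // Perspective divide gives normalized device coordinates.
    let ndc = SIMD2<Float>(clip.x, clip.y) / clip.w
    // Map NDC [-1, 1] to pixels, assuming a top-left origin with y pointing down.
    let x = (ndc.x + 1) * 0.5 * viewportSize.x
    let y = (1 - ndc.y) * 0.5 * viewportSize.y
    return SIMD2<Float>(x, y)
}
```

In a Compositor Services renderer you would call projectToViewport once per view of the drawable, which yields one 2D position per eye; a nil result means the hand is behind that view.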

For more detail, see the session "Render Metal with passthrough in visionOS" (14:49), which covers trackable anchor prediction and how to handle transforms when rendering content.

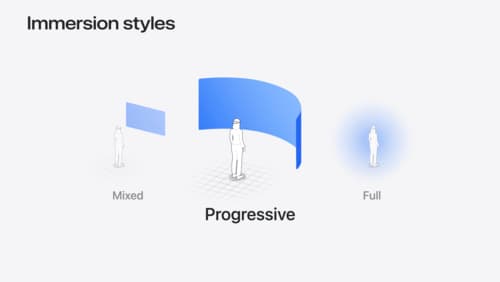

Dive deep into volumes and immersive spaces

Discover powerful new ways to customize volumes and immersive spaces in visionOS. Learn to fine-tune how volumes resize and respond to people moving around them. Make volumes and immersive spaces interact through the power of coordinate conversions. Find out how to make your app react when people adjust immersion with the Digital Crown, and use a surrounding effect to dynamically customize the passthrough tint in your immersive space experience.

Enhance the immersion of media viewing in custom environments

Extend your media viewing experience using Reality Composer Pro components like Docking Region, Reverb, and Virtual Environment Probe. Find out how to further enhance immersion using Reflections, Tint Surroundings Effect, SharePlay, and the Immersive Environment Picker.

Build a spatial drawing app with RealityKit

Harness the power of RealityKit through the process of building a spatial drawing app. As you create an eye-catching spatial experience that integrates RealityKit with ARKit and SwiftUI, you’ll explore how resources work in RealityKit and how to use features like low-level mesh and texture APIs to achieve fast updates of the users’ brush strokes.