Hi, who are you? What can you do?

Asked on 2024-09-20

Hello! I'm an AI assistant designed to help answer questions about the content presented by Apple at the Worldwide Developers Conference (WWDC). I can provide information on various sessions, including new technologies, frameworks, and tools introduced by Apple. If you have any questions about specific sessions or topics covered at WWDC, feel free to ask!

What’s new in Create ML

Explore updates to Create ML, including interactive data source previews and a new template for building object tracking models for visionOS apps. We’ll also cover important framework improvements, including new time-series forecasting and classification APIs.

Customize spatial Persona templates in SharePlay

Learn how to use custom spatial Persona templates in your visionOS SharePlay experience to fine-tune the placement of Personas relative to your app. We’ll show you how to adopt custom spatial Persona templates in a sample app with SharePlay, move participants between seats, and test your changes in Simulator. We’ll also share best practices for designing custom spatial templates that will make your experience shine.

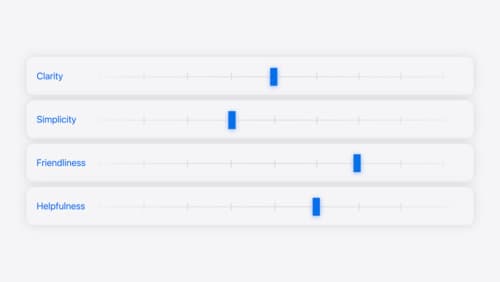

Add personality to your app through UX writing

Every app has a personality that comes across in what you say — and how you say it. Learn how to define your app’s voice and modulate your tone for every situation, from celebratory notifications to error messages. We’ll help you get specific about your app’s purpose and audience and practice writing in different tones.