How do I start a document-based project?

Asked on 2024-10-03


To get started with a document-based project, you can follow the guidance from the session titled "Evolve your document launch experience" from WWDC 2024. Here are the steps you can take:

- Recompile with the iOS 18 SDK: Begin by recompiling your SwiftUI app with the iOS 18 SDK. This lets you adopt the new customizable launch experience.
- Document group launch scene: Add a document group launch scene to customize the launch experience and showcase your app's unique identity.
- UIKit apps: For UIKit apps, make your `UIDocumentViewController` subclass the root view controller and apply your customizations to its launch options.
- Create document action: In your view controller subclass, set the launch options' primary or secondary action to a create-document action. To handle the resulting requests for new documents, assign a delegate to the launch options' browser view controller.
- Template picker: Build a template picker with a custom design that suits your app. This lets users create new documents from predefined templates.
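The SwiftUI steps above can be sketched as follows. This is a minimal example, assuming a deployment target of iOS 18 and a hypothetical plain-text `StoryDocument` type; the app name, title strings, and background color are illustrative only. `DocumentGroupLaunchScene` and `NewDocumentButton` are the iOS 18 SwiftUI types for the launch experience, but check their exact initializers against Apple's documentation.

```swift
import SwiftUI
import UniformTypeIdentifiers

// Hypothetical plain-text document type, used only for illustration.
struct StoryDocument: FileDocument {
    static var readableContentTypes: [UTType] { [.plainText] }

    var text: String

    init(text: String = "") {
        self.text = text
    }

    // Decode the file's bytes as UTF-8 text.
    init(configuration: ReadConfiguration) throws {
        guard let data = configuration.file.regularFileContents,
              let string = String(data: data, encoding: .utf8) else {
            throw CocoaError(.fileReadCorruptFile)
        }
        text = string
    }

    // Encode the text back to UTF-8 when saving.
    func fileWrapper(configuration: WriteConfiguration) throws -> FileWrapper {
        FileWrapper(regularFileWithContents: Data(text.utf8))
    }
}

// In a real app target, mark this type with @main.
struct StoryApp: App {
    var body: some Scene {
        // The document group still opens and edits documents.
        DocumentGroup(newDocument: StoryDocument()) { file in
            TextEditor(text: file.$document.text)
        }

        // iOS 18: customizes the screen shown before any document is open.
        DocumentGroupLaunchScene("Write a Story") {
            NewDocumentButton("Start Writing")
        } background: {
            Color.orange.ignoresSafeArea()
        }
    }
}
```

The launch scene sits alongside the existing `DocumentGroup` rather than replacing it, so document opening and editing keep working as before.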
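For the UIKit path, a rough sketch of a `UIDocumentViewController` subclass with a create-document action might look like this. It assumes iOS 18; `StoryTextDocument`, the custom `.template` creation intent, the template text, and the `Untitled.txt` file name are hypothetical. The Swift spellings `LaunchOptions.createDocumentAction(with:)` and `activeDocumentCreationIntent` are written from memory of the iOS 18 SDK and should be verified against Apple's documentation before use.

```swift
import UIKit

// Hypothetical custom intent for "create from template"; not a system value.
extension UIDocument.CreationIntent {
    static let template = UIDocument.CreationIntent("template")
}

// Hypothetical UIDocument subclass that stores plain text.
final class StoryTextDocument: UIDocument {
    var text = ""

    override func contents(forType typeName: String) throws -> Any {
        Data(text.utf8)
    }

    override func load(fromContents contents: Any, ofType typeName: String?) throws {
        guard let data = contents as? Data else { return }
        text = String(decoding: data, as: UTF8.self)
    }
}

// Use an instance of this class as the window's root view controller.
final class StoryDocumentViewController: UIDocumentViewController {
    override func viewDidLoad() {
        super.viewDidLoad()
        // Primary button: blank document. Secondary button: start from a template.
        launchOptions.primaryAction = LaunchOptions.createDocumentAction(with: .default)
        launchOptions.secondaryAction = LaunchOptions.createDocumentAction(with: .template)
        // Receive new-document requests from the launch browser.
        launchOptions.browserViewController.delegate = self
    }
}

extension StoryDocumentViewController: UIDocumentBrowserViewControllerDelegate {
    func documentBrowser(
        _ controller: UIDocumentBrowserViewController,
        didRequestDocumentCreationWithHandler importHandler: @escaping (URL?, UIDocumentBrowserViewController.ImportMode) -> Void
    ) {
        // Create the new file in a temporary location; the browser moves it.
        let url = FileManager.default.temporaryDirectory
            .appendingPathComponent("Untitled.txt")
        try? FileManager.default.removeItem(at: url)

        let document = StoryTextDocument(fileURL: url)
        if controller.activeDocumentCreationIntent == .template {
            document.text = "Chapter 1\n" // hypothetical template contents
        }
        document.save(to: url, for: .forCreating) { success in
            importHandler(success ? url : nil, .move)
        }
    }
}
```

Checking the creation intent inside the delegate is what lets one delegate method serve both the blank-document and template buttons; a real template picker would present its own UI at that point before calling `importHandler`.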

For more detail, see the session "Evolve your document launch experience" (08:31), which walks through these steps.

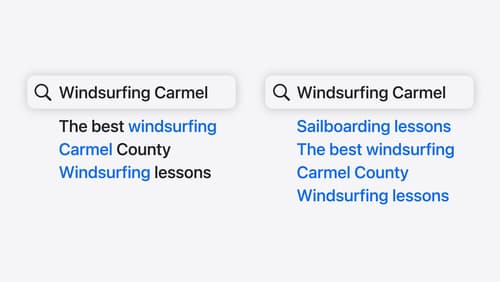

Support semantic search with Core Spotlight

Learn how to provide semantic search results in your app using Core Spotlight. Understand how to make your app’s content available in the user’s private, on-device index so people can search for items using natural language. We’ll also share how to optimize your app’s performance by scheduling indexing activities. To get the most out of this session, we recommend first checking out the Core Spotlight documentation on the Apple Developer website.

Discover area mode for Object Capture

Discover how area mode for Object Capture enables new 3D capture possibilities on iOS by extending the functionality of Object Capture to support capture and reconstruction of an area. Learn how to optimize the quality of iOS captures using the new macOS sample app for reconstruction, and find out how to view the final results with Quick Look on Apple Vision Pro, iPhone, iPad or Mac. Learn about improvements to 3D reconstruction, including a new API that allows you to create your own custom image processing pipelines.

Explore object tracking for visionOS

Find out how you can use object tracking to turn real-world objects into virtual anchors in your visionOS app. Learn how you can build spatial experiences with object tracking from start to finish. Find out how to create a reference object using machine learning in Create ML and attach content relative to your target object in Reality Composer Pro, RealityKit or ARKit APIs.