state property wrapper combine

Asked on 2024-10-24

It seems like your question is off-topic. However, I can provide information about the content presented by Apple at WWDC. For example, at WWDC 2024, there were sessions on customizing spatial Persona templates in SharePlay, enhancing media viewing in custom environments, and using the RealityKit debugger. If you have any specific questions about these topics or other sessions from WWDC, feel free to ask!

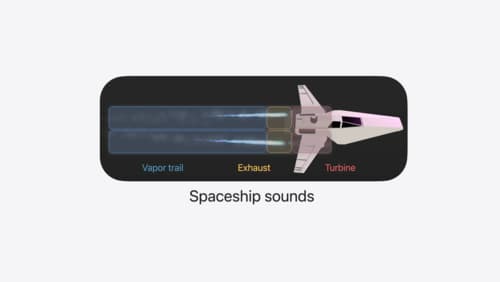

Enhance your spatial computing app with RealityKit audio

Elevate your spatial computing experience using RealityKit audio. Discover how spatial audio can make your 3D immersive experiences come to life. Learn how RealityKit audio APIs, from ambient audio and reverb to real-time procedural audio that adds character to your 3D content, can help make your app more engaging.

Enhance the immersion of media viewing in custom environments

Extend your media viewing experience using Reality Composer Pro components like Docking Region, Reverb, and Virtual Environment Probe. Find out how to further enhance immersion using Reflections, Tint Surroundings Effect, SharePlay, and the Immersive Environment Picker.

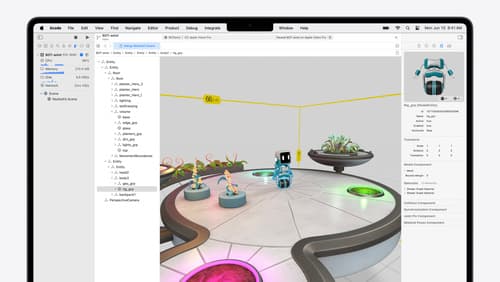

Break into the RealityKit debugger

Meet the RealityKit debugger and discover how this new tool lets you inspect the entity hierarchy of spatial apps, debug rogue transformations, find missing entities, and detect which parts of your code are causing problems for your systems.