How do I use ARKit in unreal engine?

Asked on 2024-11-14


The sessions referenced here don't specifically cover Unreal Engine, so they can't tell you exactly how ARKit is exposed inside it. They do show how ARKit is used alongside Apple's own tools, such as RealityKit and Reality Composer Pro, to build spatial computing experiences on visionOS.

For example, the session "Render Metal with passthrough in visionOS" uses ARKit for world and hand tracking, which an app can combine with passthrough to provide a mixed immersive experience. Similarly, "Explore object tracking for visionOS" covers the ARKit APIs for tracking real-world objects, which can be useful for building interactive visualizations.

If you are interested in learning more about ARKit and its capabilities, you might want to explore the session "Create enhanced spatial computing experiences with ARKit," which covers various features like room tracking, plane detection, and hand tracking.

For more specific guidance on using ARKit with Unreal Engine, you might need to refer to Unreal Engine's documentation or community resources, as the context provided here focuses on Apple's tools and platforms.

Create enhanced spatial computing experiences with ARKit

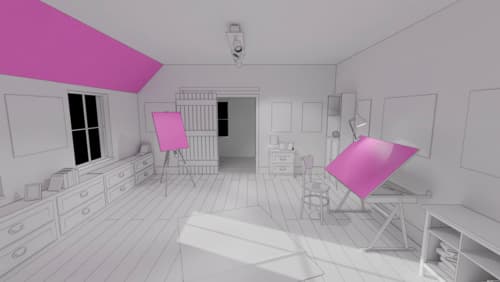

Learn how to create captivating immersive experiences with ARKit's latest features. Explore ways to use room tracking and object tracking to further engage with your surroundings. We'll also share how your app can react to changes in your environment's lighting on visionOS. Discover improvements in hand tracking and plane detection that can make your spatial experiences more intuitive.

What’s new in USD and MaterialX

Explore updates to Universal Scene Description and MaterialX support on Apple platforms. Discover how these technologies provide a foundation for 3D content creation and delivery, and learn how they can help streamline your workflows for creating great spatial experiences. Learn about USD and MaterialX support in RealityKit and Storm, advancements in our system-provided tooling, and more.

Bring your iOS or iPadOS game to visionOS

Discover how to transform your iOS or iPadOS game into a uniquely visionOS experience. Increase the immersion (and fun factor!) with a 3D frame or an immersive background. And invite players further into your world by adding depth to the window with stereoscopy or head tracking.