How do I load a USDZ file into a RealityKit scene?

Asked on 2025-01-25


To load a USDZ file into a RealityKit scene, you can use the RealityKit API, which supports loading USD files. Here's a general approach:

- Import the USDZ file: Add the USDZ file to your Xcode project, for example by dragging it into the project navigator, and make sure it is included in your app target so that it is copied into the app bundle.

- Load the USDZ file in RealityKit: Use the `Entity.loadModel(named:)` method to load the USDZ file from the bundle. This method loads the model synchronously, blocking the calling thread until it finishes, and returns a `ModelEntity` or throws an error if loading fails. RealityKit also provides asynchronous alternatives for loading large models without blocking.

- Add the model to your scene: Once the model is loaded, attach it to an `AnchorEntity` and add that anchor to the scene. In a `RealityView`, you can instead add the entity directly to the view's content.

Here's a simple code example:

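```swift
import RealityKit

do {
    // Load the USDZ model from the app bundle (synchronous; throws on failure)
    let modelEntity = try Entity.loadModel(named: "YourModelName")

    // Create an anchor entity
    let anchorEntity = AnchorEntity()

    // Add the model entity to the anchor
    anchorEntity.addChild(modelEntity)

    // Add the anchor to the scene (arView is your app's existing ARView)
    arView.scene.addAnchor(anchorEntity)
} catch {
    // Avoid try!: a missing or invalid file would otherwise crash the app
    print("Failed to load model: \(error)")
}
```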
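If blocking the calling thread is a concern, the same steps can be sketched with Swift concurrency. This is a minimal sketch, not the only way to do it: it assumes your deployment target supports RealityKit's async `ModelEntity(named:)` initializer, and the helper names `loadUSDZModel(named:)` and `placeModel(named:in:)` are hypothetical, introduced here only for illustration.

```swift
import RealityKit

/// Hypothetical helper: loads a model from the app bundle without blocking,
/// returning nil (and logging the error) if the file is missing or invalid.
@MainActor
func loadUSDZModel(named name: String) async -> ModelEntity? {
    do {
        // Assumes the async initializer is available on your deployment target.
        return try await ModelEntity(named: name)
    } catch {
        print("Could not load \(name): \(error)")
        return nil
    }
}

/// Hypothetical helper: anchors the loaded model in an existing ARView.
@MainActor
func placeModel(named name: String, in arView: ARView) async {
    guard let model = await loadUSDZModel(named: name) else { return }
    let anchor = AnchorEntity()
    anchor.addChild(model)
    arView.scene.addAnchor(anchor)
}
```

You might call it from a view controller or SwiftUI view with `Task { await placeModel(named: "YourModelName", in: arView) }`. Returning an optional keeps the call site simple; rethrowing the error would be a reasonable alternative if the caller needs to show a failure message.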

For more detail, see the WWDC 2024 session "What’s new in USD and MaterialX" (around 02:31), which discusses USD support in RealityKit.
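On platforms where you use SwiftUI's `RealityView` rather than an `ARView` (visionOS, for example), the loaded entity can be added straight to the view's content. The following is a rough sketch under that assumption; the view name `USDZModelView` and its `modelName` property are hypothetical, and it assumes a deployment target where `RealityView` and the async `Entity(named:)` initializer are available.

```swift
import SwiftUI
import RealityKit

/// Hypothetical SwiftUI view that displays a USDZ model from the app bundle.
struct USDZModelView: View {
    /// Name of the USDZ resource in the app bundle.
    let modelName: String

    var body: some View {
        RealityView { content in
            do {
                // Load asynchronously inside the RealityView's make closure.
                let model = try await Entity(named: modelName)
                // No explicit anchor is needed; add the entity to the content.
                content.add(model)
            } catch {
                print("Failed to load \(modelName): \(error)")
            }
        }
    }
}
```

You would then place `USDZModelView(modelName: "YourModelName")` in a window, volume, or immersive space like any other SwiftUI view.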

Optimize for the spatial web

Discover how to make the most of visionOS capabilities on the web. Explore recent updates like improvements to selection highlighting, and the ability to present spatial photos and panorama images in fullscreen. Learn to take advantage of existing web standards for dictation and text-to-speech with WebSpeech, spatial soundscapes with WebAudio, and immersive experiences with WebXR.

Discover RealityKit APIs for iOS, macOS and visionOS

Learn how new cross-platform APIs in RealityKit can help you build immersive apps for iOS, macOS, and visionOS. Check out the new hover effects, lights and shadows, and portal crossing features, and view them in action through real examples.

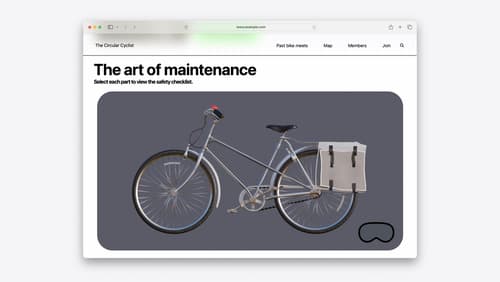

Explore object tracking for visionOS

Find out how you can use object tracking to turn real-world objects into virtual anchors in your visionOS app. Learn how you can build spatial experiences with object tracking from start to finish. Find out how to create a reference object using machine learning in Create ML and attach content relative to your target object in Reality Composer Pro, RealityKit or ARKit APIs.