What's new in Vision

Asked on 2025-01-30

At WWDC 2024, Apple introduced several enhancements to the Vision framework, particularly focusing on Swift integration. Here are the key updates:

- New Swift API: The Vision framework now includes a new API with streamlined syntax designed for Swift, making it easier to integrate computer vision capabilities into apps. It fully supports Swift concurrency and Swift 6, which helps developers write more performant applications.
- Async/Await Syntax: The new API replaces completion handlers with async/await syntax, simplifying the handling of asynchronous operations.
- Enhanced Capabilities: Vision can detect faces, recognize text in 18 languages, track body poses, and even track hand poses for new interaction methods. These capabilities are now more accessible through the new Swift API.
- Updating Existing Apps: Developers can update their existing Vision applications by adopting the new request and observation types, whose names drop the "VN" prefix used by the older Vision types.
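As a rough illustration of the points above, here is a minimal sketch of text recognition with the new API. It assumes iOS 18 or macOS 15 and uses `RecognizeTextRequest`, the renamed counterpart of `VNRecognizeTextRequest`. The helper name `recognizeText(in:)` is hypothetical and chosen only for this example.

```swift
import Foundation
import Vision

// Hypothetical helper (not part of Vision): returns the best text candidate
// for each text region Vision finds in the image at `imageURL`.
@available(iOS 18.0, macOS 15.0, *)
func recognizeText(in imageURL: URL) async throws -> [String] {
    // New-style request: same role as VNRecognizeTextRequest, minus the "VN"
    // prefix and without a completion handler.
    let request = RecognizeTextRequest()

    // perform(on:) suspends until Vision finishes and returns typed
    // observations directly, so no casting of results is needed.
    let observations = try await request.perform(on: imageURL)

    // Keep the top candidate string for each observation, skipping any
    // observation that has no candidates.
    return observations.compactMap { $0.topCandidates(1).first?.string }
}
```

From an asynchronous context this could be called as `let lines = try await recognizeText(in: url)`. With the older API, the equivalent code would create a `VNRecognizeTextRequest` with a completion handler and run it through a `VNImageRequestHandler`.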

For more detail, see the session "Discover Swift enhancements in the Vision framework" (01:09).

Discover Swift enhancements in the Vision framework

The Vision Framework API has been redesigned to leverage modern Swift features like concurrency, making it easier and faster to integrate a wide array of Vision algorithms into your app. We’ll tour the updated API and share sample code, along with best practices, to help you get the benefits of this framework with less coding effort. We’ll also demonstrate two new features: image aesthetics and holistic body pose.

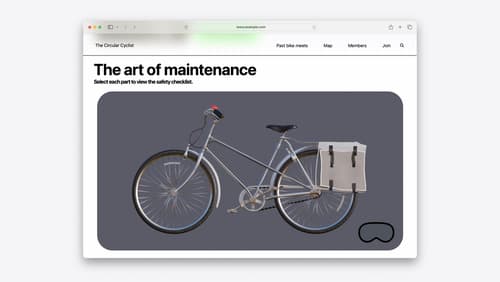

Optimize for the spatial web

Discover how to make the most of visionOS capabilities on the web. Explore recent updates like improvements to selection highlighting, and the ability to present spatial photos and panorama images in fullscreen. Learn to take advantage of existing web standards for dictation and text-to-speech with WebSpeech, spatial soundscapes with WebAudio, and immersive experiences with WebXR.

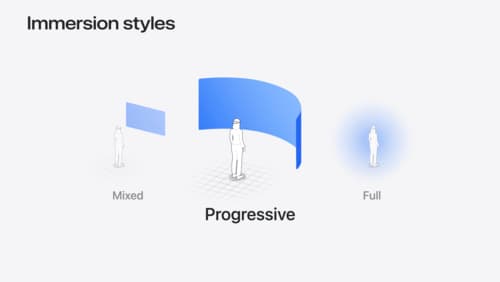

Dive deep into volumes and immersive spaces

Discover powerful new ways to customize volumes and immersive spaces in visionOS. Learn to fine-tune how volumes resize and respond to people moving around them. Make volumes and immersive spaces interact through the power of coordinate conversions. Find out how to make your app react when people adjust immersion with the Digital Crown, and use a surrounding effect to dynamically customize the passthrough tint in your immersive space experience.