What are some of the latest ML features?

Asked on 2025-02-10


Apple introduced several new machine learning features at WWDC 2024, focused on performance and integration across its platforms. Here are some of the highlights:

- Core ML Enhancements: Core ML now supports new features to help run state-of-the-art generative AI models on device. These include the MLTensor type, which simplifies computation on multidimensional arrays, and the ability to manage key-value (KV) caches for efficient decoding of large language models. Additionally, multifunction models let a single deployed model expose several functions (Deploy machine learning and AI models on-device with Core ML).

- Transformer Model Optimization: Metal Performance Shaders Graph adds features for transformer models, which are widely used in language processing tasks. The improvements target compute performance, memory bandwidth savings, and output quality (Accelerate machine learning with Metal).

- Create ML Updates: The Create ML app now includes an object tracking template for training reference objects to anchor spatial experiences on visionOS. It also offers new time-series classification and forecasting components (Explore machine learning on Apple platforms).

- Quantization and Performance Tools: Core ML adds four-bit quantization and a stateful KV cache, which improve model execution speed and memory efficiency. These tools are particularly useful for running large models such as the 7B-parameter Mistral model (Platforms State of the Union).
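
To make the four-bit quantization idea concrete, here is a minimal conceptual sketch in plain Python. It is not the Core ML or coremltools API: the function names, the block size, and the symmetric per-block scaling scheme are all assumptions chosen for illustration. Each block of weights is stored as signed 4-bit integer codes plus one floating-point scale.

```python
# Conceptual sketch only: plain Python, not the Core ML or coremltools API.
# quantize_4bit, dequantize_4bit, and block_size are hypothetical names.

def quantize_4bit(weights, block_size=4):
    """Quantize a flat list of floats to signed 4-bit codes, one scale per block."""
    codes, scales = [], []
    for start in range(0, len(weights), block_size):
        block = weights[start:start + block_size]
        max_abs = max(abs(w) for w in block)
        # Map the largest magnitude in the block to 7, the top of the
        # signed 4-bit range [-8, 7]. An all-zero block gets a dummy scale.
        scale = max_abs / 7 if max_abs > 0 else 1.0
        scales.append(scale)
        codes.extend(max(-8, min(7, round(w / scale))) for w in block)
    return codes, scales


def dequantize_4bit(codes, scales, block_size=4):
    """Recover approximate floats from 4-bit codes and per-block scales."""
    return [code * scales[i // block_size] for i, code in enumerate(codes)]


weights = [0.12, -0.50, 0.33, 0.07, 1.90, -2.10, 0.40, 0.00]
codes, scales = quantize_4bit(weights)
restored = dequantize_4bit(codes, scales)

# The rounding error of each weight is at most half of its block's scale.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes)
print(max_error)
```

Storing a 4-bit code instead of a 16-bit float cuts weight storage to roughly a quarter, plus a small overhead for the scales. The cost is the bounded rounding error computed above.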

Together, these updates make it easier for developers to integrate and optimize machine learning models on Apple devices, using Apple silicon and the latest software tools.
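
The stateful KV cache mentioned in the Core ML items can be pictured with a toy single-head attention decoder. This is a hedged sketch in plain Python, not Core ML code: `KVCache`, `attend`, and `decode_step` are hypothetical names, and the token vector doubles as query, key, and value because the sketch has no learned projections. The point is only that keys and values from earlier steps are kept as state and reused rather than recomputed.

```python
import math


class KVCache:
    """Holds keys and values from earlier decoding steps (the state between calls)."""

    def __init__(self):
        self.keys = []
        self.values = []

    def append(self, key, value):
        self.keys.append(key)
        self.values.append(value)


def attend(query, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    dim = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in keys]
    peak = max(scores)  # subtract the max for a numerically stable softmax
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [
        sum(e / total * value[i] for e, value in zip(exps, values))
        for i in range(len(values[0]))
    ]


def decode_step(token_vec, cache):
    """Process one new token: store its key and value, then attend over the cache."""
    # Toy assumption: the token vector serves as query, key, and value.
    cache.append(token_vec, token_vec)
    return attend(token_vec, cache.keys, cache.values)


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
cache = KVCache()
outputs = [decode_step(t, cache) for t in tokens]

# Without a cache, the last step would rebuild keys and values for every earlier token.
recomputed = attend(tokens[-1], tokens, tokens)
```

Each `decode_step` call does work proportional to the current sequence length, while the uncached path redoes the key and value computation for the whole prefix on every step. In a real model those keys and values come from learned projections, which is what makes recomputing them expensive.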

What’s new in Create ML

Explore updates to Create ML, including interactive data source previews and a new template for building object tracking models for visionOS apps. We’ll also cover important framework improvements, including new time-series forecasting and classification APIs.

Deploy machine learning and AI models on-device with Core ML

Learn new ways to optimize speed and memory performance when you convert and run machine learning and AI models through Core ML. We’ll cover new options for model representations, performance insights, execution, and model stitching which can be used together to create compelling and private on-device experiences.

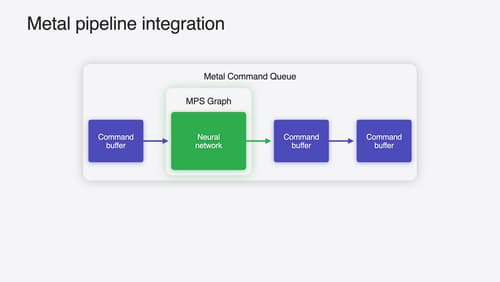

Accelerate machine learning with Metal

Learn how to accelerate your machine learning transformer models with new features in Metal Performance Shaders Graph. We’ll also cover how to improve your model’s compute bandwidth and quality, and visualize it in the all new MPSGraph viewer.