image io

Asked on 2025-03-05


The WWDC 2024 session "Use HDR for dynamic image experiences in your app" covers working with HDR images, including through the Image I/O API. It walks through reading, editing, displaying, and writing HDR images, and introduces new APIs and options for adaptive HDR files, such as the `expandToHDR` option for `CIImage` and the `kCGImageSourceDecodeToHDR` option in Image I/O.
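As a rough illustration of the two options named above, the following Swift sketch requests HDR decoding through Image I/O and through Core Image. The helper names `decodeHDRImage(at:)` and `loadHDRCIImage(at:)` are hypothetical, and the availability annotations are a conservative assumption rather than a statement of the exact OS versions. Treat it as a minimal sketch, not the session's own sample code.

```swift
import Foundation
import ImageIO
import CoreImage

/// Hypothetical helper: decodes the first image in a file and asks
/// Image I/O for an HDR result instead of the default SDR rendition.
/// The availability below is a conservative assumption.
@available(iOS 18.0, macOS 15.0, *)
func decodeHDRImage(at url: URL) -> CGImage? {
    // Create an image source for the file; nil if it cannot be opened.
    guard let source = CGImageSourceCreateWithURL(url as CFURL, nil) else {
        return nil
    }
    // Ask the decoder for HDR output via the decode-request option.
    let options = [kCGImageSourceDecodeRequest: kCGImageSourceDecodeToHDR] as CFDictionary
    return CGImageSourceCreateImageAtIndex(source, 0, options)
}

/// Hypothetical helper: loads a CIImage with the expand-to-HDR option,
/// so that a gain map stored in the file, if present, is applied on load.
@available(iOS 18.0, macOS 15.0, *)
func loadHDRCIImage(at url: URL) -> CIImage? {
    return CIImage(contentsOf: url, options: [.expandToHDR: true])
}
```

Both helpers return `nil` when the file cannot be read, so a caller can fall back to a standard SDR decode in that case.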

For more detail on using Image I/O with HDR images, see the session chapter "Read HDR images".

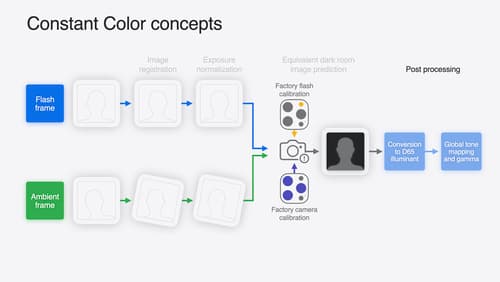

Keep colors consistent across captures

Meet the Constant Color API and find out how it can help people use your app to determine precise colors. You’ll learn how to adopt the API, explore its scientific and marketing potential, and discover best practices for making the most of the technology.

What’s new in AppKit

Discover the latest advances in Mac app development. Get an overview of the new features in macOS Sequoia, and how to adopt them in your app. Explore new ways to integrate your existing code with SwiftUI. Learn about the improvements made to numerous AppKit controls, like toolbars, menus, text input, and more.

Use HDR for dynamic image experiences in your app

Discover how to read and write HDR images and process HDR content in your app. Explore the new supported HDR image formats and advanced methods for displaying HDR images. Find out how HDR content can coexist with your user interface — and what to watch out for when adding HDR image support to your app.