Image IO image processing

Asked on 2025-03-05


It seems you're interested in image processing, specifically related to Image IO and HDR. At WWDC 2024, Apple presented a session titled "Use HDR for dynamic image experiences in your app," which covers various aspects of handling HDR images, including reading, editing, displaying, and saving them.

In this session, the presenters discussed how to use Image IO to save an SDR CGImage together with gain map data. The process involves calling `CGImageDestinationAddImage` with the SDR CGImage and creating a dictionary that describes the gain map. This is part of a broader discussion of adaptive HDR files, which can be loaded as either SDR or HDR images depending on the desired fidelity and compatibility.
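The save path described above can be sketched roughly as follows. This is a minimal sketch, not the session's own code: the function name `writeAdaptiveHDR` and its parameters are hypothetical, the gain map dictionary is assumed to be prepared elsewhere (for example, copied from an existing file with `CGImageSourceCopyAuxiliaryDataInfoAtIndex`), and attaching it with `CGImageDestinationAddAuxiliaryDataInfo` under the `kCGImageAuxiliaryDataTypeISOGainMap` type is an assumption that needs the iOS 18 / macOS 15 SDK or later.

```swift
import Foundation
import CoreGraphics
import ImageIO
import UniformTypeIdentifiers

/// Writes an SDR image plus a gain map into a single HEIC file.
/// Hypothetical helper: `gainMapInfo` is assumed to be an auxiliary-data
/// dictionary (data, data description, metadata) built by the caller.
func writeAdaptiveHDR(sdrImage: CGImage,
                      gainMapInfo: CFDictionary,
                      to url: URL) -> Bool {
    // Create a destination that will hold exactly one image.
    guard let destination = CGImageDestinationCreateWithURL(
        url as CFURL,
        UTType.heic.identifier as CFString,
        1,
        nil
    ) else {
        return false
    }

    // Add the SDR base image first.
    CGImageDestinationAddImage(destination, sdrImage, nil)

    // Attach the gain map as auxiliary data for the image just added.
    // Assumption: the ISO gain map type is the one wanted here.
    CGImageDestinationAddAuxiliaryDataInfo(
        destination,
        kCGImageAuxiliaryDataTypeISOGainMap,
        gainMapInfo
    )

    // Finalize writes the file; it returns false on failure.
    return CGImageDestinationFinalize(destination)
}
```

A file written this way stays readable as a plain SDR image by decoders that ignore the auxiliary data, which is the compatibility benefit the answer mentions for adaptive HDR files.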

For more detail, see the session "Use HDR for dynamic image experiences in your app": reading HDR images is discussed at 18:24, and saving images at 31:37.

Bring expression to your app with Genmoji

Discover how to bring Genmoji to life in your app. We’ll go over how to render, store, and communicate text that includes Genmoji. If your app features a custom text engine, we’ll also cover techniques for adding support for Genmoji.

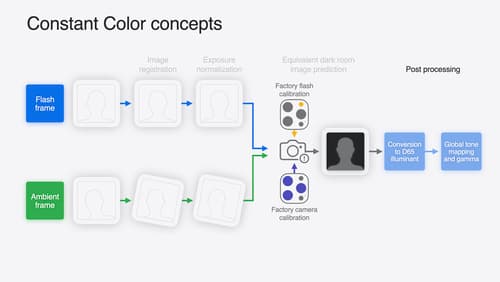

Keep colors consistent across captures

Meet the Constant Color API and find out how it can help people use your app to determine precise colors. You’ll learn how to adopt the API, explore its scientific and marketing potential, and discover best practices for making the most of the technology.

Use HDR for dynamic image experiences in your app

Discover how to read and write HDR images and process HDR content in your app. Explore the new supported HDR image formats and advanced methods for displaying HDR images. Find out how HDR content can coexist with your user interface — and what to watch out for when adding HDR image support to your app.