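How can I use Core ML to recognize a flight itinerary text and extract the flight number, departure location, arrival location, date, and so on into JSON? For example: “乘坐ZH1998 深圳保安T3-北京首都T3 当地时间07:55-10:55”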

Asked on 2025-03-07

To extract fields such as the flight number, departure location, arrival location, and times from a text like "乘坐ZH1998 深圳保安T3-北京首都T3 当地时间07:55-10:55" with a Core ML model, and then convert the result into JSON, you can follow these general steps:

- Model Selection and Training: Use Create ML to train a custom natural language processing (NLP) model, for example a word tagger that labels each token as a flight number, departure, arrival, or time. Start by preparing a dataset of labeled flight itinerary texts. Create ML trains on your own data, which suits this task.

- Model Deployment: Once trained, export the model in Core ML format and integrate it into your iOS app. Core ML optimizes model execution on Apple devices, using the CPU, GPU, and Neural Engine.

- Text Processing: If the input is an image, use the Vision framework to recognize the text first. If the input is already text, pass it directly to the NLP model to identify entities such as the flight number and the departure and arrival locations.

- JSON Conversion: After extracting the fields, format them as a JSON structure. For example:

{ "flight_number": "ZH1998", "departure": "深圳保安T3", "arrival": "北京首都T3", "departure_time": "07:55", "arrival_time": "10:55" }
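
The JSON conversion step can be sketched in Swift using only Foundation. This is a minimal sketch, not a definitive implementation: it assumes the trained model yields (token, label) pairs, and the label names (`FLIGHT_NO`, `DEPARTURE`, and so on), the `FlightLabel` and `FlightInfo` types, and the `assembleFlightInfo` and `encodeJSON` helpers are all hypothetical names chosen for illustration. The tagged tokens are hard-coded here in place of real model output.

```swift
import Foundation

// Hypothetical labels a custom word tagger might emit (assumed names).
enum FlightLabel: String {
    case flightNumber = "FLIGHT_NO"
    case departure = "DEPARTURE"
    case arrival = "ARRIVAL"
    case departureTime = "DEP_TIME"
    case arrivalTime = "ARR_TIME"
    case other = "NONE"
}

// Mirrors the keys of the JSON structure shown above.
struct FlightInfo: Codable, Equatable {
    var flightNumber: String?
    var departure: String?
    var arrival: String?
    var departureTime: String?
    var arrivalTime: String?

    enum CodingKeys: String, CodingKey {
        case flightNumber = "flight_number"
        case departure
        case arrival
        case departureTime = "departure_time"
        case arrivalTime = "arrival_time"
    }
}

// Concatenates tokens that share a label, in order, into one field value.
// Tokens labeled .other are ignored.
func assembleFlightInfo(from tagged: [(token: String, label: FlightLabel)]) -> FlightInfo {
    var fields: [FlightLabel: String] = [:]
    for (token, label) in tagged where label != .other {
        fields[label, default: ""] += token
    }
    return FlightInfo(
        flightNumber: fields[.flightNumber],
        departure: fields[.departure],
        arrival: fields[.arrival],
        departureTime: fields[.departureTime],
        arrivalTime: fields[.arrivalTime]
    )
}

// Encodes the struct as a JSON string with keys in a stable (sorted) order.
func encodeJSON(_ info: FlightInfo) throws -> String {
    let encoder = JSONEncoder()
    encoder.outputFormatting = [.sortedKeys]
    return String(decoding: try encoder.encode(info), as: UTF8.self)
}

// Stand-in for model output on the sample sentence (hard-coded assumption).
let tagged: [(token: String, label: FlightLabel)] = [
    ("乘坐", .other), ("ZH1998", .flightNumber),
    ("深圳保安", .departure), ("T3", .departure), ("-", .other),
    ("北京首都", .arrival), ("T3", .arrival), ("当地时间", .other),
    ("07:55", .departureTime), ("-", .other), ("10:55", .arrivalTime),
]

let info = assembleFlightInfo(from: tagged)
if let json = try? encodeJSON(info) {
    print(json)
}
```

Fields the model does not find stay `nil` and are omitted from the encoded JSON, so a partially recognized itinerary still produces valid output. Using `Codable` with explicit `CodingKeys` keeps the Swift property names idiomatic while matching the snake_case keys in the example.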

For more detailed guidance on using Core ML and Create ML, refer to the session "Explore machine learning on Apple platforms" (02:05), which covers deploying models on Apple devices and customizing them with your own data.

Bring your machine learning and AI models to Apple silicon

Learn how to optimize your machine learning and AI models to leverage the power of Apple silicon. Review model conversion workflows to prepare your models for on-device deployment. Understand model compression techniques that are compatible with Apple silicon, and at what stages in your model deployment workflow you can apply them. We’ll also explore the tradeoffs between storage size, latency, power usage and accuracy.

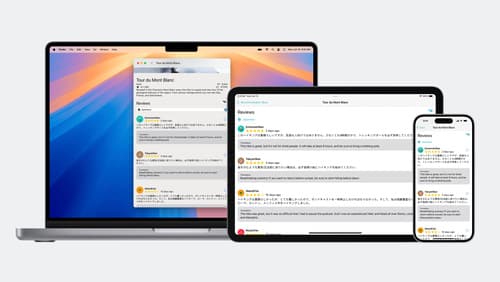

Meet the Translation API

Discover how you can translate text across different languages in your app using the new Translation framework. We’ll show you how to quickly display translations in the system UI, and how to translate larger batches of text for your app’s UI.

What’s new in Create ML

Explore updates to Create ML, including interactive data source previews and a new template for building object tracking models for visionOS apps. We’ll also cover important framework improvements, including new time-series forecasting and classification APIs.