How do I create an AR object?

Asked on 2025-03-10

To create an AR object, you can follow these steps based on the information from the WWDC sessions:

- 3D Model Creation: First, you need a 3D model of the object you want to track. The model should be in the USDZ format, which is suitable for AR applications. You can create it with tools like Reality Composer or find models online.

- Reference Object Creation: Use the Create ML app to generate a reference object from your 3D model. This involves training a machine learning model to recognize the object, and Create ML provides a spatial object tracking template for this purpose. Object tracking in an AR application requires this reference object.

- Object Tracking: Once you have your reference object, you can use ARKit to track it. Load the reference object into your ARKit session and configure an object tracking provider. This allows you to anchor virtual content to the real-world object.
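
The object tracking step above could be sketched in Swift roughly as follows. This is a minimal sketch, not a definitive implementation: it assumes a visionOS app using ARKit's `ARKitSession`, `ReferenceObject`, and `ObjectTrackingProvider`, and the function name `trackReferenceObject` and the file name `MyObject.referenceobject` are hypothetical placeholders for your own code and the file exported from Create ML.

```swift
import ARKit
import Foundation

/// Hypothetical helper: loads a bundled reference object and logs tracking updates.
func trackReferenceObject() async {
    // Object tracking is not available everywhere (for example, in the simulator).
    guard ObjectTrackingProvider.isSupported else {
        print("Object tracking is not supported on this device.")
        return
    }

    // Assumption: the reference object exported from Create ML was added to the
    // app bundle under the placeholder name "MyObject.referenceobject".
    guard let url = Bundle.main.url(forResource: "MyObject",
                                    withExtension: "referenceobject") else {
        print("Reference object file not found in the app bundle.")
        return
    }

    let session = ARKitSession()

    do {
        // Load the reference object and hand it to an object tracking provider.
        let referenceObject = try await ReferenceObject(from: url)
        let objectTracking = ObjectTrackingProvider(referenceObjects: [referenceObject])

        // Start the ARKit session with the provider.
        try await session.run([objectTracking])

        // React to anchors as the real-world object is found, moved, or lost.
        for await update in objectTracking.anchorUpdates {
            let anchor = update.anchor
            switch update.event {
            case .added, .updated:
                // originFromAnchorTransform is the object's pose in world space;
                // virtual content can be positioned with it.
                let transform = anchor.originFromAnchorTransform
                print("Anchor \(anchor.id) tracked: \(anchor.isTracked), position: \(transform.columns.3)")
            case .removed:
                print("Anchor \(anchor.id) removed")
            }
        }
    } catch {
        print("Object tracking failed: \(error)")
    }
}
```

You would typically call a function like this from a task started when your immersive space opens, and replace the `print` calls with code that moves a RealityKit entity to the anchor's transform.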

For more detailed guidance, refer to the session Explore object tracking for visionOS (05:07), which covers creating a reference object, and Create enhanced spatial computing experiences with ARKit (06:46), which discusses object tracking.

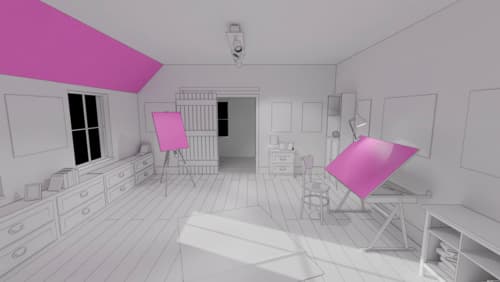

Render Metal with passthrough in visionOS

Get ready to extend your Metal experiences for visionOS. Learn best practices for integrating your rendered content with people’s physical environments with passthrough. Find out how to position rendered content to match the physical world, reduce latency with trackable anchor prediction, and more.

What’s new in Create ML

Explore updates to Create ML, including interactive data source previews and a new template for building object tracking models for visionOS apps. We’ll also cover important framework improvements, including new time-series forecasting and classification APIs.

Create enhanced spatial computing experiences with ARKit

Learn how to create captivating immersive experiences with ARKit’s latest features. Explore ways to use room tracking and object tracking to further engage with your surroundings. We’ll also share how your app can react to changes in your environment’s lighting on this platform. Discover improvements in hand tracking and plane detection which can make your spatial experiences more intuitive.