How do I use Mistral on device?

Asked on 2025-03-12

To use Mistral on an Apple device, you can follow the workflow for deploying machine learning models on Apple platforms. Here's a brief overview of the process:

- Model Architecture and Training: Start by defining the model architecture and training the model with the appropriate data. You can use training libraries such as PyTorch or TensorFlow, taking advantage of Apple silicon and the unified memory architecture on Mac.

- Convert to Core ML Format: Once the model is trained, convert it into the Core ML format for deployment. This step involves optimizing the model representation and parameters to ensure great performance while maintaining accuracy.

- Integration with Apple Frameworks: Finally, write code to integrate the model with Apple frameworks to load and execute it on the device. This involves using Core ML to efficiently deploy and run your machine learning models on-device.
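
To make the "optimizing the model representation and parameters" part of the conversion step more concrete, here is a minimal back-of-the-envelope sketch of how weight precision affects storage size. The parameter count of 7 billion is a round illustrative figure and the precision table is an assumption; neither comes from the session. The estimate covers weights only and ignores quantization metadata, activations, and any runtime caches.

```python
# Rough weight-storage estimate for a model at several precisions.
# PARAM_COUNT is a hypothetical round figure used for illustration only.
PARAM_COUNT = 7_000_000_000

# Bits used to store each weight at a given precision (illustrative set).
BITS_PER_WEIGHT = {"float32": 32, "float16": 16, "int8": 8, "int4": 4}


def weight_footprint_gb(param_count: int, bits_per_weight: int) -> float:
    """Return weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    if param_count < 0 or bits_per_weight <= 0:
        raise ValueError("param_count must be >= 0 and bits_per_weight > 0")
    total_bytes = param_count * bits_per_weight / 8
    return total_bytes / 1e9


def footprint_table(param_count: int) -> dict:
    """Map each precision name to its estimated weight size in GB."""
    return {
        name: weight_footprint_gb(param_count, bits)
        for name, bits in BITS_PER_WEIGHT.items()
    }


if __name__ == "__main__":
    for name, gigabytes in footprint_table(PARAM_COUNT).items():
        print(f"{name:>8}: {gigabytes:5.1f} GB")
```

Under these assumptions, halving the bits per weight halves the storage the weights need, which is why lower-precision representations are usually considered when targeting devices with limited memory. Whether accuracy holds up at a given precision has to be checked on the converted model itself.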

For more detail, see the session "Explore machine learning on Apple platforms" (07:16), which covers running models on device, including Mistral.

Explore object tracking for visionOS

Find out how you can use object tracking to turn real-world objects into virtual anchors in your visionOS app. Learn how you can build spatial experiences with object tracking from start to finish. Find out how to create a reference object using machine learning in Create ML and attach content relative to your target object in Reality Composer Pro, RealityKit or ARKit APIs.

Go small with Embedded Swift

Embedded Swift brings the safety and expressivity of Swift to constrained environments. Explore how Embedded Swift runs on a variety of microcontrollers through a demonstration using an off-the-shelf Matter device. Learn how the Embedded Swift subset packs the benefits of Swift into a tiny footprint with no runtime, and discover plenty of resources to start your own Embedded Swift adventure.

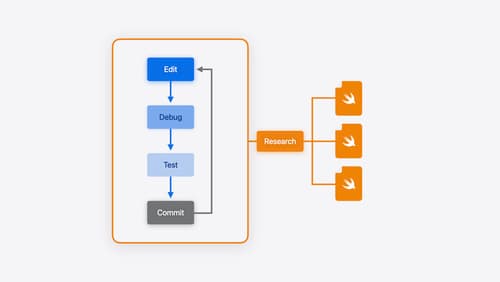

Xcode essentials

Edit, debug, commit, repeat. Explore the suite of tools in Xcode that help you iterate quickly when developing apps. Discover tips and tricks to help optimize and boost your development workflow.