How can I create a macOS app from a RealityKit project?

Asked on 2025-03-27


To create a macOS app from a RealityKit project, you can leverage the cross-platform capabilities of RealityKit, which allow you to build apps for iOS, macOS, and visionOS with minimal changes to your code. The following points and resources may help:

- Cross-Platform Capabilities: RealityKit aligns most of its features across macOS, iOS, and visionOS, so an app built with RealityKit can usually be deployed to each of these platforms with only minor adjustments. You can learn more about these capabilities in the session Discover RealityKit APIs for iOS, macOS and visionOS.

- RealityKit Framework: RealityKit is a powerful framework that provides high-performance 3D simulation and rendering capabilities. It simplifies the process of rendering 3D models and supports a variety of styles, making it suitable for creating immersive spatial computing apps and games. You can explore more about RealityKit's features in the session Platforms State of the Union.

- Sample Projects and Debugging: You can use sample projects provided in the sessions to understand how to structure your app. Additionally, the RealityKit debugger can help you troubleshoot and optimize your app. For more on debugging, see the session Break into the RealityKit debugger.

By following these resources and utilizing the cross-platform features of RealityKit, you can effectively create a macOS app from your RealityKit project.
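As a rough illustration of what "minimal changes" can look like, here is a minimal sketch of a SwiftUI app that shows RealityKit content through `RealityView`. It assumes a multiplatform SwiftUI target with the Mac destination added under the target's Supported Destinations in Xcode, and a deployment target where `RealityView` is available on the Mac (macOS 15 or later). The names `RealityKitMacApp` and `ContentView`, and the placeholder box, are hypothetical and stand in for your own project's types and content.

```swift
import SwiftUI
import RealityKit

// Hypothetical app entry point; replace with your project's own App type.
@main
struct RealityKitMacApp: App {
    var body: some Scene {
        WindowGroup {
            ContentView()
        }
    }
}

// Hypothetical view that hosts the RealityKit scene.
struct ContentView: View {
    var body: some View {
        RealityView { content in
            // Placeholder content: a 30 cm box with a simple non-metallic material.
            // In a real project you would add your own entities here instead.
            let box = ModelEntity(
                mesh: .generateBox(size: 0.3),
                materials: [SimpleMaterial(color: .systemBlue, isMetallic: false)]
            )
            content.add(box)
        }
        // Assumption: orbit-style camera controls are only wanted where the
        // scene is drawn with a virtual camera (macOS and iOS), so the
        // modifier is guarded with a platform check.
        #if os(macOS) || os(iOS)
        .realityViewCameraControls(.orbit)
        #endif
    }
}
```

The scene-building closure is shared by every platform. Only the platform-specific pieces, such as the camera controls above, sit behind `#if os(...)` checks. If your existing project uses AR-only features such as world tracking, those parts would likely need a similar guard or a Mac-specific alternative.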

Optimize your 3D assets for spatial computing

Dive into an end-to-end workflow for optimized 3D asset creation. Discover best practices for optimizing meshes, materials, and textures in your digital content creation tool. Learn how to harness shader graph, baking, and material instances to enhance your 3D scene while optimizing performance. Take advantage of native tools to work more effectively with your assets and improve your app’s performance.

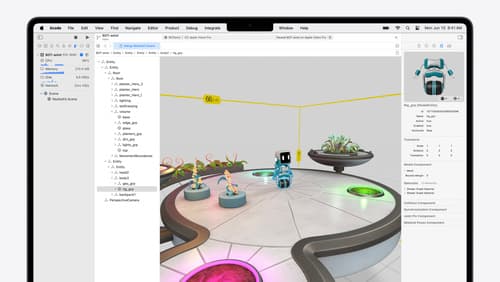

Break into the RealityKit debugger

Meet the RealityKit debugger and discover how this new tool lets you inspect the entity hierarchy of spatial apps, debug rogue transformations, find missing entities, and detect which parts of your code are causing problems for your systems.

Build a spatial drawing app with RealityKit

Harness the power of RealityKit through the process of building a spatial drawing app. As you create an eye-catching spatial experience that integrates RealityKit with ARKit and SwiftUI, you’ll explore how resources work in RealityKit and how to use features like low-level mesh and texture APIs to achieve fast updates of the users’ brush strokes.