How do I make two devices map to the same ARKit anchor points?

Asked on 2024-07-30

To make two devices map to the same ARKit anchor points, you can combine ARKit's world tracking with its anchor capabilities. The main steps are:

- World Tracking: Ensure both devices are using ARKit's world tracking to understand the environment. This is foundational for mapping anchor points accurately.
- Anchor Setup: Use ARKit to set up anchors in the environment. Anchors represent a position and orientation in three-dimensional space.
- Plane Detection: Utilize plane detection to identify surfaces in the environment. This can help in placing virtual content consistently across devices.
- Object Tracking: If you are tracking specific objects, ARKit can now track real-world objects that are statically placed in your environment. This can help in ensuring both devices recognize the same objects and their positions.
- Spatial Tracking Session: Use RealityKit's spatial tracking session to access and manage anchor entity transforms. This can help in synchronizing the anchor points across devices.

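Taken together, these steps give both devices a common physical reference (the same surfaces and tracked objects), which is what allows them to place content at matching anchor points. Keep in mind that each device reports anchor transforms in its own world coordinate space, so shared content should be positioned relative to an anchor that both devices recognize rather than relative to either device's world origin.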
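The idea of agreeing on positions through a commonly recognized anchor can be sketched as plain transform math. The example below is not ARKit API code: it is a minimal, language-agnostic illustration in Python, assuming both devices have detected the same physical anchor (for example, the same tracked object) and each knows that anchor's pose in its own world space. All function names (`map_pose_a_to_b`, `invert_rigid`, and so on) are hypothetical, and matrices are written row-major with the translation in the last column, which may differ from the layout your framework uses.

```python
import math

def matmul(a, b):
    """Multiply two 4x4 matrices stored as nested lists (row-major)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def invert_rigid(m):
    """Invert a rigid transform (rotation + translation, no scale).

    For such a matrix the inverse rotation is the transpose R^T and the
    inverse translation is -R^T * t.
    """
    rt = [[m[j][i] for j in range(3)] for i in range(3)]
    t = [m[i][3] for i in range(3)]
    inv_t = [-sum(rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [rt[0] + [inv_t[0]],
            rt[1] + [inv_t[1]],
            rt[2] + [inv_t[2]],
            [0.0, 0.0, 0.0, 1.0]]

def translation(x, y, z):
    """Build a pure translation transform."""
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]

def rotation_y(angle):
    """Build a rotation of `angle` radians about the Y (up) axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s, 0.0],
            [0.0, 1.0, 0.0, 0.0],
            [-s, 0.0, c, 0.0],
            [0.0, 0.0, 0.0, 1.0]]

def map_pose_a_to_b(anchor_in_a, anchor_in_b, pose_in_a):
    """Re-express a pose from device A's world space in device B's.

    The pose is first made relative to the shared anchor (using A's view
    of the anchor), then placed back into the world using B's view of it.
    """
    a_to_b = matmul(anchor_in_b, invert_rigid(anchor_in_a))
    return matmul(a_to_b, pose_in_a)

# Hypothetical measurements: each device sees the same physical anchor
# at a different pose because their world origins differ.
anchor_in_a = translation(1.0, 0.0, 0.0)
anchor_in_b = matmul(translation(0.0, 0.0, -2.0), rotation_y(math.pi / 2))

# Content that device A placed one meter from the anchor along its -Z axis.
content_in_a = translation(1.0, 0.0, -1.0)

content_in_b = map_pose_a_to_b(anchor_in_a, anchor_in_b, content_in_a)
position_in_b = [content_in_b[i][3] for i in range(3)]
# position_in_b is approximately (-1, 0, -2) in device B's world space.
```

In practice you would usually avoid the explicit conversion by parenting content to the shared anchor and exchanging only anchor-relative poses, but the matrix form makes clear why both devices must recognize the same anchor before their positions can agree.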

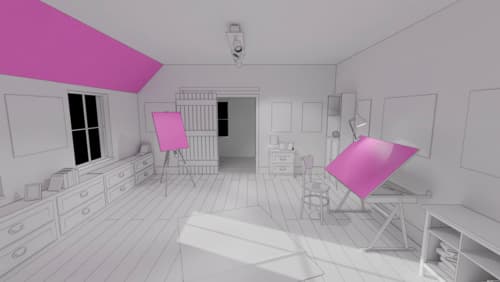

Create enhanced spatial computing experiences with ARKit

Learn how to create captivating immersive experiences with ARKit’s latest features. Explore ways to use room tracking and object tracking to further engage with your surroundings. We’ll also share how your app can react to changes in your environment’s lighting on this platform. Discover improvements in hand tracking and plane detection which can make your spatial experiences more intuitive.

Explore object tracking for visionOS

Find out how you can use object tracking to turn real-world objects into virtual anchors in your visionOS app. Learn how you can build spatial experiences with object tracking from start to finish. Find out how to create a reference object using machine learning in Create ML and attach content relative to your target object in Reality Composer Pro, RealityKit or ARKit APIs.

Render Metal with passthrough in visionOS

Get ready to extend your Metal experiences for visionOS. Learn best practices for integrating your rendered content with people’s physical environments with passthrough. Find out how to position rendered content to match the physical world, reduce latency with trackable anchor prediction, and more.