visionOS new features

Asked on 2024-07-30

Apple introduced several new features for visionOS at WWDC 2024. Here are some of the highlights:

Scene Understanding Enhancements:

- Plane Detection: Now supports planes in all orientations, so objects can be anchored to a wider range of surfaces.

- Room Anchors: These consider the user's surroundings on a per-room basis and can detect movement across rooms.

- Object Tracking API: This new API allows attaching content to individual objects around the user, enabling new dimensions of interactivity. For example, you can attach virtual instructions to physical objects.
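As a rough illustration of the plane detection point above, here is a minimal sketch of requesting planes in every orientation from ARKit on visionOS. The function name `logDetectedPlanes` is hypothetical, and the sketch assumes visionOS 2 (for the `.slanted` alignment), an open immersive space, and world-sensing permission already granted. It is not taken from the sessions themselves.

```swift
import ARKit

/// Hypothetical helper: logs every plane ARKit reports, whatever its orientation.
/// Assumes it is called while an immersive space is open.
func logDetectedPlanes() async {
    // Data providers are not available everywhere (for example, in the simulator).
    guard PlaneDetectionProvider.isSupported else {
        print("Plane detection is not supported in this environment.")
        return
    }

    let session = ARKitSession()
    // Assumption: .slanted is available (visionOS 2); .horizontal and .vertical predate it.
    let planeDetection = PlaneDetectionProvider(alignments: [.horizontal, .vertical, .slanted])

    do {
        try await session.run([planeDetection])
    } catch {
        print("Could not start the ARKit session: \(error)")
        return
    }

    // The sequence yields an update each time a plane is added, refined, or removed.
    for await update in planeDetection.anchorUpdates {
        let plane = update.anchor
        switch update.event {
        case .added, .updated:
            print("Plane \(plane.id): \(plane.alignment), \(plane.classification)")
        case .removed:
            print("Plane \(plane.id) removed")
        }
    }
}
```

In a real app you would typically start this from a `.task` modifier on the immersive space's content and attach RealityKit entities to the anchors rather than printing them.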

Hand Interaction:

- Developers can now decide if the user's hands appear in front of or behind the content, providing more creative control over app experiences.

HealthKit Integration:

- visionOS apps can offer immersive spaces where users reflect on their activities and save their experiences to HealthKit. Separately, "Guest User" mode lets others try out Apple Vision Pro while preserving the owner's data and privacy.

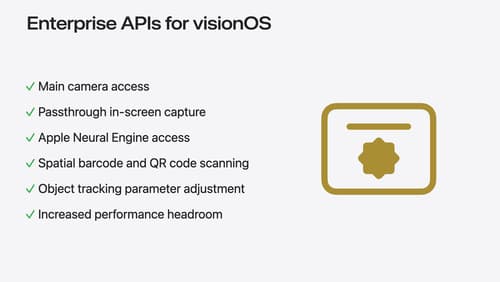

Enterprise APIs:

- Enhanced known object tracking through parameter adjustment, allowing for tuning and optimizing object tracking to suit specific use cases.

ARKit Updates:

- Room Tracking: Customizes experiences based on the room.

- Plane Detection: Improved to support more complex environments.

- Hand Tracking: Used in apps like Blackbox and Super Fruit Ninja for interactive experiences.
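To make the room tracking item more concrete, the following is a minimal sketch of reacting when the user enters a room, which is one way an app might customize its experience per room. The function name `watchCurrentRoom` is hypothetical, and the sketch assumes visionOS 2, an open immersive space, and world-sensing permission. What the app does on entering a room is left as a placeholder.

```swift
import ARKit

/// Hypothetical helper: reports which room anchor ARKit considers the current room.
/// Assumes it is called while an immersive space is open.
func watchCurrentRoom() async {
    guard RoomTrackingProvider.isSupported else {
        print("Room tracking is not supported in this environment.")
        return
    }

    let session = ARKitSession()
    let roomTracking = RoomTrackingProvider()

    do {
        try await session.run([roomTracking])
    } catch {
        print("Could not start the ARKit session: \(error)")
        return
    }

    for await update in roomTracking.anchorUpdates {
        let room = update.anchor
        switch update.event {
        case .added, .updated:
            // isCurrentRoom is true for the room the user is in right now.
            if room.isCurrentRoom {
                print("User is in room \(room.id)")
                // Placeholder: load or show content associated with this room.
            }
        case .removed:
            print("Room \(room.id) is no longer tracked")
        }
    }
}
```

Keying per-room content on the anchor's `id` is a design choice of this sketch, not something the sessions prescribe.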

For more detailed information, you can refer to the following sessions:

- Platforms State of the Union

- Get started with HealthKit in visionOS

- Introducing enterprise APIs for visionOS

- Create enhanced spatial computing experiences with ARKit

Relevant Sessions

Introducing enterprise APIs for visionOS

Find out how you can use new enterprise APIs for visionOS to create spatial experiences that enhance employee and customer productivity on Apple Vision Pro.

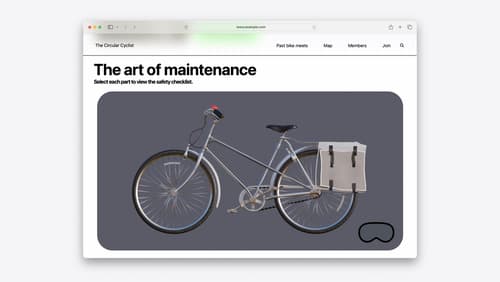

Optimize for the spatial web

Discover how to make the most of visionOS capabilities on the web. Explore recent updates like improvements to selection highlighting, and the ability to present spatial photos and panorama images in fullscreen. Learn to take advantage of existing web standards for dictation and text-to-speech with WebSpeech, spatial soundscapes with WebAudio, and immersive experiences with WebXR.

Design great visionOS apps

Find out how to create compelling spatial computing apps by embracing immersion, designing for eyes and hands, and taking advantage of depth, scale, and space. We’ll share several examples of great visionOS apps and explore how their designers approached creating new experiences for the platform.