How to do voice cloning?

Asked on 2024-07-30


Voice cloning wasn't specifically covered in the provided context from WWDC. However, several sessions discuss related machine learning and AI topics that could be relevant if you are building or enhancing voice-related features in your app.

- What’s new in Create ML: This session covers enhancements to the Create ML app, which can be used to build custom machine learning models, including those for sound analysis.

- Bring your machine learning and AI models to Apple silicon: This session discusses updates to tools that help deploy machine learning models on Apple silicon, which could be useful for optimizing voice models.

- Deploy machine learning and AI models on-device with Core ML: This session provides insights into deploying machine learning models on-device, which is crucial for applications requiring real-time voice processing.

For more specific guidance on voice cloning, you may need to consult additional resources or sessions that focus on speech synthesis and natural language processing.

Platforms State of the Union

Discover the newest advancements on Apple platforms.

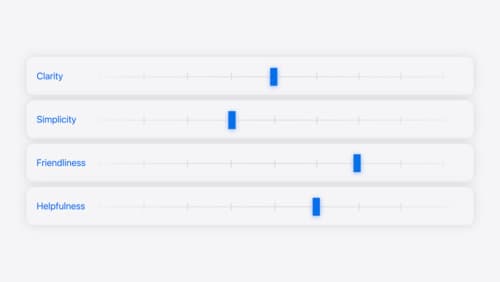

Add personality to your app through UX writing

Every app has a personality that comes across in what you say — and how you say it. Learn how to define your app’s voice and modulate your tone for every situation, from celebratory notifications to error messages. We’ll help you get specific about your app’s purpose and audience and practice writing in different tones.

What’s new in Create ML

Explore updates to Create ML, including interactive data source previews and a new template for building object tracking models for visionOS apps. We’ll also cover important framework improvements, including new time-series forecasting and classification APIs.