How do I run Stable Diffusion on my iPhone?

Asked on 2024-07-30


To run Stable Diffusion on your iPhone, you can follow these steps as outlined in the WWDC sessions:

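- Model Preparation:
  - Start with a pre-trained model, such as a PyTorch model.
  - Convert the model to Core ML format using Core ML Tools (the `coremltools` Python package). This step is also where you optimize the model for Apple devices. For example, weight quantization reduces model size and can improve performance. Key-value caching, which the sessions also cover, mainly benefits transformer language models that generate output token by token, so it matters less for Stable Diffusion.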

- Integration with Core ML:
  - Use the Core ML framework to load and run the model in your app. Core ML uses hardware acceleration across the CPU, GPU, and Neural Engine, and you can choose which of these it may use through the `computeUnits` property of `MLModelConfiguration`.

- Running the Model:
  - Once the model is converted and integrated, you can run it on your iPhone. Core ML supports a wide range of models, including Stable Diffusion, and provides tools to optimize their performance further.
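To make the quantization idea from the Model Preparation step concrete, here is a minimal sketch of symmetric, per-tensor 8-bit linear weight quantization in plain Python. It only illustrates the arithmetic and is not the Core ML Tools API: `quantize_int8` and `dequantize_int8` are hypothetical helper names, and the single-scale symmetric scheme is an assumption chosen for simplicity. For a real model you would use Core ML Tools' own weight-compression utilities (for example `coremltools.optimize.coreml.linear_quantize_weights`).

```python
# Minimal sketch of symmetric, per-tensor 8-bit linear weight quantization.
# Assumption: this only illustrates the arithmetic; it is not the coremltools
# API, and quantize_int8 / dequantize_int8 are hypothetical helper names.
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map float weights to integers in [-127, 127] that share one scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Use a scale of 1.0 for an all-zero (or empty) tensor to avoid dividing by zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round each weight to the nearest step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate floats; each error is about scale / 2 at most."""
    return [v * scale for v in q]


weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
print(q, scale)
print(max(abs(a - b) for a, b in zip(weights, restored)))
```

Each weight now takes 1 byte instead of 2 (float16) or 4 (float32), at the cost of a rounding error of at most half a quantization step. Real tools usually offer finer-grained variants, such as per-channel scales, as well as other compression schemes like palettization; which one preserves image quality best for a given model is something to measure.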

For a detailed walkthrough, you can refer to the following sessions:

- Explore machine learning on Apple platforms (07:32)

- Platforms State of the Union (16:37)

- Deploy machine learning and AI models on-device with Core ML (14:15)

These sessions provide comprehensive guidance on preparing, converting, and running machine learning models, including Stable Diffusion, on Apple devices.

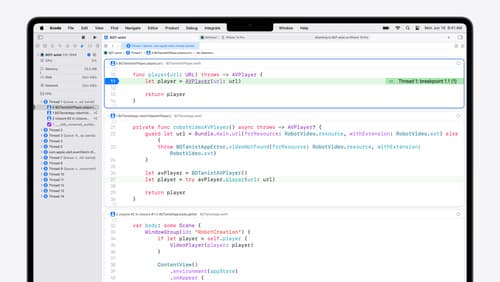

What’s new in Xcode 16

Discover the latest productivity and performance improvements in Xcode 16. Learn about enhancements to code completion, diagnostics, and Xcode Previews. Find out more about updates in builds and explore improvements in debugging and Instruments.

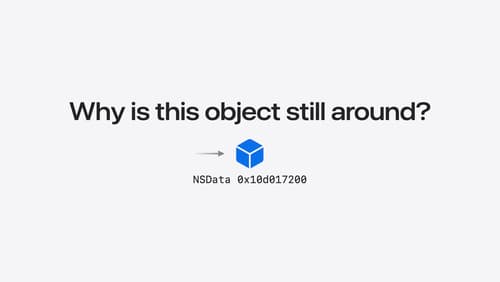

Analyze heap memory

Dive into the basis for your app’s dynamic memory: the heap! Explore how to use Instruments and Xcode to measure, analyze, and fix common heap issues. We’ll also cover some techniques and best practices for diagnosing transient growth, persistent growth, and leaks in your app.

Deploy machine learning and AI models on-device with Core ML

Learn new ways to optimize speed and memory performance when you convert and run machine learning and AI models through Core ML. We’ll cover new options for model representations, performance insights, execution, and model stitching, which can be used together to create compelling and private on-device experiences.