AI Models On-Device

Asked on 2025-06-11

1 search

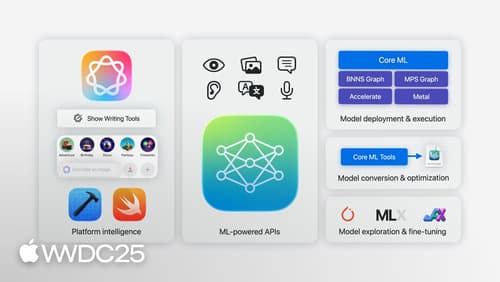

Apple's WWDC sessions provide a comprehensive overview of deploying AI models on-device, particularly using Core ML. Here are some key points from the sessions:

- Core ML: This framework is central to deploying machine learning and AI models on Apple devices. It enables efficient on-device inference by leveraging the CPU, GPU, and Neural Engine in Apple silicon. Core ML supports a wide range of model types and offers tools for optimizing model performance, such as quantization and efficient key-value caching for large language models (LLMs).

- Foundation Models framework: This new framework provides access to the on-device large language model at the core of Apple Intelligence, enabling features such as summarization, extraction, and classification. The model is optimized for device-scale use cases, and because it runs on device, it preserves privacy and works offline.

- Machine learning frameworks: Apple offers domain-specific frameworks, such as Vision for image analysis, Natural Language for text processing, and Sound Analysis for audio recognition. These frameworks are highly optimized for on-device execution.

- Performance tools: Updates to the Core ML performance tools help developers profile and debug models, so they can deploy and run them efficiently on Apple devices.

- Integration with Xcode: Core ML is tightly integrated with Xcode, which simplifies the development workflow and makes it straightforward to add models to apps.
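Two optimizations named in the Core ML point, weight quantization and key-value (KV) caching, can be illustrated without any Apple framework. The following is a minimal conceptual sketch in plain Swift, assuming symmetric per-tensor 8-bit quantization and a single attention head. The types and functions (`QuantizedWeights`, `quantizeSymmetric`, `dequantize`, `KVCache`, `attend`) are hypothetical illustrations, not Core ML or coremltools APIs, and the schemes Core ML actually applies may differ.

```swift
import Foundation

// MARK: - Symmetric 8-bit linear quantization (conceptual sketch)
// Hypothetical helper types; not Core ML's or coremltools' API.

struct QuantizedWeights {
    let values: [Int8]  // quantized integers in -127...127
    let scale: Float    // real value represented by one integer step
}

func quantizeSymmetric(_ weights: [Float]) -> QuantizedWeights {
    // Map the largest magnitude to 127 so every weight fits in an Int8.
    let maxAbs = weights.map { abs($0) }.max() ?? 0
    let scale: Float = maxAbs > 0 ? maxAbs / 127 : 1
    let values = weights.map { w -> Int8 in
        let r = (w / scale).rounded()
        return Int8(max(-127, min(127, r)))
    }
    return QuantizedWeights(values: values, scale: scale)
}

func dequantize(_ q: QuantizedWeights) -> [Float] {
    // Scale each integer back; rounding error per weight is at most scale / 2.
    q.values.map { Float($0) * q.scale }
}

// MARK: - Toy key-value cache for autoregressive attention (conceptual sketch)
// Hypothetical type; a real LLM keeps one cache per layer and head.

struct KVCache {
    private(set) var keys: [[Float]] = []
    private(set) var values: [[Float]] = []

    var count: Int { keys.count }

    // Each decoding step stores only the new token's key and value;
    // earlier entries are reused instead of being recomputed.
    mutating func append(key: [Float], value: [Float]) {
        keys.append(key)
        values.append(value)
    }
}

// Scaled dot-product attention of one query over every cached position.
func attend(query: [Float], cache: KVCache) -> [Float] {
    guard let width = cache.values.first?.count else { return [] }
    let scale = Float(query.count).squareRoot()
    let scores: [Float] = cache.keys.map { key in
        zip(query, key).reduce(Float(0)) { $0 + $1.0 * $1.1 } / scale
    }
    // Subtract the max score before exponentiating for numerical stability.
    let maxScore = scores.max() ?? 0
    let weights: [Float] = scores.map { exp($0 - maxScore) }
    let total = weights.reduce(0, +)
    var output = [Float](repeating: 0, count: width)
    for (weight, value) in zip(weights, cache.values) {
        for i in 0..<width {
            output[i] += weight / total * value[i]
        }
    }
    return output
}
```

In a real decoder, each token's key and value would come from the model's projection layers; here they are passed in directly so the caching pattern stays visible. Storing 8-bit integers plus one scale cuts weight storage to roughly a quarter of 32-bit floats, at the cost of a bounded rounding error.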

For more detail on deploying machine learning and AI models on-device with Core ML, see the session "Deploy machine learning and AI models on-device with Core ML" (00:01:07).

Deploy machine learning and AI models on-device with Core ML

Learn new ways to optimize speed and memory performance when you convert and run machine learning and AI models through Core ML. We’ll cover new options for model representations, performance insights, execution, and model stitching, which can be used together to create compelling and private on-device experiences.

Discover machine learning & AI frameworks on Apple platforms

Tour the latest updates to machine learning and AI frameworks available on Apple platforms. Whether you are an app developer ready to tap into Apple Intelligence, an ML engineer optimizing models for on-device deployment, or an AI enthusiast exploring the frontier of what is possible, we’ll offer guidance to help select the right tools for your needs.

Platforms State of the Union

Discover the newest advancements on Apple platforms.