AVCaptureMultiCamSession

Asked on 2025-06-30


AVCaptureMultiCamSession is not mentioned directly in the provided context. However, the context does cover several aspects of camera capture and control with AVFoundation and related APIs, which are often used alongside AVCaptureMultiCamSession for advanced camera features.

For more detailed information on camera capture and control, the WWDC 2025 session "Enhancing your camera experience with capture controls" may be useful. It covers how to improve user interaction with capture controls and how to map physical button gestures to camera actions programmatically.
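As a rough illustration of mapping a physical button press to a camera action, the sketch below attaches AVKit's `AVCaptureEventInteraction` (iOS 17.2 and later) to a view and reacts when the press ends. `CaptureViewController`, `capturePhoto()`, and `captureCount` are hypothetical names used only for this example; a real app would trigger an actual photo capture and would also need a running capture session.

```swift
import AVKit
import UIKit

/// Hypothetical view controller that reacts to hardware capture-button presses.
@available(iOS 17.2, *)
final class CaptureViewController: UIViewController {
    /// Hypothetical counter standing in for real capture work.
    private(set) var captureCount = 0

    override func viewDidLoad() {
        super.viewDidLoad()
        // AVCaptureEventInteraction delivers physical capture-button events
        // (for example, the volume buttons) to this view.
        let interaction = AVCaptureEventInteraction { [weak self] event in
            // Act on release, so a press that is cancelled does not trigger a capture.
            if event.phase == .ended {
                self?.capturePhoto()
            }
        }
        view.addInteraction(interaction)
    }

    /// Hypothetical placeholder: a real app might call
    /// AVCapturePhotoOutput.capturePhoto(with:delegate:) here.
    private func capturePhoto() {
        captureCount += 1
    }
}
```

Handling `.ended` rather than `.began` is one design choice among several; the session itself describes additional capture-control options beyond this minimal case.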

If you are interested in cinematic video capture, the WWDC 2025 session "Capture cinematic video in your app" shows how to build a cinema-style video capture experience with the new Cinematic Video API.

For specific details on AVCaptureMultiCamSession, you might want to explore Apple's developer documentation or other WWDC sessions that focus on multi-camera setups.
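For orientation only, here is a minimal sketch of how an AVCaptureMultiCamSession can stream the back and front wide-angle cameras at the same time. It assumes a multi-cam-capable iOS device; the function name `makeDualCameraSession()` is hypothetical, and the choice of two `AVCaptureVideoDataOutput`s is just one possible configuration. The key pattern is adding inputs and outputs without implicit connections, then wiring each camera's video port to its own output.

```swift
import AVFoundation

/// Hypothetical helper: builds a session that captures from the back and
/// front wide-angle cameras simultaneously. Returns nil if multi-cam capture
/// is unsupported or any configuration step fails.
func makeDualCameraSession() -> AVCaptureMultiCamSession? {
    // Multi-cam capture is only available on certain hardware.
    guard AVCaptureMultiCamSession.isMultiCamSupported else { return nil }

    let session = AVCaptureMultiCamSession()
    session.beginConfiguration()
    defer { session.commitConfiguration() }

    guard
        let back = AVCaptureDevice.default(.builtInWideAngleCamera, for: .video, position: .back),
        let front = AVCaptureDevice.default(.builtInWideAngleCamera, for: .video, position: .front),
        let backInput = try? AVCaptureDeviceInput(device: back),
        let frontInput = try? AVCaptureDeviceInput(device: front),
        session.canAddInput(backInput), session.canAddInput(frontInput)
    else { return nil }

    // Add inputs without implicit connections so each camera is routed explicitly.
    session.addInputWithNoConnections(backInput)
    session.addInputWithNoConnections(frontInput)

    let backOutput = AVCaptureVideoDataOutput()
    let frontOutput = AVCaptureVideoDataOutput()
    guard session.canAddOutput(backOutput), session.canAddOutput(frontOutput) else { return nil }
    session.addOutputWithNoConnections(backOutput)
    session.addOutputWithNoConnections(frontOutput)

    // Connect each camera's video port to its own data output.
    for (input, device, output) in [(backInput, back, backOutput), (frontInput, front, frontOutput)] {
        guard let port = input.ports(for: .video,
                                     sourceDeviceType: device.deviceType,
                                     sourceDevicePosition: device.position).first
        else { return nil }
        let connection = AVCaptureConnection(inputPorts: [port], output: output)
        guard session.canAddConnection(connection) else { return nil }
        session.addConnection(connection)
    }
    return session
}
```

This sketch leaves out sample buffer delegates and starting the session. AVCaptureMultiCamSession also exposes `hardwareCost` and `systemPressureCost`, which are worth checking after configuration, since multi-camera capture is more demanding than a single-camera session.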

Enhancing your camera experience with capture controls

Learn how to customize capture controls in your camera experiences. We’ll show you how to take photos with all physical capture controls, including new AirPods support, and how to adjust settings with Camera Control.

Capture cinematic video in your app

Discover how the Cinematic Video API enables your app to effortlessly capture cinema-style videos. We’ll cover how to configure a Cinematic capture session and introduce the fundamentals of building a video capture UI. We’ll also explore advanced Cinematic features such as applying a depth of field effect to achieve both tracking and rack focus.

Build compelling spatial photo and video experiences

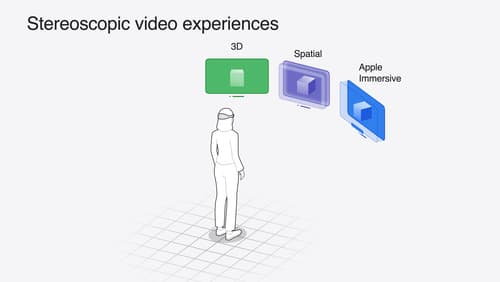

Learn how to adopt spatial photos and videos in your apps. Explore the different types of stereoscopic media and find out how to capture spatial videos in your iOS app on iPhone 15 Pro. Discover the various ways to detect and present spatial media, including the new QuickLook Preview Application API in visionOS. And take a deep dive into the metadata and stereo concepts that make a photo or video spatial.