How can I use a local LLM in Xcode 26?

Asked on 2025-06-12


To use a local LLM (Large Language Model) in Xcode 26, you can follow these steps:

- Enable Local Models: Xcode 26 allows you to use local models running on your Mac or a private network. You can configure this in Xcode's preferences by adding providers like Ollama and LM Studio, which support local models.

- Switch Between Models: Once you have configured a set of models, you can quickly switch between them in the coding assistant when starting a new conversation. This is part of the new intelligence capabilities in Xcode, which also include integration with large language models like ChatGPT for coding assistance.

- Use MLX for Local LLMs: If you want to run large language models on Apple silicon, you can use MLX, a Python library and set of command-line applications designed for this purpose. MLX lets you perform inference and fine-tune models locally on your Mac, without the need for cloud resources.
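Before adding a local provider in Xcode, it can help to confirm that the local server is actually running and to see which model names it exposes. The following is a minimal sketch, not an official Xcode or provider API. It assumes the server offers an OpenAI-style `/v1/models` endpoint and listens on the usual default port (11434 for Ollama, 1234 for LM Studio). The helper names `models_url`, `parse_model_ids`, and `list_local_models` are hypothetical.

```python
import json
import urllib.request

# Default ports are assumptions about a stock install; adjust to your setup.
DEFAULT_PORTS = {"ollama": 11434, "lmstudio": 1234}


def models_url(host: str, port: int) -> str:
    """Build the URL of the OpenAI-style model listing endpoint."""
    return f"http://{host}:{port}/v1/models"


def parse_model_ids(payload: dict) -> list[str]:
    """Extract model identifiers from a body shaped like {"data": [{"id": ...}]}."""
    return [entry["id"] for entry in payload.get("data", []) if "id" in entry]


def list_local_models(
    host: str = "localhost",
    port: int = DEFAULT_PORTS["ollama"],
    timeout: float = 3.0,
) -> list[str]:
    """Query a running local server; urlopen raises URLError if nothing is listening."""
    with urllib.request.urlopen(models_url(host, port), timeout=timeout) as response:
        return parse_model_ids(json.load(response))
```

Calling `list_local_models()` checks Ollama on its default port, and `list_local_models(port=DEFAULT_PORTS["lmstudio"])` checks LM Studio. If the call raises a connection error, the server is probably not running or is listening on a different port.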

For more detail, see the session “What’s new in Xcode” (10:37), which covers the new intelligence features in Xcode, including the use of local models. The session “Explore large language models on Apple silicon with MLX” (03:07) covers running large language models on Apple silicon with MLX.
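As a rough illustration of local inference with MLX, the sketch below uses the `mlx-lm` Python package (`pip install mlx-lm`), which requires a Mac with Apple silicon. The model identifier is only an example of a quantized community model and is an assumption, as are the helper names `build_messages` and `generate_locally`. The first call downloads the model weights.

```python
# Example model ID (assumption): any MLX-compatible model from Hugging Face should work.
EXAMPLE_MODEL = "mlx-community/Mistral-7B-Instruct-v0.3-4bit"


def build_messages(user_prompt: str) -> list[dict]:
    """Wrap a plain prompt in the chat-message structure used by chat templates."""
    return [{"role": "user", "content": user_prompt}]


def generate_locally(user_prompt: str, model_id: str = EXAMPLE_MODEL, max_tokens: int = 128) -> str:
    """Load a model with mlx-lm and generate a reply entirely on the local machine."""
    # Imported lazily so this module can still be loaded where mlx-lm is not installed.
    from mlx_lm import load, generate

    model, tokenizer = load(model_id)
    # Apply the model's own chat template so the prompt matches its training format.
    prompt = tokenizer.apply_chat_template(
        build_messages(user_prompt), add_generation_prompt=True
    )
    return generate(model, tokenizer, prompt=prompt, max_tokens=max_tokens)
```

For example, `generate_locally("Write a Swift function that reverses a string.")` returns the generated text as a string. This runs the model directly in Python and is separate from the provider setup in Xcode.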

What’s new in Xcode

Discover the latest productivity and performance advancements in Xcode 26. Learn how to leverage large language models in your development workflow. Explore editing and debugging enhancements, improved performance and testing tools, and Swift Build - the open-source build system engine used by Xcode.

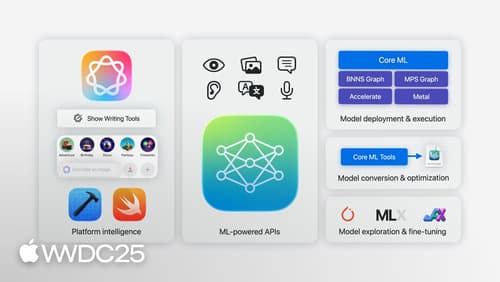

Discover machine learning & AI frameworks on Apple platforms

Tour the latest updates to machine learning and AI frameworks available on Apple platforms. Whether you are an app developer ready to tap into Apple Intelligence, an ML engineer optimizing models for on-device deployment, or an AI enthusiast exploring the frontier of what is possible, we’ll offer guidance to help select the right tools for your needs.

Platforms State of the Union

Discover the newest advancements on Apple platforms.