How can I use MLX?

Asked on 2025-06-24


To use MLX, you can follow these steps:

-

Installation: You can easily install MLX in Python by running the command

pip3 install mlx. This will allow you to start performing computations on arrays by importing MLX in your Python files. -

Basic Operations: Once installed, you can create arrays and perform basic operations on them, similar to how you would with NumPy. For example, you can add two integer arrays and inspect their shape and data type.

-

Advanced Features: MLX offers several advanced features such as unified memory, lazy evaluation, and function transformations. It also includes higher-level packages for building and optimizing neural networks, making it a versatile tool for machine learning on Apple Silicon.

-

Language Support: MLX provides APIs in Python, Swift, C, and C++. This allows you to use MLX in your preferred programming language to run machine learning models on Apple devices like Mac, iPhone, iPad, and Vision Pro.

-

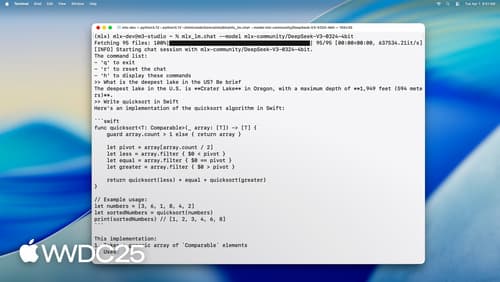

Large Language Models: If you're interested in working with large language models, MLX provides the MLX LM package, which is a Python library and set of command-line applications designed for this purpose. It allows you to perform text generation, model fine-tuning, and more.

-

Swift Integration: For Swift developers, MLX offers a fully featured API that integrates seamlessly with Swift applications. You can add MLX as a package to your Xcode project to start building with it.

For more detail, refer to the WWDC25 sessions "Get started with MLX for Apple silicon" (at 04:21) and "Explore large language models on Apple silicon with MLX" (at 03:07).

Get started with MLX for Apple silicon

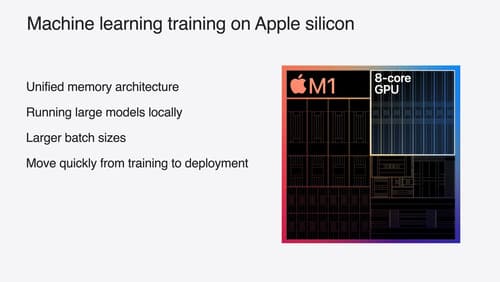

MLX is a flexible and efficient array framework for numerical computing and machine learning on Apple silicon. We’ll explore fundamental features including unified memory, lazy computation, and function transformations. We’ll also look at more advanced techniques for building and accelerating machine learning models across Apple’s platforms using Swift and Python APIs.

Explore large language models on Apple silicon with MLX

Discover MLX LM – designed specifically to make working with large language models simple and efficient on Apple silicon. We’ll cover how to fine-tune and run inference on state-of-the-art large language models on your Mac, and how to seamlessly integrate them into Swift-based applications and projects.

Train your machine learning and AI models on Apple GPUs

Learn how to train your models on Apple Silicon with Metal for PyTorch, JAX and TensorFlow. Take advantage of new attention operations and quantization support for improved transformer model performance on your devices.