How do I create speech input that gets filtered and combined with text from my own database?

Asked on 2025-06-11


To create speech input that gets filtered and combined with text from your own database, you can use Apple's SpeechAnalyzer API to transcribe the audio, then process the resulting text in your own code. SpeechAnalyzer performs speech-to-text on-device with minimal code; filtering the text and combining it with database content is up to your app. Here's a general approach based on the WWDC session "Bring advanced speech-to-text to your app with SpeechAnalyzer":

- Set up SpeechAnalyzer: Use the SpeechAnalyzer class to manage an analysis session, and add a SpeechTranscriber module to it to perform speech-to-text. You pass audio buffers to the analyzer, which processes them asynchronously.

- Transcribe: The SpeechTranscriber module converts spoken audio into text, which you can process further or display in your app. Results are delivered as attributed strings that can include timing data, so the text can be synchronized with audio playback.

- Combine with database text: Once you have the transcribed text, you can filter it (for example, by dropping filler words or keeping only recognized keywords) and combine it with your database, such as by searching for related records or appending the transcript to existing entries.

- Handle results: The transcriber can report both volatile results (fast, real-time guesses that may still change) and finalized results (its best guess). You can display volatile results with lighter opacity and replace them with finalized results as they arrive. For database lookups and writes, it is usually safest to act only on finalized text.
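The filtering and combining steps are ordinary app code, so they can be sketched without the Speech framework at all. The Swift example below is a minimal, hypothetical sketch: `NoteRecord`, `TranscriptBuffer`, `keywords(from:)`, `matchRecords(_:in:)`, and `combine(_:with:)` are illustrative names that are not part of SpeechAnalyzer, and an in-memory array stands in for your database. It assumes you have already converted each transcriber result's attributed text to a plain `String` (for example with `String(result.text.characters)`) and know whether that result is final. It keeps volatile and finalized text apart, then matches only the finalized text against records by simple keyword overlap.

```swift
import Foundation

// Hypothetical stand-in for a row in your own database.
struct NoteRecord {
    let id: Int
    let title: String
    let body: String
}

// Keeps finalized and volatile transcript text apart.
struct TranscriptBuffer {
    private(set) var finalized = ""
    private(set) var volatile = ""

    mutating func apply(_ text: String, isFinal: Bool) {
        if isFinal {
            finalized += text  // finalized text is appended and kept
            volatile = ""      // and replaces the pending volatile guess
        } else {
            volatile = text    // each volatile result supersedes the previous one
        }
    }
}

// Hypothetical filter: lowercase, split on non-alphanumerics, drop filler words.
func keywords(from text: String) -> Set<String> {
    let fillerWords: Set<String> = ["um", "uh", "a", "an", "the", "and", "of", "to"]
    let words = text.lowercased()
        .components(separatedBy: CharacterSet.alphanumerics.inverted)
        .filter { !$0.isEmpty && !fillerWords.contains($0) }
    return Set(words)
}

// Ranks records by how many transcript keywords appear in their title or body.
func matchRecords(_ transcript: String, in records: [NoteRecord]) -> [NoteRecord] {
    let terms = keywords(from: transcript)
    let scored = records.map { record -> (record: NoteRecord, score: Int) in
        let recordWords = keywords(from: record.title + " " + record.body)
        return (record, terms.intersection(recordWords).count)
    }
    return scored
        .filter { $0.score > 0 }
        .sorted { lhs, rhs in
            lhs.score != rhs.score ? lhs.score > rhs.score : lhs.record.id < rhs.record.id
        }
        .map { $0.record }
}

// Combines the spoken text with the best-matching record, if there is one.
func combine(_ transcript: String, with records: [NoteRecord]) -> String {
    guard let best = matchRecords(transcript, in: records).first else {
        return transcript
    }
    return "\(best.title): \(best.body)\nSpoken note: \(transcript)"
}

// Usage with an in-memory array standing in for the database.
let records = [
    NoteRecord(id: 1, title: "Grocery list", body: "milk eggs bread"),
    NoteRecord(id: 2, title: "Garden", body: "water the tomatoes"),
]
var buffer = TranscriptBuffer()
buffer.apply("um buy more mil", isFinal: false)  // volatile guess, not used for matching
buffer.apply("Um, buy more milk and bread", isFinal: true)
print(combine(buffer.finalized, with: records))
// Grocery list: milk eggs bread
// Spoken note: Um, buy more milk and bread
```

Keyword overlap is deliberately naive. With a real database you would likely push the search into the store itself (for example, a full-text query) instead of scanning every record in memory, while keeping the same split between volatile text for display and finalized text for lookups.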

For more detailed guidance, see the session "Bring advanced speech-to-text to your app with SpeechAnalyzer" (from 02:41), which covers the SpeechAnalyzer API and its capabilities.

Bring advanced speech-to-text to your app with SpeechAnalyzer

Discover the new SpeechAnalyzer API for speech to text. We’ll learn about the Swift API and its capabilities, which power features in Notes, Voice Memos, Journal, and more. We’ll dive into details about how speech to text works and how SpeechAnalyzer and SpeechTranscriber can enable you to create exciting, performant features. And you’ll learn how to incorporate SpeechAnalyzer and live transcription into your app with a code-along.

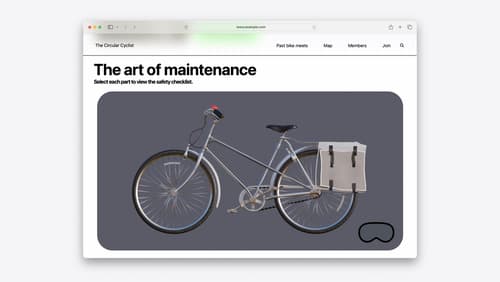

Optimize for the spatial web

Discover how to make the most of visionOS capabilities on the web. Explore recent updates like improvements to selection highlighting, and the ability to present spatial photos and panorama images in fullscreen. Learn to take advantage of existing web standards for dictation and text-to-speech with WebSpeech, spatial soundscapes with WebAudio, and immersive experiences with WebXR.

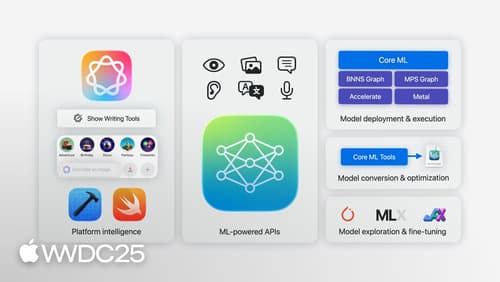

Discover machine learning & AI frameworks on Apple platforms

Tour the latest updates to machine learning and AI frameworks available on Apple platforms. Whether you are an app developer ready to tap into Apple Intelligence, an ML engineer optimizing models for on-device deployment, or an AI enthusiast exploring the frontier of what is possible, we’ll offer guidance to help select the right tools for your needs.