How powerful is the AI session?

Asked on 2025-06-12

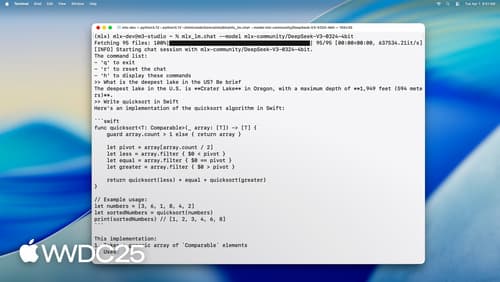

The WWDC session "Explore large language models on Apple silicon with MLX" shows how capable Apple silicon is at running large language models. With MLX, you can run inference on and fine-tune massive models directly on your Mac. For instance, the session discusses running a DeepSeek model with 670 billion parameters on an M3 Ultra with 512 GB of unified memory. This setup allows smooth real-time interaction, with text generated faster than reading speed, even for models with hundreds of billions of parameters, all running locally on a Mac desktop.

For more details, see the session "Explore large language models on Apple silicon with MLX" (00:00:59).
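
To put the 670-billion-parameter and 512 GB figures in perspective, here is a rough back-of-the-envelope sketch of how much memory the model weights alone would need at different precisions. The bit widths are illustrative assumptions (the answer above does not state which precision was used), and the estimate ignores the KV cache, activations, and any quantization metadata, so treat it as a lower bound rather than a measurement.

```python
def weight_footprint_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in decimal gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9


NUM_PARAMS = 670e9        # parameter count quoted in the answer
UNIFIED_MEMORY_GB = 512   # M3 Ultra configuration quoted in the answer

# Hypothetical precisions, chosen for illustration; not stated in the source.
for bits in (16, 8, 4):
    size = weight_footprint_gb(NUM_PARAMS, bits)
    verdict = "fits" if size < UNIFIED_MEMORY_GB else "does not fit"
    print(f"{bits:>2}-bit weights: ~{size:.0f} GB -> {verdict} in {UNIFIED_MEMORY_GB} GB")
```

Under these assumptions, only the lower-precision variant leaves room within 512 GB, which suggests that a model of this size would likely need to be quantized to run on a single machine.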

Explore large language models on Apple silicon with MLX

Discover MLX LM – designed specifically to make working with large language models simple and efficient on Apple silicon. We’ll cover how to fine-tune and run inference on state-of-the-art large language models on your Mac, and how to seamlessly integrate them into Swift-based applications and projects.

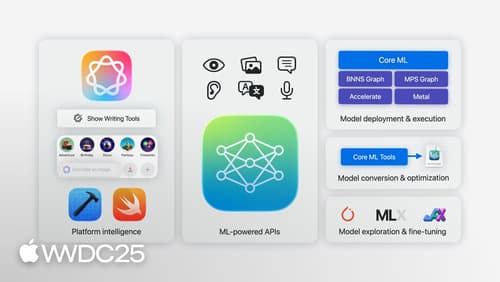

Discover machine learning & AI frameworks on Apple platforms

Tour the latest updates to machine learning and AI frameworks available on Apple platforms. Whether you are an app developer ready to tap into Apple Intelligence, an ML engineer optimizing models for on-device deployment, or an AI enthusiast exploring the frontier of what is possible, we’ll offer guidance to help select the right tools for your needs.

Platforms State of the Union

Discover the newest advancements on Apple platforms.