How do I recognize speakers when using SpeechTranscriber in iOS 26?

Asked on 2025-06-16


In iOS 26, SpeechTranscriber is part of the new SpeechAnalyzer API, which supports advanced speech-to-text capabilities. However, the session material found for this question does not mention speaker recognition as a feature of SpeechTranscriber. The focus is on transcribing audio to text, supporting long-form and conversational use cases, and preserving privacy by running entirely on-device.
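For orientation, here is a minimal sketch of plain file transcription with SpeechAnalyzer and SpeechTranscriber, written from memory of the session's example rather than copied from it. The helper name `transcribeFile` is hypothetical, and the initializer options and method names should be checked against the current SDK, since the API may have changed between releases. The sketch also assumes the speech model for the chosen locale is already installed on the device. It only concatenates transcribed text and does not attribute any text to a speaker.

```swift
import AVFoundation
import Speech

/// Hypothetical helper (name not from the session): transcribes an audio file to text.
/// Assumes the on-device model for `locale` is already installed.
@available(iOS 26.0, macOS 26.0, *)
func transcribeFile(at url: URL, locale: Locale) async throws -> AttributedString {
    // Empty option sets: finalized results only, no extra attributes.
    let transcriber = SpeechTranscriber(
        locale: locale,
        transcriptionOptions: [],
        reportingOptions: [],
        attributeOptions: []
    )

    // Collect results concurrently while the analyzer consumes the file.
    // Each result contributes only text; this sketch reads no speaker information.
    async let transcript = try transcriber.results
        .reduce(AttributedString()) { partial, result in
            partial + result.text
        }

    let analyzer = SpeechAnalyzer(modules: [transcriber])
    let audioFile = try AVAudioFile(forReading: url)

    // Feed the whole file, then finish so the results sequence terminates.
    if let lastSample = try await analyzer.analyzeSequence(from: audioFile) {
        try await analyzer.finalizeAndFinish(through: lastSample)
    } else {
        await analyzer.cancelAndFinishNow()
    }

    return try await transcript
}
```

Calling `try await transcribeFile(at: someURL, locale: Locale(identifier: "en_US"))` from an async context would return the full transcript as an `AttributedString`; separating that text by speaker would need additional logic that the session material does not describe.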

For more detail on the SpeechAnalyzer API and its capabilities, see the session "Bring advanced speech-to-text to your app with SpeechAnalyzer" (02:41).

Bring advanced speech-to-text to your app with SpeechAnalyzer

Discover the new SpeechAnalyzer API for speech to text. We’ll learn about the Swift API and its capabilities, which power features in Notes, Voice Memos, Journal, and more. We’ll dive into details about how speech to text works and how SpeechAnalyzer and SpeechTranscriber can enable you to create exciting, performant features. And you’ll learn how to incorporate SpeechAnalyzer and live transcription into your app with a code-along.

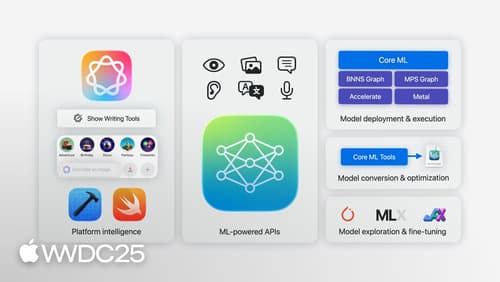

Discover machine learning & AI frameworks on Apple platforms

Tour the latest updates to machine learning and AI frameworks available on Apple platforms. Whether you are an app developer ready to tap into Apple Intelligence, an ML engineer optimizing models for on-device deployment, or an AI enthusiast exploring the frontier of what is possible, we’ll offer guidance to help select the right tools for your needs.

What’s new in visionOS 26

Explore exciting new features in visionOS 26. Discover enhanced volumetric APIs and learn how you can combine the power of SwiftUI, RealityKit and ARKit. Find out how you can build more engaging apps and games using faster hand tracking and input from spatial accessories. Get a sneak peek at updates to SharePlay, Compositor Services, immersive media, spatial web, Enterprise APIs, and much more.