How to use local models from Apple

Asked on 2025-06-11

To use local models from Apple, you can leverage several tools and frameworks presented at WWDC. Here are some key points:

- Xcode Integration: Xcode can use models running locally on your Mac or on a private network. You add them as providers, for example models served by Ollama or LM Studio, alongside any other providers you have configured. The setup lives in Xcode's settings, so you can switch between models easily (What’s new in Xcode).

-

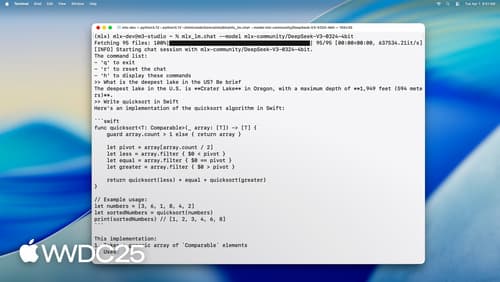

MLX for Apple Silicon: MLX is a Python library and set of command-line applications designed to run large language models locally on Apple Silicon. It supports text generation, model quantization for faster inference, and fine-tuning on your own data. MLX can be used from both Python and Swift (Explore large language models on Apple silicon with MLX).

- Core ML Framework: Core ML is a key framework for deploying machine learning models on Apple devices. It allows you to convert models into the Core ML format, optimize them for Apple hardware, and run them efficiently using the CPU, GPU, and Neural Engine. Core ML supports a wide range of models, including large language and diffusion models (Platforms State of the Union).

- Create ML and Core ML Tools: These tools enable you to train, fine-tune, and deploy models on Apple devices. Create ML allows for on-device training and fine-tuning, while Core ML Tools helps convert and optimize models for Apple silicon (Integrate privacy into your development process).
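Before adding a local provider in Xcode, it can help to confirm that the local server actually responds. The following is a minimal sketch, assuming Ollama is running on its default address (`http://localhost:11434`) and using its `/api/generate` endpoint. The helper names `build_generate_request` and `ask_local_model` are hypothetical, introduced only for this illustration.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; change it if your server differs.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(
    model: str, prompt: str, url: str = OLLAMA_URL
) -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(model: str, prompt: str) -> str:
    """Send the request to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=60) as response:
        # With "stream": False, the reply is one JSON object whose
        # "response" field holds the generated text.
        return json.loads(response.read())["response"]
```

Calling `ask_local_model("llama3.2", "Say hello in one sentence.")` should return text if the server is up and the model has been pulled. The model name here is only a placeholder for whichever model you have installed locally.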

Together, these options cover the main paths for local models on Apple platforms: running existing models through Xcode or MLX, and converting, training, and deploying your own with Core ML and its companion tools.
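For the MLX route, text generation from Python might look like the sketch below. It assumes an Apple silicon Mac with the `mlx-lm` package installed (`pip install mlx-lm`). The model identifier is just one example of a quantized community model and is downloaded on first use. `build_messages` and `generate_locally` are hypothetical helper names, not part of the library.

```python
def build_messages(question: str) -> list[dict]:
    """Wrap a question in the chat-message format used by chat templates."""
    return [{"role": "user", "content": question}]


def generate_locally(
    question: str,
    model_id: str = "mlx-community/Mistral-7B-Instruct-v0.3-4bit",  # example model
    max_tokens: int = 256,
) -> str:
    """Load a model with MLX LM and generate a reply on the local machine."""
    # Imported here so the module can still be imported on machines
    # without MLX (assumption: mlx-lm is installed where this is called).
    from mlx_lm import load, generate

    model, tokenizer = load(model_id)
    # Apply the model's chat template so the prompt matches its training format.
    prompt = tokenizer.apply_chat_template(
        build_messages(question), add_generation_prompt=True
    )
    return generate(model, tokenizer, prompt=prompt, max_tokens=max_tokens)
```

The same package also installs command-line entry points such as `mlx_lm.generate`, which may be more convenient for a quick check than writing a script.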

What’s new in Xcode

Discover the latest productivity and performance advancements in Xcode 26. Learn how to leverage large language models in your development workflow. Explore editing and debugging enhancements, improved performance and testing tools, and Swift Build - the open-source build system engine used by Xcode.

Explore large language models on Apple silicon with MLX

Discover MLX LM – designed specifically to make working with large language models simple and efficient on Apple silicon. We’ll cover how to fine-tune and run inference on state-of-the-art large language models on your Mac, and how to seamlessly integrate them into Swift-based applications and projects.

Explore machine learning on Apple platforms

Get started with an overview of machine learning frameworks on Apple platforms. Whether you’re implementing your first ML model, or an ML expert, we’ll offer guidance to help you select the right framework for your app’s needs.