How would I build a screenshot analyzer with Apple Foundation Models and Vision?

Asked on 2025-06-11

To build a screenshot analyzer with Apple's Foundation Models and Vision frameworks, start with Vision, which offers a wide range of APIs for image analysis. Here's a general approach to get you started:

- Use the Vision framework: The Vision framework provides APIs for various image analysis tasks, such as text recognition and object detection. You can use these APIs to analyze screenshots and extract relevant information.

- Text Recognition: If your goal is to extract text from screenshots, you can use the text recognition capabilities of the Vision framework. The framework has been enhanced to provide document recognition, which can group different document structures, making it easier to process and understand documents. For more details, you can refer to the session "Read documents using the Vision framework".

-

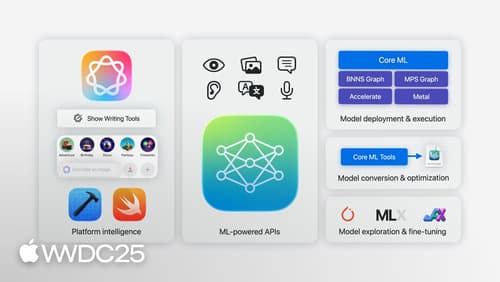

Foundation Models: Apple's Foundation Models framework allows you to tap into on-device intelligence for various tasks. You can use this framework to enhance your app's features with machine learning capabilities. For more information, you can check out the session Discover machine learning & AI frameworks on Apple platforms.

- Swift Enhancements: The Vision framework has introduced a new API with streamlined syntax designed for Swift, making it easier to integrate into your apps. Additionally, Swift concurrency can be used to optimize the processing of multiple images. For more details, see the session "Discover Swift enhancements in the Vision framework".
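The text recognition and concurrency points above can be sketched roughly as follows. This is a minimal sketch, assuming the Swift-native Vision API (`RecognizeTextRequest` and its `perform(on:)` method, iOS 18 / macOS 15 or later) and screenshots stored as files; the function names `recognizeText(in:)` and `recognizeText(inAll:)` are hypothetical helpers, not part of the framework.

```swift
import Foundation
import Vision

/// Recognizes text in one screenshot and returns one string per observation.
/// Hypothetical helper; assumes the Swift-native Vision API is available.
@available(iOS 18.0, macOS 15.0, *)
func recognizeText(in screenshotURL: URL) async throws -> [String] {
    var request = RecognizeTextRequest()
    request.recognitionLevel = .accurate  // favor accuracy over speed

    let observations = try await request.perform(on: screenshotURL)
    // Keep only the highest-confidence candidate for each observation.
    return observations.compactMap { $0.topCandidates(1).first?.string }
}

/// Processes several screenshots concurrently with a throwing task group.
/// Hypothetical helper; results are keyed by the screenshot's file URL.
@available(iOS 18.0, macOS 15.0, *)
func recognizeText(inAll urls: [URL]) async throws -> [URL: [String]] {
    try await withThrowingTaskGroup(of: (URL, [String]).self) { group in
        for url in urls {
            group.addTask {
                let lines = try await recognizeText(in: url)
                return (url, lines)
            }
        }
        var results: [URL: [String]] = [:]
        for try await (url, lines) in group {
            results[url] = lines
        }
        return results
    }
}
```

Running one child task per screenshot lets the system overlap the work; for very large batches you would probably want to cap how many tasks are in flight at once to limit memory use.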

By combining these tools and frameworks, you can build a robust screenshot analyzer that leverages Apple's latest machine learning and vision technologies.
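As one way the two frameworks might fit together, the sketch below hands the lines Vision recognized to the on-device language model and asks for a short summary. It assumes the model is prompted with text only, so the screenshot reaches it solely through the recognized strings, and it assumes an OS release that ships the Foundation Models framework. `makePrompt(from:)` and `describeScreenshot(recognizedLines:)` are hypothetical names, and the instruction and prompt wording are placeholders to adapt.

```swift
import FoundationModels

/// Builds the prompt sent to the model. Plain string handling only.
/// Hypothetical helper; the wording is a placeholder.
func makePrompt(from recognizedLines: [String]) -> String {
    """
    The following text was extracted from a screenshot. \
    Summarize what the screenshot shows in two sentences.

    \(recognizedLines.joined(separator: "\n"))
    """
}

/// Returns a short description of the screenshot, or nil when the
/// on-device model cannot be used on this device right now.
@available(iOS 26.0, macOS 26.0, *)
func describeScreenshot(recognizedLines: [String]) async throws -> String? {
    // The model can be unavailable, for example on unsupported hardware
    // or while Apple Intelligence is turned off.
    guard case .available = SystemLanguageModel.default.availability else {
        return nil
    }

    let session = LanguageModelSession(
        instructions: "You analyze text extracted from screenshots."
    )
    let response = try await session.respond(to: makePrompt(from: recognizedLines))
    return response.content
}
```

Checking availability first lets the app fall back to showing the raw recognized text when the model is not present, rather than failing outright.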

Discover machine learning & AI frameworks on Apple platforms

Tour the latest updates to machine learning and AI frameworks available on Apple platforms. Whether you are an app developer ready to tap into Apple Intelligence, an ML engineer optimizing models for on-device deployment, or an AI enthusiast exploring the frontier of what is possible, we’ll offer guidance to help select the right tools for your needs.

What’s new in visionOS 26

Explore exciting new features in visionOS 26. Discover enhanced volumetric APIs and learn how you can combine the power of SwiftUI, RealityKit and ARKit. Find out how you can build more engaging apps and games using faster hand tracking and input from spatial accessories. Get a sneak peek at updates to SharePlay, Compositor Services, immersive media, spatial web, Enterprise APIs, and much more.

Discover Swift enhancements in the Vision framework

The Vision Framework API has been redesigned to leverage modern Swift features like concurrency, making it easier and faster to integrate a wide array of Vision algorithms into your app. We’ll tour the updated API and share sample code, along with best practices, to help you get the benefits of this framework with less coding effort. We’ll also demonstrate two new features: image aesthetics and holistic body pose.