Image crop

Asked on 2025-06-17

It seems you're interested in image cropping techniques discussed at Apple's WWDC. Here are some relevant insights from the sessions:

- BNNS Graph: The session "What’s new in BNNS Graph" discusses using the Graph Builder API to perform image cropping. It explains how to define pixel buffers and use Swift subscripts for slicing operations to crop images efficiently without additional memory overhead. This is particularly useful for pre-processing images before passing them to machine learning models. You can learn more about this in the BNNSGraphBuilder chapter.

- Vision Framework: In the session "Discover Swift enhancements in the Vision framework," there's a focus on using `GenerateObjectnessBasedSaliencyImageRequest` to identify the main subjects in an image and create a crop around them. This approach is optimized using Swift concurrency to handle multiple images efficiently. For more details, check out the Optimize with Swift Concurrency chapter.

- HDR Image Editing: The session "Use HDR for dynamic image experiences in your app" mentions applying a stretch crop filter to both SDR and HDR images to maintain consistency in editing. This is part of the broader discussion on editing strategies for HDR content. You can explore this in the Edit strategies chapter.

These sessions provide a comprehensive look at different methods and tools for image cropping and processing within Apple's ecosystem.
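To make the saliency-based crop and the slicing idea above more concrete, here is a minimal, framework-neutral sketch in Python. It is not the Vision or BNNS Graph Builder API: `NormalizedRect`, `union`, `to_pixel_bounds`, and `crop` are hypothetical names introduced only for illustration. It assumes the detected subject boxes arrive in normalized 0...1 coordinates with a lower-left origin (the convention Vision uses), and that the image is stored as rows of pixels starting at the top.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class NormalizedRect:
    """Hypothetical box in 0...1 coordinates, origin at the lower-left corner."""
    x: float
    y: float
    width: float
    height: float


def union(rects):
    """Smallest normalized rect containing every rect, or None if there are none."""
    if not rects:
        return None
    min_x = min(r.x for r in rects)
    min_y = min(r.y for r in rects)
    max_x = max(r.x + r.width for r in rects)
    max_y = max(r.y + r.height for r in rects)
    return NormalizedRect(min_x, min_y, max_x - min_x, max_y - min_y)


def to_pixel_bounds(rect, image_width, image_height):
    """Convert to (left, top, right, bottom) in top-left-origin pixel coordinates."""
    left = math.floor(rect.x * image_width)
    right = math.ceil((rect.x + rect.width) * image_width)
    # Flip the vertical axis: normalized y grows upward, pixel rows grow downward.
    top = math.floor((1.0 - (rect.y + rect.height)) * image_height)
    bottom = math.ceil((1.0 - rect.y) * image_height)
    # Clamp so rounding can never push the crop outside the image.
    return (max(0, left), max(0, top),
            min(image_width, right), min(image_height, bottom))


def crop(rows, bounds):
    """Crop a row-major image (a list of pixel rows) by slicing rows, then columns."""
    left, top, right, bottom = bounds
    return [row[left:right] for row in rows[top:bottom]]


# Two overlapping-corner subject boxes on an 8x4 test image whose pixels
# record their own (x, y) position.
boxes = [NormalizedRect(0.25, 0.25, 0.25, 0.25),
         NormalizedRect(0.5, 0.5, 0.25, 0.25)]
bounds = to_pixel_bounds(union(boxes), image_width=8, image_height=4)
image = [[(x, y) for x in range(8)] for y in range(4)]
cropped = crop(image, bounds)
```

Note that Python list slices copy their elements, so this sketch only mirrors the shape of the operation. The slicing described in the BNNS Graph session is presented as avoiding that extra memory overhead.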

What’s new in BNNS Graph

The BNNS Graph Builder API now enables developers to write graphs of operations using the familiar Swift language to generate pre- and post-processing routines and small machine-learning models. BNNS compiles graphs ahead of execution and supports real-time and latency-sensitive use cases such as audio processing. In this session, we revisit last year’s bit-crusher example and simplify the Swift component by removing the reliance on a separate Python file and instead implement the audio effect entirely in Swift. The BNNS Graph Builder API is also suited to pre-processing image data before passing that data to a machine learning model. The session also includes a demonstration of clipping the transparent pixels from an image with an alpha channel.

Discover Swift enhancements in the Vision framework

The Vision Framework API has been redesigned to leverage modern Swift features like concurrency, making it easier and faster to integrate a wide array of Vision algorithms into your app. We’ll tour the updated API and share sample code, along with best practices, to help you get the benefits of this framework with less coding effort. We’ll also demonstrate two new features: image aesthetics and holistic body pose.

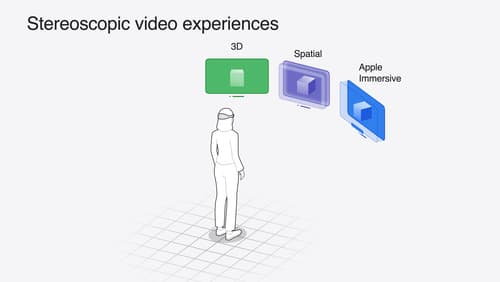

Build compelling spatial photo and video experiences

Learn how to adopt spatial photos and videos in your apps. Explore the different types of stereoscopic media and find out how to capture spatial videos in your iOS app on iPhone 15 Pro. Discover the various ways to detect and present spatial media, including the new QuickLook Preview Application API in visionOS. And take a deep dive into the metadata and stereo concepts that make a photo or video spatial.