Is the Foundation Models framework multi-modal?

Asked on 2025-06-13


The Foundation Models framework, introduced by Apple at WWDC25, gives apps access to the on-device large language model behind Apple Intelligence. It is designed for language tasks such as text extraction, summarization, and content generation. The sessions searched do not describe the framework as multi-modal: they focus on language-based tasks and do not mention support for other input modalities such as images or audio.
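As a rough illustration of the text-focused surface described above, here is a minimal sketch, assuming an app targeting iOS 26 or macOS 26 on an Apple Intelligence-capable device. The `ArticleSummary` type, its fields, the `summarize(_:)` helper, and the instruction and prompt strings are hypothetical. `LanguageModelSession`, `respond(to:generating:)`, `@Generable`, `@Guide`, and `SystemLanguageModel` are framework names as presented at WWDC25; check the current documentation for exact signatures.

```swift
import FoundationModels

// Hypothetical output type. Guided generation asks the model to fill in
// a Swift value instead of returning free-form text.
@Generable
struct ArticleSummary {
    @Guide(description: "A one-sentence summary of the text")
    var headline: String

    @Guide(description: "Up to three key points from the text")
    var keyPoints: [String]
}

// Hypothetical helper: sends a text prompt to the on-device model and
// returns a structured summary, or nil if the model is unavailable.
func summarize(_ text: String) async throws -> ArticleSummary? {
    // The model can be unavailable (unsupported device, Apple Intelligence
    // turned off, or model assets not yet downloaded).
    guard case .available = SystemLanguageModel.default.availability else {
        return nil
    }

    // Instructions steer the session; they are plain text as well.
    let session = LanguageModelSession(
        instructions: "You summarize articles for a reading app."
    )

    // The prompt is a String; the result is decoded into ArticleSummary.
    let response = try await session.respond(
        to: "Summarize the following text:\n\(text)",
        generating: ArticleSummary.self
    )
    return response.content
}
```

In this sketch, both the instructions and the prompt passed to `respond` are plain strings, and nothing passes an image or audio buffer to the model, which is consistent with the language-focused description above.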

For more detail, see the session "Meet the Foundation Models framework," which gives an overview of the framework and its capabilities.

Platforms State of the Union

Discover the newest advancements on Apple platforms.

Meet the Foundation Models framework

Learn how to tap into the on-device large language model behind Apple Intelligence! This high-level overview covers everything from guided generation for generating Swift data structures and streaming for responsive experiences, to tool calling for integrating data sources and sessions for context management. This session has no prerequisites.

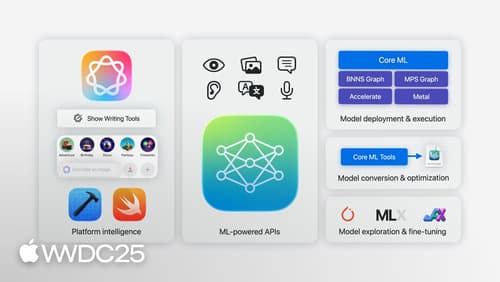

Discover machine learning & AI frameworks on Apple platforms

Tour the latest updates to machine learning and AI frameworks available on Apple platforms. Whether you are an app developer ready to tap into Apple Intelligence, an ML engineer optimizing models for on-device deployment, or an AI enthusiast exploring the frontier of what is possible, we’ll offer guidance to help select the right tools for your needs.