New object detection API for visionOS

Asked on 2025-06-11

Apple introduced a new object tracking API for visionOS at WWDC 2024. It lets apps on Apple Vision Pro recognize and track specific real-world objects around the user and attach virtual content to them, for example virtual instructions anchored to a physical device. The API is part of ARKit, and tracked objects can also be used as anchors in RealityKit and Reality Composer Pro. Tracking relies on a reference object that you create ahead of time by training on a 3D model of the target object in Create ML.

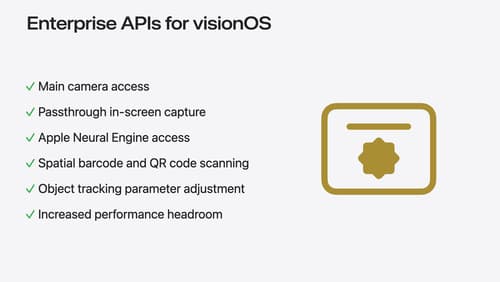

For more detail, see the session "Explore object tracking for visionOS" (05:07), which covers creating reference objects and anchoring virtual content. The session "Introducing enterprise APIs for visionOS" (14:03) discusses enhanced known object tracking through parameter adjustment, one of the new features in visionOS 2.
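As a rough illustration of the ARKit side of this workflow, the sketch below loads a reference object and listens for anchor updates. It assumes a `.referenceobject` file has already been exported from Create ML and that the app has world-sensing authorization; the function name `trackObject(at:)` and the URL parameter are hypothetical, and the code only logs the anchor's pose rather than attaching content. Object tracking needs a physical Apple Vision Pro, so treat this as a starting point, not a tested implementation.

```swift
import ARKit
import Foundation

/// Hypothetical helper: tracks one reference object and logs its anchor updates.
/// `referenceObjectURL` is assumed to point at a .referenceobject file
/// produced by Create ML and bundled with the app.
func trackObject(at referenceObjectURL: URL) async throws {
    // Object tracking is unavailable in some environments (e.g. the simulator).
    guard ObjectTrackingProvider.isSupported else {
        print("Object tracking is not supported here.")
        return
    }

    // Load the trained reference object from disk.
    let referenceObject = try await ReferenceObject(from: referenceObjectURL)

    // Create a provider for the objects to look for and start the session.
    let provider = ObjectTrackingProvider(referenceObjects: [referenceObject])
    let session = ARKitSession()
    try await session.run([provider])

    // Each update carries an ObjectAnchor with the object's current pose.
    for await update in provider.anchorUpdates {
        let anchor = update.anchor
        switch update.event {
        case .added, .updated:
            // originFromAnchorTransform is the pose to place virtual content at.
            print("Anchor \(anchor.id) tracked: \(anchor.isTracked)")
            print(anchor.originFromAnchorTransform)
        case .removed:
            print("Anchor \(anchor.id) removed")
        }
    }
}
```

In a real app the transform from each update would typically drive a RealityKit entity's position instead of being printed, and the session would be kept alive for as long as tracking is needed.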

Introducing enterprise APIs for visionOS

Find out how you can use new enterprise APIs for visionOS to create spatial experiences that enhance employee and customer productivity on Apple Vision Pro.

Platforms State of the Union

Discover the newest advancements on Apple platforms.

Explore object tracking for visionOS

Find out how you can use object tracking to turn real-world objects into virtual anchors in your visionOS app. Learn how you can build spatial experiences with object tracking from start to finish. Find out how to create a reference object using machine learning in Create ML and attach content relative to your target object in Reality Composer Pro, RealityKit or ARKit APIs.