resources on making a good image classifier

Asked on 2025-06-26


To create a good image classifier, you can leverage several resources and tools presented at Apple's WWDC. Here are some key points and sessions that might be helpful:

- Create ML: The session "What’s new in Create ML" gives an overview of enhancements to the Create ML app, a good starting point for building custom machine learning models, including image classifiers. The app trains a model from your own labeled images and exports it as a Core ML model you can add to your app. It also provides tools for visualizing and debugging your annotations, which helps you catch labeling mistakes before they affect how the model behaves.

- Vision framework: The session "Discover Swift enhancements in the Vision framework" covers the redesigned Vision API built on Swift concurrency, including its requests for image classification and other computer vision tasks. Vision offers a built-in image classification request and can also run your own Core ML model, so it is useful both as a quick baseline and for deploying a custom classifier.

- Model compression: The session "Bring your machine learning and AI models to Apple silicon" discusses model compression techniques, which matter when deploying large models to devices with limited memory and storage. Techniques such as palettization and quantization can substantially reduce model size while keeping accuracy close to the original, although the session notes tradeoffs between storage size, latency, power usage and accuracy.
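The Create ML workflow from the first item can also be scripted. Below is a minimal sketch, assuming macOS with the CreateML framework and training/test folders laid out as one subfolder per label, which is the layout `MLImageClassifier.DataSource.labeledDirectories(at:)` expects. `imageCountsPerClass`, `imbalanceRatio`, and `trainClassifier` are hypothetical helper names, and the file-extension list is an assumption. The class-count check is included because a heavily imbalanced training set is a common reason a classifier underperforms on minority classes.

```swift
import Foundation
#if canImport(CreateML)
import CreateML
#endif

/// Hypothetical helper: counts image files in each label subfolder of `root`.
/// Non-directory entries at the top level (e.g. .DS_Store) are skipped.
func imageCountsPerClass(at root: URL,
                         extensions: Set<String> = ["jpg", "jpeg", "png", "heic"]) throws -> [String: Int] {
    let fm = FileManager.default
    var counts: [String: Int] = [:]
    for label in try fm.contentsOfDirectory(atPath: root.path) {
        let classDir = root.appendingPathComponent(label)
        var isDir: ObjCBool = false
        guard fm.fileExists(atPath: classDir.path, isDirectory: &isDir), isDir.boolValue else { continue }
        // Count only files whose extension (case-insensitive) looks like an image.
        let files = try fm.contentsOfDirectory(atPath: classDir.path)
        counts[label] = files.filter {
            extensions.contains(classDir.appendingPathComponent($0).pathExtension.lowercased())
        }.count
    }
    return counts
}

/// Ratio of the largest class to the smallest; infinity if there are no classes
/// or some class has no images.
func imbalanceRatio(_ counts: [String: Int]) -> Double {
    guard let maxCount = counts.values.max(), let minCount = counts.values.min(), minCount > 0 else {
        return .infinity
    }
    return Double(maxCount) / Double(minCount)
}

#if canImport(CreateML)
/// Hypothetical helper: trains with default parameters, reports accuracy on the
/// training, automatic validation, and held-out test sets, then saves the model.
func trainClassifier(trainDir: URL, testDir: URL, output: URL) throws {
    let classifier = try MLImageClassifier(trainingData: .labeledDirectories(at: trainDir))
    let trainAccuracy = 1.0 - classifier.trainingMetrics.classificationError
    let validationAccuracy = 1.0 - classifier.validationMetrics.classificationError
    let testMetrics = classifier.evaluation(on: .labeledDirectories(at: testDir))
    let testAccuracy = 1.0 - testMetrics.classificationError
    print("train: \(trainAccuracy), validation: \(validationAccuracy), test: \(testAccuracy)")
    try classifier.write(to: output)
}
#endif
```

A large gap between training and test accuracy usually points to too little or too uniform data, which is worth checking before tuning anything else.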

Together, these sessions cover the main stages of the workflow: training a classifier (Create ML), running it in your app (Vision), and shrinking it for on-device deployment (model compression).

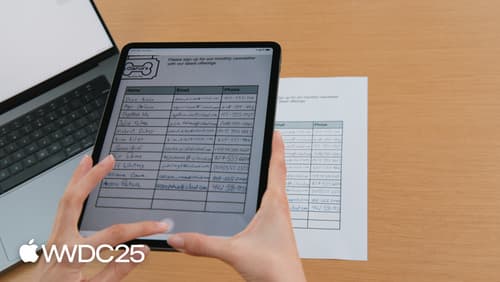

Read documents using the Vision framework

Learn about the latest advancements in the Vision framework. We’ll introduce RecognizeDocumentsRequest and show how you can use it to read lines of text, group them into paragraphs, read tables, and more. We’ll also dive into camera lens smudge detection and how to identify potentially smudged images in photo libraries or your own camera capture pipeline.

Bring your machine learning and AI models to Apple silicon

Learn how to optimize your machine learning and AI models to leverage the power of Apple silicon. Review model conversion workflows to prepare your models for on-device deployment. Understand model compression techniques that are compatible with Apple silicon, and at what stages in your model deployment workflow you can apply them. We’ll also explore the tradeoffs between storage size, latency, power usage and accuracy.
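To make the two compression techniques concrete, here is a minimal sketch on a plain array of weights: symmetric 8-bit linear quantization, and palettization via a simple 1-D k-means that stores each weight as an index into a small lookup table. This is illustrative only; in practice you would apply these to a whole model with a conversion toolkit such as coremltools rather than by hand. The function names are hypothetical, and `storageBytes` assumes a Float16 baseline and a Float16 lookup table.

```swift
import Foundation

/// Symmetric per-tensor linear quantization of Float weights to Int8.
/// Returns the integer codes and the scale needed to dequantize them.
func quantizeInt8(_ weights: [Float]) -> (codes: [Int8], scale: Float) {
    let maxAbs = weights.map { abs($0) }.max() ?? 0
    // Map [-maxAbs, maxAbs] onto [-127, 127]; avoid dividing by zero for all-zero input.
    let scale: Float = maxAbs > 0 ? maxAbs / 127 : 1
    let codes = weights.map { w -> Int8 in
        let r = (w / scale).rounded()
        return Int8(max(-127, min(127, r)))
    }
    return (codes, scale)
}

/// Reconstructs approximate Float weights from Int8 codes.
func dequantize(_ codes: [Int8], scale: Float) -> [Float] {
    codes.map { Float($0) * scale }
}

/// Palettization: clusters weights into 2^bits centroids (the lookup table)
/// with 1-D k-means, and returns each weight's centroid index.
func palettize(_ weights: [Float], bits: Int, iterations: Int = 20) -> (lut: [Float], indices: [Int]) {
    precondition((1...8).contains(bits), "bits must be between 1 and 8")
    let k = 1 << bits
    guard let lo = weights.min(), let hi = weights.max() else { return ([], []) }
    // Initialize centroids evenly across the weight range.
    var lut = (0..<k).map { i -> Float in lo + (hi - lo) * Float(i) / Float(k - 1) }
    var indices = [Int](repeating: 0, count: weights.count)
    for _ in 0..<iterations {
        // Assignment step: nearest centroid for each weight.
        for (i, w) in weights.enumerated() {
            var best = 0
            for c in 1..<k where abs(w - lut[c]) < abs(w - lut[best]) { best = c }
            indices[i] = best
        }
        // Update step: move each centroid to the mean of its assigned weights.
        var sums = [Float](repeating: 0, count: k)
        var counts = [Int](repeating: 0, count: k)
        for (i, w) in weights.enumerated() {
            sums[indices[i]] += w
            counts[indices[i]] += 1
        }
        for c in 0..<k where counts[c] > 0 { lut[c] = sums[c] / Float(counts[c]) }
    }
    return (lut, indices)
}

/// Rough storage estimate: `bitsPerWeight` per weight, plus Float16 lookup-table entries.
func storageBytes(weightCount: Int, bitsPerWeight: Int, lutEntries: Int = 0) -> Int {
    (weightCount * bitsPerWeight + 7) / 8 + lutEntries * 2
}
```

For example, one million Float16 weights take about 2,000,000 bytes, while 4-bit palettization of the same weights takes about 500,032 bytes including a 16-entry table. The reconstruction error introduced by either technique is what you trade against that size reduction.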

Discover Swift enhancements in the Vision framework

The Vision framework API has been redesigned to leverage modern Swift features like concurrency, making it easier and faster to integrate a wide array of Vision algorithms into your app. We’ll tour the updated API and share sample code, along with best practices, to help you get the benefits of this framework with less coding effort. We’ll also demonstrate two new features: image aesthetics and holistic body pose.
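As a rough illustration of the concurrency-based style this session describes, the sketch below runs Vision's built-in image classifier with `async`/`await`. It assumes the macOS 15 / iOS 18 SDK, where `ClassifyImageRequest.perform(on:)` returns classification observations exposing `identifier` and `confidence`; check those types against the SDK you build with. The Vision part is wrapped in `#if canImport(Vision)`, and `classify(imageAt:)` and `topLabels` are hypothetical helper names; `topLabels` is plain Swift that filters and ranks the results.

```swift
import Foundation
#if canImport(Vision)
import Vision

/// Hypothetical helper: classifies the image at `url` with Vision's built-in classifier.
@available(macOS 15.0, iOS 18.0, *)
func classify(imageAt url: URL) async throws -> [(identifier: String, confidence: Float)] {
    let request = ClassifyImageRequest()
    let observations = try await request.perform(on: url)
    return observations.map { (identifier: $0.identifier, confidence: $0.confidence) }
}
#endif

/// Keeps labels at or above `minimumConfidence`, highest confidence first, at most `limit`.
func topLabels(_ scored: [(identifier: String, confidence: Float)],
               minimumConfidence: Float, limit: Int) -> [String] {
    scored
        .filter { $0.confidence >= minimumConfidence }
        .sorted { $0.confidence > $1.confidence }
        .prefix(limit)
        .map { $0.identifier }
}
```

Keeping the ranking logic separate from the request makes it easy to apply the same threshold to results from the built-in classifier and from your own Core ML model.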