Run local LLM

Asked on 2025-06-16

To run large language models (LLMs) locally on your Mac, you can use tools like Ollama and LM Studio, as mentioned in the What’s new in Xcode session. You can configure these local models in Xcode's preferences and switch between them when using Xcode's code intelligence features.
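Before pointing Xcode at a local provider, it can help to confirm that the model server is actually running and has models available. The sketch below is one way to do that for Ollama, not something taken from the session. It assumes Ollama is installed and serving on its default address, `http://localhost:11434`, and it queries Ollama's `/api/tags` endpoint, which lists locally installed models. The helper names `parse_model_names` and `list_local_models` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local address.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: str) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    data = json.loads(payload)
    return [model["name"] for model in data.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask the local server which models it currently has installed."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return parse_model_names(resp.read().decode("utf-8"))


if __name__ == "__main__":
    try:
        for name in list_local_models():
            print(name)
    except OSError as err:
        # URLError (connection refused, timeout) is a subclass of OSError.
        print(f"Could not reach a local model server at {OLLAMA_URL}: {err}")
```

If the script prints one or more model names, the server is up and those models should be available to any client that talks to it. If it reports a connection error, start the server (or pull a model) first.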

What’s new in Xcode

Discover the latest productivity and performance advancements in Xcode 26. Learn how to leverage large language models in your development workflow. Explore editing and debugging enhancements, improved performance and testing tools, and Swift Build - the open-source build system engine used by Xcode.

Combine Metal 4 machine learning and graphics

Learn how to seamlessly combine machine learning into your graphics applications using Metal 4. We’ll introduce the tensor resource and ML encoder for running models on the GPU timeline alongside your rendering and compute work. Discover how shader ML lets you embed neural networks directly within your shaders for advanced effects and performance gains. We’ll also show new debugging tools for Metal 4 ML workloads in action using an example app.

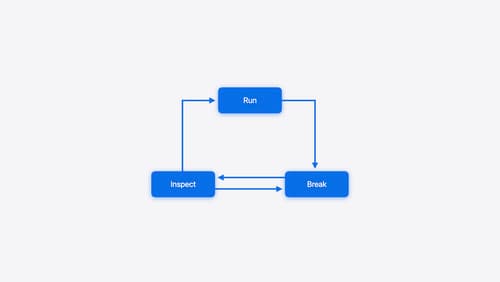

Run, Break, Inspect: Explore effective debugging in LLDB

Learn how to use LLDB to explore and debug codebases. We’ll show you how to make the most of crashlogs and backtraces, and how to supercharge breakpoints with actions and complex stop conditions. We’ll also explore how the “p” command and the latest features in Swift 6 can enhance your debugging experience.