Sessions referencing MLX, Apple's open-source framework for fine-tuning large language models

Asked on 2025-10-23


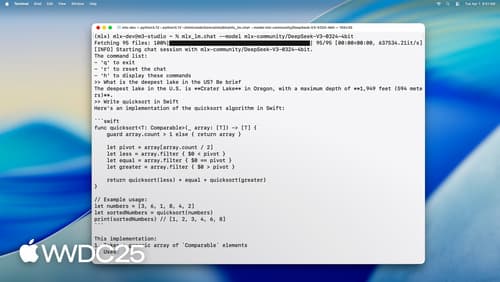

MLX is Apple's open-source array framework for numerical computing and machine learning on Apple silicon, and fine-tuning large language models is one of its main uses. It provides APIs in Python, Swift, C, and C++. Because the CPU and GPU on Apple silicon share a unified memory, MLX can run operations on either device without copying data between them, which makes it well suited to running state-of-the-art models locally on devices such as Macs.

Relevant Sessions

- Explore large language models on Apple silicon with MLX: This session takes an in-depth look at running and fine-tuning large language models on Apple silicon with MLX. It covers text generation, quantization, and fine-tuning.

-

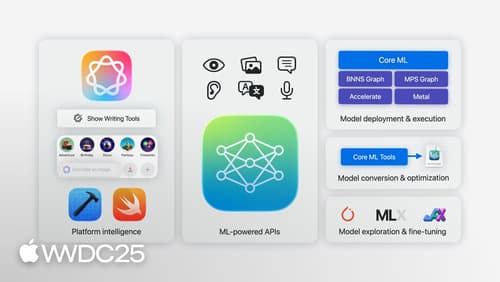

Discover machine learning & AI frameworks on Apple platforms

- This session discusses various machine learning tools available on Apple platforms, including MLX. It highlights MLX's ability to perform efficient fine-tuning and training on Apple Silicon. For more details, see Discover machine learning & AI frameworks on Apple platforms.

- Get started with MLX for Apple silicon: This session introduces MLX, gives an overview of its features, including running large-scale machine learning models on Apple devices, and shows how to get started.
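The first session above lists quantization among its topics. As a rough illustration of why quantization matters for running models locally, the sketch below estimates how much memory a model's weights need at different precisions. It is plain Python arithmetic, not MLX code. The 7-billion-parameter model is hypothetical, and the per-group overhead (one 16-bit scale and one 16-bit bias for every 64 weights) is an assumption chosen for illustration, not a figure from the sessions.

```python
def estimated_weight_size_gb(num_params, bits_per_weight,
                             group_size=None, group_overhead_bits=32):
    """Estimate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    effective_bits = bits_per_weight
    if group_size is not None:
        # Assumption: every group of weights also stores a 16-bit scale
        # and a 16-bit bias, so the overhead is spread across the group.
        effective_bits += group_overhead_bits / group_size
    return num_params * effective_bits / 8 / 1e9


params = 7_000_000_000  # hypothetical 7-billion-parameter model

print(f"16-bit weights: {estimated_weight_size_gb(params, 16):.2f} GB")
print(f"4-bit weights, groups of 64: "
      f"{estimated_weight_size_gb(params, 4, group_size=64):.2f} GB")
```

Under these assumptions the weights shrink from about 14 GB to a little under 4 GB. The estimate covers weights only and ignores activations and any cache used during generation.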

Key Features of MLX

- Fine-Tuning: MLX supports fine-tuning large language models locally on your Mac, so training data does not need to leave the device.

- Unified Memory: MLX uses Apple's unified memory architecture, so the CPU and GPU can operate on the same arrays without copying data between them.

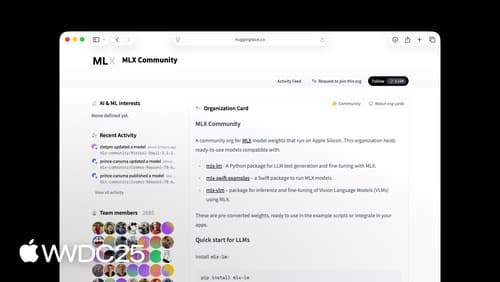

- Open Source: MLX is fully open source, with an active community on platforms like Hugging Face that provides access to a wide range of models.

- APIs: MLX is available in multiple languages, including Python and Swift, which makes it accessible for a variety of development needs.

For a more detailed exploration of MLX and its applications, refer to the sessions mentioned above.

Explore large language models on Apple silicon with MLX

Discover MLX LM – designed specifically to make working with large language models simple and efficient on Apple silicon. We’ll cover how to fine-tune and run inference on state-of-the-art large language models on your Mac, and how to seamlessly integrate them into Swift-based applications and projects.

Discover machine learning & AI frameworks on Apple platforms

Tour the latest updates to machine learning and AI frameworks available on Apple platforms. Whether you are an app developer ready to tap into Apple Intelligence, an ML engineer optimizing models for on-device deployment, or an AI enthusiast exploring the frontier of what is possible, we’ll offer guidance to help select the right tools for your needs.

Get started with MLX for Apple silicon

MLX is a flexible and efficient array framework for numerical computing and machine learning on Apple silicon. We’ll explore fundamental features including unified memory, lazy computation, and function transformations. We’ll also look at more advanced techniques for building and accelerating machine learning models across Apple’s platforms using Swift and Python APIs.
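Two of the features named in this description, lazy computation and function transformations, can be sketched in a few lines of Python. This is a minimal illustration and is not taken from the session. It assumes the `mlx` package is installed (`pip install mlx`), and the `loss` function is a hypothetical toy objective. The import is guarded so the snippet does nothing on machines without MLX.

```python
try:
    import mlx.core as mx
except ImportError:  # MLX is not installed on this machine
    mx = None


def loss(w):
    # Hypothetical toy objective: the sum of squared entries of w.
    return (w * w).sum()


def demo():
    w = mx.array([1.0, 2.0, 3.0])

    # Lazy computation: these calls record the work to be done,
    # but nothing is computed yet.
    value = loss(w)

    # Function transformation: mx.grad returns a new function that
    # computes the gradient of `loss` with respect to its first argument.
    grad_fn = mx.grad(loss)
    gradient = grad_fn(w)

    # mx.eval forces the pending computations to run.
    mx.eval(value, gradient)
    return value.item(), gradient.tolist()


if mx is not None:
    print(demo())
```

Since the gradient of a sum of squares is twice the input, the returned gradient should be `2 * w`. Converting the results with `.item()` or `.tolist()` would also trigger evaluation, so the explicit `mx.eval` call is there mainly to make the lazy step visible.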